k8s Certificate Repair and Renewal

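Postmortem of a k8s certificate-expiry outage and fast cluster recovery

Before renewing any other certificate, make sure that kubelet-client-current.pem is being rotated automatically.

Renewing the special kubelet-client-current.pem certificate

Notes

In production, never change the system time on Kubernetes nodes casually. Keep the clock at the current time while carrying out these steps.

This is a certificate pitfall in k8s 1.15 - 1.18. Reading the Kubernetes source code reveals the rotation mechanism for the kubelet client certificate, kubelet-client-current.pem: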

// jitteryDuration uses some jitter to set the rotation threshold so each node

// will rotate at approximately 70-90% of the total lifetime of the

// certificate. With jitter, if a number of nodes are added to a cluster at

// approximately the same time (such as cluster creation time), they won't all

// try to rotate certificates at the same time for the rest of the life of the

// cluster.

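In production, make sure of the following:

The `rotateCertificates: true` setting in /var/lib/kubelet/config.yaml must be enabled. kubelet-client-current.pem has a rotation mechanism: once the certificate has passed 70%-90% of its total lifetime, the kubelet sends a renewal request to kube-controller-manager (kube-controller-manager-k8s-master). The certificate must therefore never pass 90% of its own lifetime without being rotated. In production this setting is enabled by default and rotation happens automatically, so as time advances normally, kubelet-client-current.pem needs no manual intervention on any node except master1. On the node that was initialized as master (master1), the kubelet is restarted as part of the manual certificate renewal, and that restart also triggers renewal of the kubelet client certificate. On the other masters and the worker nodes, all of which joined the cluster, the kubelet client certificate is renewed automatically.

- Fallback for production: when the current time falls within 70% - 90% of the kubelet client certificate's validity period, set the lifetime of newly issued certificates to 9 years and restart the kubelet so that it obtains a 9-year kubelet client certificate. Only then renew the other certificates.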
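
The 70%-90% window described above can be worked out for a concrete certificate. The following is a minimal sketch, not the kubelet's own logic: the function name `rotation_window` is hypothetical, GNU `date` is assumed, and the kubelet picks a jittered point inside this window rather than either boundary.

```shell
#!/bin/bash
# rotation_window NOT_BEFORE NOT_AFTER (both in epoch seconds):
# print the epoch seconds at 70% and 90% of the certificate lifetime.
rotation_window() {
    local not_before=$1 not_after=$2
    local lifetime=$(( not_after - not_before ))
    echo "$(( not_before + lifetime * 70 / 100 )) $(( not_before + lifetime * 90 / 100 ))"
}

# On a node, feed it the dates of the real certificate (skipped if the file is absent).
cert=/var/lib/kubelet/pki/kubelet-client-current.pem
if [ -r "$cert" ]; then
    nb=$(date -d "$(openssl x509 -in "$cert" -noout -startdate | cut -d= -f2)" +%s)
    na=$(date -d "$(openssl x509 -in "$cert" -noout -enddate | cut -d= -f2)" +%s)
    # Split the two numbers into $1 and $2.
    set -- $(rotation_window "$nb" "$na")
    echo "rotation expected between $(date -d "@$1") and $(date -d "@$2")"
fi
```

If the current time is already past the second date and the certificate has not been replaced, rotation is probably not working on that node.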

cat /var/lib/kubelet/config.yaml

rotateCertificates: true ## make sure rotation is enabled
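
To check this setting on many nodes without reading the file by eye, a small helper can be used. This is only a sketch: `rotation_enabled` is a hypothetical name, and the pattern assumes the key sits on a line of its own, as it does in a kubeadm-generated config.yaml.

```shell
#!/bin/bash
# rotation_enabled FILE: succeed only if FILE sets rotateCertificates to true.
# A trailing "# comment" after the value is tolerated.
rotation_enabled() {
    grep -Eq '^[[:space:]]*rotateCertificates:[[:space:]]*true[[:space:]]*(#.*)?$' "$1"
}

# Report the state of the local kubelet config if it is readable.
conf=/var/lib/kubelet/config.yaml
if [ -r "$conf" ]; then
    if rotation_enabled "$conf"; then
        echo "rotation enabled"
    else
        echo "rotation NOT enabled in $conf"
    fi
fi
```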

Procedure: simulating the failure

Change the certificate configuration on all three masters

vim /etc/kubernetes/manifests/kube-controller-manager.yaml

spec:

containers:

- command:

- kube-controller-manager

- --experimental-cluster-signing-duration=87600h0m0s ## added

- --feature-gates=RotateKubeletServerCertificate=true ## added

Restart the controller managers one at a time

kubectl delete pod kube-controller-manager-k8s-master1 -n kube-system

kubectl delete pod kube-controller-manager-k8s-master2 -n kube-system

kubectl delete pod kube-controller-manager-k8s-master3 -n kube-system

Set the clock to a point between 70% and 90% of the certificate lifetime

Run on master1, master2, master3, and node1:

date -s "2022-05-20"

Restart the kubelet to obtain a new certificate

Run on master1, master2, master3, and node1:

systemctl restart kubelet

Verify the validity period of the new kubelet certificate

openssl x509 -in /var/lib/kubelet/pki/kubelet-client-current.pem -noout -dates

notBefore=May 19 15:57:00 2022 GMT

notAfter=Aug 10 14:35:09 2031 GMT

Move the system time forward to reproduce the failure

On all three master nodes:

date -s "2027-05-20"

At this point the failure has been reproduced.

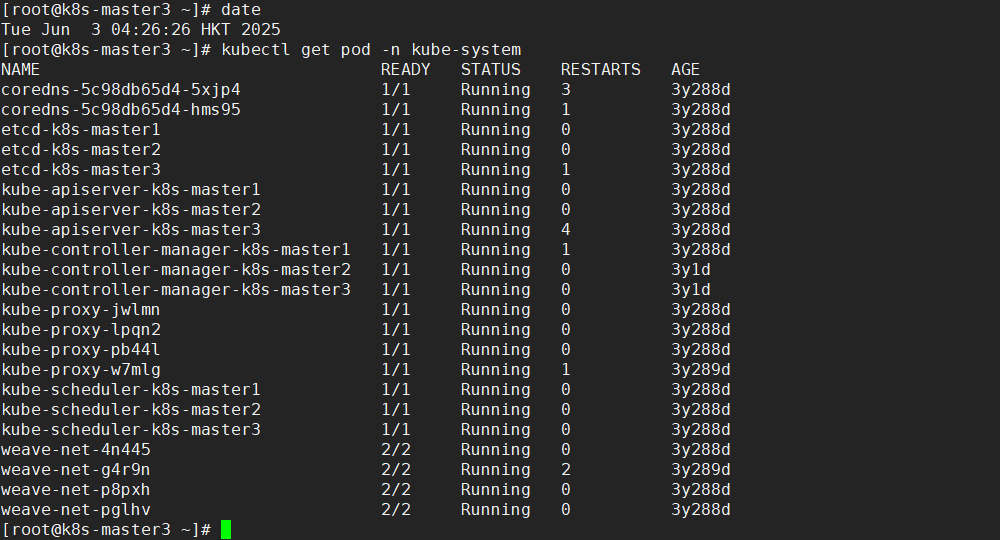

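The following steps recover the cluster.

Procedure: fast recovery

Renew the certificates on the three masters with update-kubeadm-cert.sh

Set the certificate validity to 3285 days, i.e. 9 years

Run on all three masters:

CAER_DAYS=3285 ## set the validity to 9 years (variable inside update-kubeadm-cert.sh)

./update-kubeadm-cert.sh all ## renew the certificates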
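
The two durations used in this procedure can be sanity-checked with shell arithmetic. This is a small sketch with hypothetical helper names, using 365-day years as the 3285 figure does.

```shell
#!/bin/bash
# years_to_days YEARS: days in YEARS years of 365 days each.
years_to_days() { echo $(( $1 * 365 )); }

# hours_to_years HOURS: whole 365-day years contained in HOURS hours.
hours_to_years() { echo $(( $1 / 24 / 365 )); }

years_to_days 9        # prints 3285, the CAER_DAYS value
hours_to_years 87600   # prints 10, the controller-manager signing duration in years
```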

After the api-server is up, a few services may show Running yet still not respond. Try restarting docker and the kubelet.

vim clean.sh

#!/bin/bash

docker rm $(sudo docker ps -qf status=exited)

systemctl restart docker

systemctl restart kubelet

docker rm $(sudo docker ps -qf status=exited)

chmod +x clean.sh

bash clean.sh

For components that still do not respond, try deleting their pods so that they are recreated automatically

for i in `kubectl get pod -A -o wide | grep master3 | awk '{print $2}'` ; do kubectl delete pod $i -n kube-system ;done

for i in `kubectl get pod -A -o wide | grep master2 | awk '{print $2}'` ; do kubectl delete pod $i -n kube-system ;done

for i in `kubectl get pod -A -o wide | grep master1 | awk '{print $2}'` ; do kubectl delete pod $i -n kube-system ;done
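
The loops above list pods from every namespace but always delete in kube-system, so pods in other namespaces are not found. A namespace-aware variant could look like the sketch below. The helper `pods_on_node` is hypothetical, the node name `k8s-master3` is inferred from the pod names used earlier, and the column positions assume the `kubectl get pod -A -o wide` layout of this kubectl version, where NODE is the eighth column.

```shell
#!/bin/bash
# pods_on_node NODE: read `kubectl get pod -A -o wide` output on stdin and
# print "NAMESPACE NAME" for every pod whose NODE column equals NODE.
pods_on_node() {
    awk -v node="$1" 'NR > 1 && $8 == node { print $1, $2 }'
}

# Usage: prints the delete commands instead of running them.
# Remove the `echo` once the list looks right.
# kubectl get pod -A -o wide | pods_on_node k8s-master3 |
#     while read -r ns name; do echo kubectl delete pod "$name" -n "$ns"; done
```

Matching on the exact node column also avoids deleting pods whose name merely contains the string master3.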

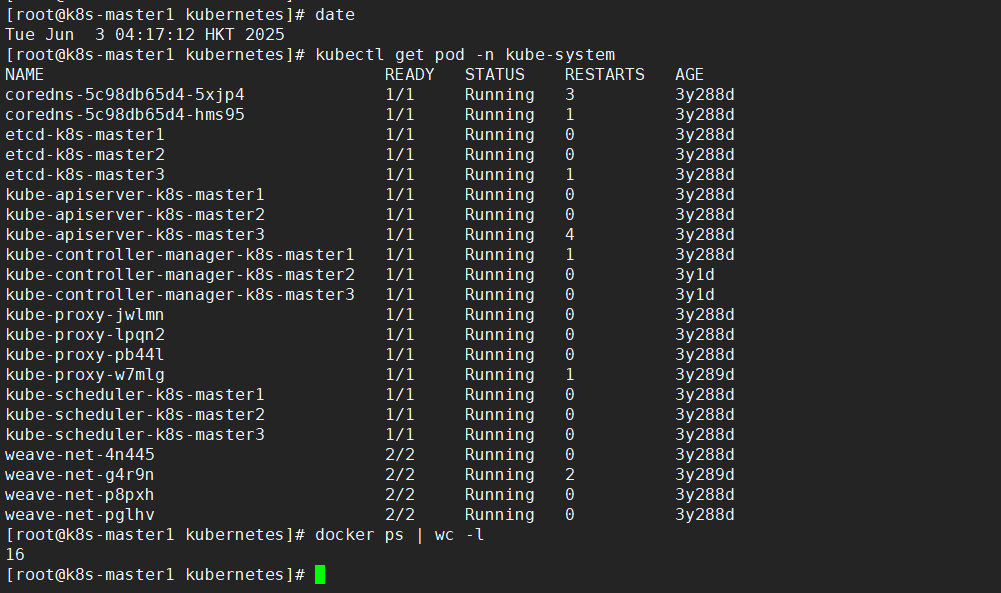

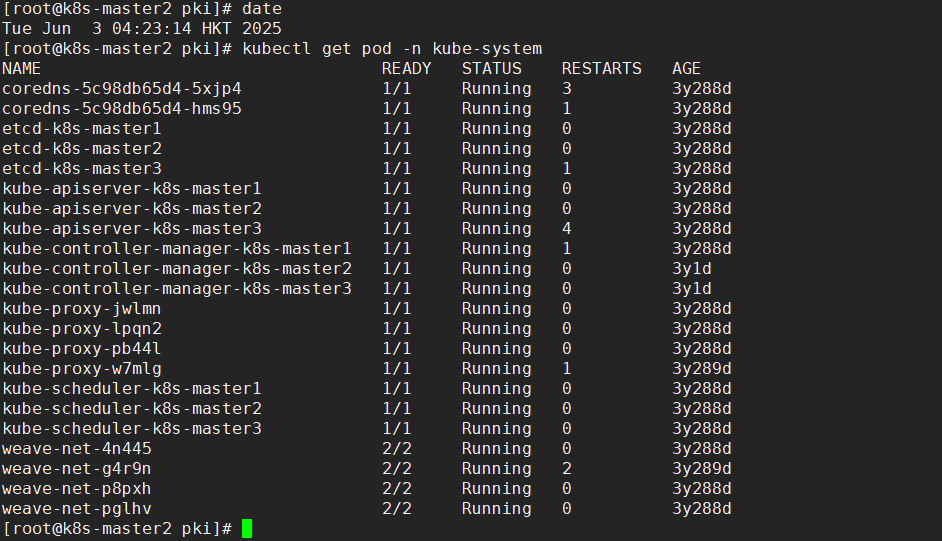

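Once the three masters have recovered, docker ps should normally show at least 14 pod containers.

To prevent k8s components such as etcd from failing to start when they land on a master, keep a tagged copy of the k8s component images on every node. When renewing certificates outside of an outage, be sure to pull the master-node component images onto every node beforehand, so that coredns does not drift to a node, fail to start there, and cause an outage.

############ pull the images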

docker pull registry.aliyuncs.com/google_containers/kube-apiserver:v1.15.0

docker pull registry.aliyuncs.com/google_containers/kube-controller-manager:v1.15.0

docker pull registry.aliyuncs.com/google_containers/kube-scheduler:v1.15.0

docker pull registry.aliyuncs.com/google_containers/kube-proxy:v1.15.0

docker pull registry.aliyuncs.com/google_containers/pause:3.1

docker pull registry.aliyuncs.com/google_containers/etcd:3.3.10

docker pull registry.aliyuncs.com/google_containers/coredns:1.3.1

############ give each image a new tag

docker tag registry.aliyuncs.com/google_containers/kube-apiserver:v1.15.0 k8s.gcr.io/kube-apiserver:v1.15.0

docker tag registry.aliyuncs.com/google_containers/kube-controller-manager:v1.15.0 k8s.gcr.io/kube-controller-manager:v1.15.0

docker tag registry.aliyuncs.com/google_containers/kube-scheduler:v1.15.0 k8s.gcr.io/kube-scheduler:v1.15.0

docker tag registry.aliyuncs.com/google_containers/kube-proxy:v1.15.0 k8s.gcr.io/kube-proxy:v1.15.0

docker tag registry.aliyuncs.com/google_containers/pause:3.1 k8s.gcr.io/pause:3.1

docker tag registry.aliyuncs.com/google_containers/etcd:3.3.10 k8s.gcr.io/etcd:3.3.10

docker tag registry.aliyuncs.com/google_containers/coredns:1.3.1 k8s.gcr.io/coredns:1.3.1

############# remove the original image tags

docker rmi registry.aliyuncs.com/google_containers/kube-apiserver:v1.15.0

docker rmi registry.aliyuncs.com/google_containers/kube-controller-manager:v1.15.0

docker rmi registry.aliyuncs.com/google_containers/kube-scheduler:v1.15.0

docker rmi registry.aliyuncs.com/google_containers/kube-proxy:v1.15.0

docker rmi registry.aliyuncs.com/google_containers/pause:3.1

docker rmi registry.aliyuncs.com/google_containers/etcd:3.3.10

docker rmi registry.aliyuncs.com/google_containers/coredns:1.3.1

docker images
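
The pull, tag, and remove steps above repeat the same three commands per image, so they can be generated from a list. The sketch below only prints the commands. `mirror_commands` is a hypothetical helper, and the registries and image names are the ones used above.

```shell
#!/bin/bash
# mirror_commands SRC_REGISTRY DST_REGISTRY IMAGE...:
# print the docker pull / tag / rmi commands for each IMAGE (name:tag).
mirror_commands() {
    local src=$1 dst=$2
    shift 2
    local img
    for img in "$@"; do
        echo "docker pull $src/$img"
        echo "docker tag $src/$img $dst/$img"
        echo "docker rmi $src/$img"
    done
}

mirror_commands registry.aliyuncs.com/google_containers k8s.gcr.io \
    kube-apiserver:v1.15.0 kube-controller-manager:v1.15.0 \
    kube-scheduler:v1.15.0 kube-proxy:v1.15.0 \
    pause:3.1 etcd:3.3.10 coredns:1.3.1
```

Review the printed commands, then pipe the output to `sh` on each node to execute them.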

Verify the certificates on all masters and that services respond

The service-response check must cover both the nodes and the masters.

# date

Thu May 20 01:50:42 HKT 2027

# kubeadm alpha certs check-expiration

CERTIFICATE EXPIRES RESIDUAL TIME EXTERNALLY MANAGED

admin.conf Dec 16, 2032 16:29 UTC 5y no

apiserver Dec 16, 2032 16:29 UTC 5y no

apiserver-etcd-client Dec 16, 2032 16:29 UTC 5y no

apiserver-kubelet-client Dec 16, 2032 16:29 UTC 5y no

controller-manager.conf Dec 16, 2032 16:29 UTC 5y no

etcd-healthcheck-client Dec 16, 2032 16:29 UTC 5y no

etcd-peer Dec 16, 2032 16:29 UTC 5y no

etcd-server Dec 16, 2032 16:29 UTC 5y no

front-proxy-client Dec 16, 2032 16:29 UTC 5y no

scheduler.conf Dec 16, 2032 16:29 UTC 5y no

# curl -s 127.0.0.1:31404 | grep 'test'

<dd><a href="http://www.ssjinyao.com/test">Test Page<span>>></span></a></dd>

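Renewing certificates before they expire (no outage)

Official method: renew once a year

- Run on the three masters one at a time

- Back up the kubeconfig, the etcd data, and the Kubernetes configuration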

mv $HOME/.kube/config $HOME/.kube/config-`date +"%Y-%m-%d-%H-%M-%S"`

cp -a /var/lib/etcd /var/lib/etcd-`date +"%Y-%m-%d-%H-%M-%S"`

cp -a /etc/kubernetes /etc/kubernetes-`date +"%Y-%m-%d-%H-%M-%S"`

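- Pull and tag a copy of the k8s component images on both the masters and the nodes, so that coredns can still start if it drifts to a node

- Regenerate the certificates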

kubeadm alpha certs renew all --config=kubeadm.yaml

kubeadm init phase kubeconfig all --config kubeadm.yaml

systemctl restart kubelet

- Restart the affected components

# docker ps | awk '/k8s_kube-apiserver/{print$1}' | xargs -r -I '{}' docker restart {} || true

# docker ps | awk '/k8s_kube-controller-manager/{print$1}' | xargs -r -I '{}' docker restart {} || true

# docker ps | awk '/k8s_kube-scheduler/{print$1}' | xargs -r -I '{}' docker restart {} || true

# docker ps | awk '/k8s_etcd/{print$1}' | xargs -r -I '{}' docker restart {} || true

- Update the admin kubeconfig

# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

# chown $(id -u):$(id -g) $HOME/.kube/config
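
The backup step in the list above calls `date` three times, so the three backups can end up with slightly different suffixes. A sketch that shares one timestamp is shown here. `backup_paths` is a hypothetical helper, and unlike the original `mv` it copies the kubeconfig, so kubectl keeps working during the renewal.

```shell
#!/bin/bash
# backup_paths SUFFIX PATH...: copy each existing PATH to PATH-SUFFIX,
# preserving ownership, permissions and timestamps.
backup_paths() {
    local suffix=$1
    shift
    local p
    for p in "$@"; do
        if [ -e "$p" ]; then
            cp -a "$p" "${p}-${suffix}"
            echo "backed up $p to ${p}-${suffix}"
        else
            echo "skipped $p (not found)" >&2
        fi
    done
}

# Usage: one timestamp shared by all three backups.
# ts=$(date +"%Y-%m-%d-%H-%M-%S")
# backup_paths "$ts" "$HOME/.kube/config" /var/lib/etcd /etc/kubernetes
```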

Script method

Change the certificate configuration on all three masters

vim /etc/kubernetes/manifests/kube-controller-manager.yaml

spec:

containers:

- command:

- kube-controller-manager

- --experimental-cluster-signing-duration=87600h0m0s ## added

- --feature-gates=RotateKubeletServerCertificate=true ## added

Restart the controller managers one at a time

kubectl delete pod kube-controller-manager-k8s-master1 -n kube-system

kubectl delete pod kube-controller-manager-k8s-master2 -n kube-system

kubectl delete pod kube-controller-manager-k8s-master3 -n kube-system

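On the masters and nodes, restart the kubelet to proactively renew the kubelet client certificate to ten years

systemctl restart kubelet

# If no new certificate is generated after the restart: systemctl stop kubelet

- Run on the three masters one at a time

- Back up the kubeconfig, the etcd data, and the Kubernetes configuration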

mv $HOME/.kube/config $HOME/.kube/config-`date +"%Y-%m-%d-%H-%M-%S"`

cp -a /var/lib/etcd /var/lib/etcd-`date +"%Y-%m-%d-%H-%M-%S"`

cp -a /etc/kubernetes /etc/kubernetes-`date +"%Y-%m-%d-%H-%M-%S"`

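Set the certificate validity to 3285 days, i.e. 9 years

Renew the certificates

vim update-kubeadm-cert.sh # set the CAER_DAYS variable to 3285 (9 years), then run the script on the masters one at a time

./update-kubeadm-cert.sh all

kubeadm alpha certs check-expiration # show the current certificate expiry dates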

Supplement

Labeling nodes

kubectl label nodes node13 role=code ## add a label

kubectl get node --show-labels ## show labels

Checking certificate expiry dates

kubeadm alpha certs check-expiration

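Image pull errors

In some cases a k8s component cannot start because an exited container still occupies the same name. Remove the exited containers:

docker rm $(docker ps -a | grep Exited| awk '{print $1}')

When Kubernetes components are scheduled onto a node

- Components cannot start if the Kubernetes component images are not available locally, so it is best to pull a local copy of the k8s component images on every node

############ pull the images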

docker pull registry.aliyuncs.com/google_containers/kube-apiserver:v1.15.0

docker pull registry.aliyuncs.com/google_containers/kube-controller-manager:v1.15.0

docker pull registry.aliyuncs.com/google_containers/kube-scheduler:v1.15.0

docker pull registry.aliyuncs.com/google_containers/kube-proxy:v1.15.0

docker pull registry.aliyuncs.com/google_containers/pause:3.1

docker pull registry.aliyuncs.com/google_containers/etcd:3.3.10

docker pull registry.aliyuncs.com/google_containers/coredns:1.3.1

############ give each image a new tag

docker tag registry.aliyuncs.com/google_containers/kube-apiserver:v1.15.0 k8s.gcr.io/kube-apiserver:v1.15.0

docker tag registry.aliyuncs.com/google_containers/kube-controller-manager:v1.15.0 k8s.gcr.io/kube-controller-manager:v1.15.0

docker tag registry.aliyuncs.com/google_containers/kube-scheduler:v1.15.0 k8s.gcr.io/kube-scheduler:v1.15.0

docker tag registry.aliyuncs.com/google_containers/kube-proxy:v1.15.0 k8s.gcr.io/kube-proxy:v1.15.0

docker tag registry.aliyuncs.com/google_containers/pause:3.1 k8s.gcr.io/pause:3.1

docker tag registry.aliyuncs.com/google_containers/etcd:3.3.10 k8s.gcr.io/etcd:3.3.10

docker tag registry.aliyuncs.com/google_containers/coredns:1.3.1 k8s.gcr.io/coredns:1.3.1

############# remove the original image tags

docker rmi registry.aliyuncs.com/google_containers/kube-apiserver:v1.15.0

docker rmi registry.aliyuncs.com/google_containers/kube-controller-manager:v1.15.0

docker rmi registry.aliyuncs.com/google_containers/kube-scheduler:v1.15.0

docker rmi registry.aliyuncs.com/google_containers/kube-proxy:v1.15.0

docker rmi registry.aliyuncs.com/google_containers/pause:3.1

docker rmi registry.aliyuncs.com/google_containers/etcd:3.3.10

docker rmi registry.aliyuncs.com/google_containers/coredns:1.3.1

docker images

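Checking the validity period of the root certificates

Important: if a root certificate expires, the certificates on every master have to be replaced. For important clusters that carry business workloads, it is best to plan a migration.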

openssl x509 -in front-proxy-ca.crt -text -noout

openssl x509 -in ca.crt -text -noout

cd etcd && openssl x509 -in ca.crt -text -noout
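
To turn the `notAfter` date of each root certificate into a number of days remaining, something like the following sketch can be used. `days_until` is a hypothetical helper, GNU `date` is assumed, and the directory /etc/kubernetes/pki is the one used by the renewal script in this article.

```shell
#!/bin/bash
# days_until END_DATE [NOW_EPOCH]: whole days from NOW_EPOCH (default: now)
# until END_DATE, given in the format openssl prints after "notAfter=".
days_until() {
    local end_epoch now_epoch
    end_epoch=$(date -d "$1" +%s) || return 1
    now_epoch=${2:-$(date +%s)}
    echo $(( (end_epoch - now_epoch) / 86400 ))
}

# Usage on a master:
# cd /etc/kubernetes/pki
# for ca in ca.crt front-proxy-ca.crt etcd/ca.crt; do
#     end=$(openssl x509 -in "$ca" -noout -enddate | cut -d= -f2)
#     echo "$ca: $(days_until "$end") days left"
# done
```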

Appendix: certificate renewal script

#!/bin/bash

set -o errexit

set -o pipefail

# set -o xtrace

log::err() {

printf "[$(date +'%Y-%m-%dT%H:%M:%S.%N%z')]: \033[31mERROR: \033[0m$@\n"

}

log::info() {

printf "[$(date +'%Y-%m-%dT%H:%M:%S.%N%z')]: \033[32mINFO: \033[0m$@\n"

}

log::warning() {

printf "[$(date +'%Y-%m-%dT%H:%M:%S.%N%z')]: \033[33mWARNING: \033[0m$@\n"

}

check_file() {

if [[ ! -r ${1} ]]; then

log::err "can not find ${1}"

exit 1

fi

}

# get x509v3 subject alternative name from the old certificate

cert::get_subject_alt_name() {

local cert=${1}.crt

check_file "${cert}"

local alt_name=$(openssl x509 -text -noout -in ${cert} | grep -A1 'Alternative' | tail -n1 | sed 's/[[:space:]]*Address//g')

printf "${alt_name}\n"

}

# get subject from the old certificate

cert::get_subj() {

local cert=${1}.crt

check_file "${cert}"

local subj=$(openssl x509 -text -noout -in ${cert} | grep "Subject:" | sed 's/Subject:/\//g;s/\,/\//;s/[[:space:]]//g')

printf "${subj}\n"

}

cert::backup_file() {

local file=${1}

if [[ ! -e ${file}.old-$(date +%Y%m%d) ]]; then

cp -rp ${file} ${file}.old-$(date +%Y%m%d)

log::info "backup ${file} to ${file}.old-$(date +%Y%m%d)"

else

log::warning "does not backup, ${file}.old-$(date +%Y%m%d) already exists"

fi

}

# generate a certificate of type client, server or peer

# Args:

# $1 (the name of certificate)

# $2 (the type of certificate, must be one of client, server, peer)

# $3 (the subject of certificates)

# $4 (the validity of certificates) (days)

# $5 (the x509v3 subject alternative name of certificate when the type of certificate is server or peer)

cert::gen_cert() {

local cert_name=${1}

local cert_type=${2}

local subj=${3}

local cert_days=${4}

local alt_name=${5}

local cert=${cert_name}.crt

local key=${cert_name}.key

local csr=${cert_name}.csr

local csr_conf="distinguished_name = dn\n[dn]\n[v3_ext]\nkeyUsage = critical, digitalSignature, keyEncipherment\n"

check_file "${key}"

check_file "${cert}"

# backup certificate when certificate not in ${kubeconf_arr[@]}

# kubeconf_arr=("controller-manager.crt" "scheduler.crt" "admin.crt" "kubelet.crt")

# if [[ ! "${kubeconf_arr[@]}" =~ "${cert##*/}" ]]; then

# cert::backup_file "${cert}"

# fi

case "${cert_type}" in

client)

openssl req -new -key ${key} -subj "${subj}" -reqexts v3_ext \

-config <(printf "${csr_conf} extendedKeyUsage = clientAuth\n") -out ${csr}

openssl x509 -in ${csr} -req -CA ${CA_CERT} -CAkey ${CA_KEY} -CAcreateserial -extensions v3_ext \

-extfile <(printf "${csr_conf} extendedKeyUsage = clientAuth\n") -days ${cert_days} -out ${cert}

log::info "generated ${cert}"

;;

server)

openssl req -new -key ${key} -subj "${subj}" -reqexts v3_ext \

-config <(printf "${csr_conf} extendedKeyUsage = serverAuth\nsubjectAltName = ${alt_name}\n") -out ${csr}

openssl x509 -in ${csr} -req -CA ${CA_CERT} -CAkey ${CA_KEY} -CAcreateserial -extensions v3_ext \

-extfile <(printf "${csr_conf} extendedKeyUsage = serverAuth\nsubjectAltName = ${alt_name}\n") -days ${cert_days} -out ${cert}

log::info "generated ${cert}"

;;

peer)

openssl req -new -key ${key} -subj "${subj}" -reqexts v3_ext \

-config <(printf "${csr_conf} extendedKeyUsage = serverAuth, clientAuth\nsubjectAltName = ${alt_name}\n") -out ${csr}

openssl x509 -in ${csr} -req -CA ${CA_CERT} -CAkey ${CA_KEY} -CAcreateserial -extensions v3_ext \

-extfile <(printf "${csr_conf} extendedKeyUsage = serverAuth, clientAuth\nsubjectAltName = ${alt_name}\n") -days ${cert_days} -out ${cert}

log::info "generated ${cert}"

;;

*)

log::err "unknown, unsupported etcd certs type: ${cert_type}, supported type: client, server, peer"

exit 1

esac

rm -f ${csr}

}

cert::update_kubeconf() {

local cert_name=${1}

local kubeconf_file=${cert_name}.conf

local cert=${cert_name}.crt

local key=${cert_name}.key

# generate certificate

check_file ${kubeconf_file}

# get the key from the old kubeconf

grep "client-key-data" ${kubeconf_file} | awk {'print$2'} | base64 -d > ${key}

# get the old certificate from the old kubeconf

grep "client-certificate-data" ${kubeconf_file} | awk {'print$2'} | base64 -d > ${cert}

# get subject from the old certificate

local subj=$(cert::get_subj ${cert_name})

cert::gen_cert "${cert_name}" "client" "${subj}" "${CAER_DAYS}"

# get certificate base64 code

local cert_base64=$(base64 -w 0 ${cert})

# backup kubeconf

# cert::backup_file "${kubeconf_file}"

# set certificate base64 code to kubeconf

sed -i 's/client-certificate-data:.*/client-certificate-data: '${cert_base64}'/g' ${kubeconf_file}

log::info "generated new ${kubeconf_file}"

rm -f ${cert}

rm -f ${key}

# set config for kubectl

if [[ ${cert_name##*/} == "admin" ]]; then

mkdir -p ${HOME}/.kube

local config=${HOME}/.kube/config

local config_backup=${HOME}/.kube/config.old-$(date +%Y%m%d)

if [[ -f ${config} ]] && [[ ! -f ${config_backup} ]]; then

cp -fp ${config} ${config_backup}

log::info "backup ${config} to ${config_backup}"

fi

cp -fp ${kubeconf_file} ${HOME}/.kube/config

log::info "copy the admin.conf to ${HOME}/.kube/config for kubectl"

fi

}

cert::update_etcd_cert() {

PKI_PATH=${KUBE_PATH}/pki/etcd

CA_CERT=${PKI_PATH}/ca.crt

CA_KEY=${PKI_PATH}/ca.key

check_file "${CA_CERT}"

check_file "${CA_KEY}"

# generate etcd server certificate

# /etc/kubernetes/pki/etcd/server

CART_NAME=${PKI_PATH}/server

subject_alt_name=$(cert::get_subject_alt_name ${CART_NAME})

cert::gen_cert "${CART_NAME}" "peer" "/CN=etcd-server" "${CAER_DAYS}" "${subject_alt_name}"

# generate etcd peer certificate

# /etc/kubernetes/pki/etcd/peer

CART_NAME=${PKI_PATH}/peer

subject_alt_name=$(cert::get_subject_alt_name ${CART_NAME})

cert::gen_cert "${CART_NAME}" "peer" "/CN=etcd-peer" "${CAER_DAYS}" "${subject_alt_name}"

# generate etcd healthcheck-client certificate

# /etc/kubernetes/pki/etcd/healthcheck-client

CART_NAME=${PKI_PATH}/healthcheck-client

cert::gen_cert "${CART_NAME}" "client" "/O=system:masters/CN=kube-etcd-healthcheck-client" "${CAER_DAYS}"

# generate apiserver-etcd-client certificate

# /etc/kubernetes/pki/apiserver-etcd-client

check_file "${CA_CERT}"

check_file "${CA_KEY}"

PKI_PATH=${KUBE_PATH}/pki

CART_NAME=${PKI_PATH}/apiserver-etcd-client

cert::gen_cert "${CART_NAME}" "client" "/O=system:masters/CN=kube-apiserver-etcd-client" "${CAER_DAYS}"

# restart etcd

docker ps | awk '/k8s_etcd/{print$1}' | xargs -r -I '{}' docker restart {} || true

log::info "restarted etcd"

}

cert::update_master_cert() {

PKI_PATH=${KUBE_PATH}/pki

CA_CERT=${PKI_PATH}/ca.crt

CA_KEY=${PKI_PATH}/ca.key

check_file "${CA_CERT}"

check_file "${CA_KEY}"

# generate apiserver server certificate

# /etc/kubernetes/pki/apiserver

CART_NAME=${PKI_PATH}/apiserver

subject_alt_name=$(cert::get_subject_alt_name ${CART_NAME})

cert::gen_cert "${CART_NAME}" "server" "/CN=kube-apiserver" "${CAER_DAYS}" "${subject_alt_name}"

# generate apiserver-kubelet-client certificate

# /etc/kubernetes/pki/apiserver-kubelet-client

CART_NAME=${PKI_PATH}/apiserver-kubelet-client

cert::gen_cert "${CART_NAME}" "client" "/O=system:masters/CN=kube-apiserver-kubelet-client" "${CAER_DAYS}"

# generate kubeconf for controller-manager,scheduler,kubectl and kubelet

# /etc/kubernetes/controller-manager,scheduler,admin,kubelet.conf

cert::update_kubeconf "${KUBE_PATH}/controller-manager"

cert::update_kubeconf "${KUBE_PATH}/scheduler"

cert::update_kubeconf "${KUBE_PATH}/admin"

# check kubelet.conf

# https://github.com/kubernetes/kubeadm/issues/1753

set +e

grep kubelet-client-current.pem /etc/kubernetes/kubelet.conf > /dev/null 2>&1

kubelet_cert_auto_update=$?

set -e

if [[ "$kubelet_cert_auto_update" == "0" ]]; then

log::warning "does not need to update kubelet.conf"

else

cert::update_kubeconf "${KUBE_PATH}/kubelet"

fi

# generate front-proxy-client certificate

# use front-proxy-client ca

CA_CERT=${PKI_PATH}/front-proxy-ca.crt

CA_KEY=${PKI_PATH}/front-proxy-ca.key

check_file "${CA_CERT}"

check_file "${CA_KEY}"

CART_NAME=${PKI_PATH}/front-proxy-client

cert::gen_cert "${CART_NAME}" "client" "/CN=front-proxy-client" "${CAER_DAYS}"

# restart apiserver, controller-manager, scheduler and kubelet

docker ps | awk '/k8s_kube-apiserver/{print$1}' | xargs -r -I '{}' docker restart {} || true

log::info "restarted kube-apiserver"

docker ps | awk '/k8s_kube-controller-manager/{print$1}' | xargs -r -I '{}' docker restart {} || true

log::info "restarted kube-controller-manager"

docker ps | awk '/k8s_kube-scheduler/{print$1}' | xargs -r -I '{}' docker restart {} || true

log::info "restarted kube-scheduler"

systemctl restart kubelet

log::info "restarted kubelet"

}

main() {

local node_tpye=$1

KUBE_PATH=/etc/kubernetes

CAER_DAYS=3285

case ${node_tpye} in

# etcd)

# # update etcd certificates

# cert::update_etcd_cert

# ;;

master)

# backup $KUBE_PATH to $KUBE_PATH.old-$(date +%Y%m%d)

cert::backup_file "${KUBE_PATH}"

# update master certificates and kubeconf

cert::update_master_cert

;;

all)

# backup $KUBE_PATH to $KUBE_PATH.old-$(date +%Y%m%d)

cert::backup_file "${KUBE_PATH}"

# update etcd certificates

cert::update_etcd_cert

# update master certificates and kubeconf

cert::update_master_cert

;;

*)

log::err "unknown, unsupported certs type: ${node_tpye}, supported type: all, master"

printf "

example:

'\033[32m./update-kubeadm-cert.sh all\033[0m' update all etcd certificates, master certificates and kubeconf

/etc/kubernetes

├── admin.conf

├── controller-manager.conf

├── scheduler.conf

├── kubelet.conf

└── pki

├── apiserver.crt

├── apiserver-etcd-client.crt

├── apiserver-kubelet-client.crt

├── front-proxy-client.crt

└── etcd

├── healthcheck-client.crt

├── peer.crt

└── server.crt

'\033[32m./update-kubeadm-cert.sh master\033[0m' update only master certificates and kubeconf

/etc/kubernetes

├── admin.conf

├── controller-manager.conf

├── scheduler.conf

├── kubelet.conf

└── pki

├── apiserver.crt

├── apiserver-kubelet-client.crt

└── front-proxy-client.crt

"

exit 1

esac

}

main "$@"

In a test environment, you can move the clock forward to verify that the cluster remains available

master1

master2

master3

# curl -s 127.0.0.1:31404 | grep 'test'

<dd><a href="http://www.ssjinyao.com/test">Test Page<span>>></span></a></dd>

Appendix: a cluster certificate expiry encountered in later work

- For reference, this is the output of the script when it was run:

[2024-01-02T17:08:03.517506065+0800]: INFO: backup /etc/kubernetes to /etc/kubernetes.old-20240102

Signature ok

subject=/CN=etcd-server

Getting CA Private Key

[2024-01-02T17:08:03.623833545+0800]: INFO: generated /etc/kubernetes/pki/etcd/server.crt

Signature ok

subject=/CN=etcd-peer

Getting CA Private Key

[2024-01-02T17:08:03.669417879+0800]: INFO: generated /etc/kubernetes/pki/etcd/peer.crt

Signature ok

subject=/O=system:masters/CN=kube-etcd-healthcheck-client

Getting CA Private Key

[2024-01-02T17:08:03.695522405+0800]: INFO: generated /etc/kubernetes/pki/etcd/healthcheck-client.crt

Signature ok

subject=/O=system:masters/CN=kube-apiserver-etcd-client

Getting CA Private Key

[2024-01-02T17:08:03.722051373+0800]: INFO: generated /etc/kubernetes/pki/apiserver-etcd-client.crt

b9f7b1a762d2

[2024-01-02T17:08:04.330615364+0800]: INFO: restarted etcd

Signature ok

subject=/CN=kube-apiserver

Getting CA Private Key

[2024-01-02T17:08:04.375020513+0800]: INFO: generated /etc/kubernetes/pki/apiserver.crt

Signature ok

subject=/O=system:masters/CN=kube-apiserver-kubelet-client

Getting CA Private Key

[2024-01-02T17:08:04.402700491+0800]: INFO: generated /etc/kubernetes/pki/apiserver-kubelet-client.crt

Signature ok

subject=/CN=system:kube-controller-manager

Getting CA Private Key

[2024-01-02T17:08:04.470952486+0800]: INFO: generated /etc/kubernetes/controller-manager.crt

[2024-01-02T17:08:04.481956357+0800]: INFO: generated new /etc/kubernetes/controller-manager.conf

Signature ok

subject=/CN=system:kube-scheduler

Getting CA Private Key

[2024-01-02T17:08:04.631991064+0800]: INFO: generated /etc/kubernetes/scheduler.crt

[2024-01-02T17:08:04.643149234+0800]: INFO: generated new /etc/kubernetes/scheduler.conf

Signature ok

subject=/O=system:masters/CN=kubernetes-admin

Getting CA Private Key

[2024-01-02T17:08:04.696606019+0800]: INFO: generated /etc/kubernetes/admin.crt

[2024-01-02T17:08:04.774055697+0800]: INFO: generated new /etc/kubernetes/admin.conf

[2024-01-02T17:08:04.806305103+0800]: INFO: backup /root/.kube/config to /root/.kube/config.old-20240102

[2024-01-02T17:08:04.810521622+0800]: INFO: copy the admin.conf to /root/.kube/config for kubectl

[2024-01-02T17:08:04.814804123+0800]: WARNING: does not need to update kubelet.conf

Signature ok

subject=/CN=front-proxy-client

Getting CA Private Key

[2024-01-02T17:08:04.843010074+0800]: INFO: generated /etc/kubernetes/pki/front-proxy-client.crt

a992d9f3df88

[2024-01-02T17:08:09.644624810+0800]: INFO: restarted kube-apiserver

fd321709db1a

[2024-01-02T17:08:10.474198268+0800]: INFO: restarted kube-controller-manager

e1f71d3acbff

[2024-01-02T17:08:11.037594302+0800]: INFO: restarted kube-scheduler

[2024-01-02T17:08:11.561803226+0800]: INFO: restarted kubelet