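CL210 OpenStack Lab Manual

Introducing the Red Hat OpenStack Platform Architecture

Environment Overview

- The Red Hat course uses the power node to emulate power management for the virtual machines.

- The power node provides a CLI that creates virtual baseboard management controllers (BMCs). Through the hypervisor, each RHOSP virtual machine is managed over the IPMI protocol, which emulates bare-metal management.

- Each virtual machine needs its own virtual BMC. Each BMC listens on port 623 of its own address on the power node, according to the node's BMC configuration.

As shown below: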

[root@power ~]# netstat -tulnp | grep 623

udp 0 0 172.25.249.112:623 0.0.0.0:* 1259/python

udp 0 0 172.25.249.106:623 0.0.0.0:* 1246/python

udp 0 0 172.25.249.103:623 0.0.0.0:* 1233/python

udp 0 0 172.25.249.102:623 0.0.0.0:* 1220/python

udp 0 0 172.25.249.101:623 0.0.0.0:* 1207/python

[root@power ~]# cat /etc/bmc/vms

controller0,172.25.249.101

compute0,172.25.249.102

ceph0,172.25.249.103

computehci0,172.25.249.106

compute1,172.25.249.112

# Power a node on or off remotely

(undercloud) [stack@director ~]$ ipmitool -I lanplus -U admin -P password -H 172.25.249.112 power on
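The name-to-address mapping in /etc/bmc/vms can drive the same ipmitool call for every node. The following is a minimal sketch under stated assumptions: the helper name `bmc_power_cmds` is hypothetical, the credentials are the ones used in the command above, and the function only prints the commands instead of running them.

```shell
#!/bin/sh
# Hypothetical helper: read "name,ip" lines (the /etc/bmc/vms layout shown
# above) on stdin and print one ipmitool command per node.
# The action defaults to "status"; "on" and "off" are the other power
# actions relevant to this lab.
bmc_power_cmds() {
    action=${1:-status}
    while IFS=, read -r name ip; do
        # Skip blank lines.
        [ -n "$name" ] || continue
        printf '# %s\n' "$name"
        printf 'ipmitool -I lanplus -U admin -P password -H %s power %s\n' "$ip" "$action"
    done
}

# Example with two entries copied from /etc/bmc/vms.
printf 'controller0,172.25.249.101\ncompute0,172.25.249.102\n' | bmc_power_cmds status
```

Printing rather than executing keeps the sketch safe to try outside the lab; on the director node the output could be reviewed and then piped to `sh`.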

- List the undercloud deployment images

(undercloud) [stack@director ~]$ openstack image list

+--------------------------------------+------------------------+--------+

| ID | Name | Status |

+--------------------------------------+------------------------+--------+

| fab32297-d1e2-4598-9e4e-6b02c8982c6f | bm-deploy-kernel | active |

| bc8408a7-3074-4c56-8992-a56637f561e0 | bm-deploy-ramdisk | active |

| ff82c9a3-eead-489f-a862-ca7b2b245a60 | overcloud-full | active |

| 86901767-c252-4711-867e-a06eb52b4bfc | overcloud-full-initrd | active |

| 4b823922-4757-468f-aad4-1e7bf0f585fa | overcloud-full-vmlinuz | active |

+--------------------------------------+------------------------+--------+

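- bm-deploy-kernel and bm-deploy-ramdisk are the images used to boot a server for introspection. The first time an overcloud server boots, it uses these two images to run a hardware check, collects the server's details, hands them to the ironic database, and then powers the server off.

- The second boot uses overcloud-full-initrd and overcloud-full-vmlinuz to start the server again.

- Once the server is up, the overcloud-full image is written to its disk, and the ks script then deploys the server as a controller node or a compute node. The overcloud-full image contains not only the operating system but also all Ceph and OpenStack packages; Heat orchestration deploys each node into its role.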

Troubleshooting Communication Between OpenStack Service Components

- Check the Keystone log file: /var/log/containers/keystone/keystone.log

- Check the RabbitMQ message broker (oslo)

- Check each service's API log: /var/log/containers/<service_name>/api.log

- Check the logs of each service component

# openstack catalog show <service_name> --debug

# Example:

# openstack catalog show nova --debug

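Describing the OpenStack Control Plane

Describing API Services

RabbitMQ Message Queue

The rabbitmqctl command:

- Because OpenStack runs in containers, all RabbitMQ commands must be run inside the container. Typical rabbitmqctl commands:

rabbitmqctl report # Show the current state of the RabbitMQ daemon, including the types and numbers of exchanges and queues

rabbitmqctl list_users # List the RabbitMQ users

rabbitmqctl list_user_permissions guest # List the permissions of the guest user

rabbitmqctl list_exchanges # List the exchanges configured by default in the RabbitMQ daemon

rabbitmqctl list_queues # List the available queues and their attributes

rabbitmqctl list_consumers # List all consumers and the queues they subscribe to

- Trace RabbitMQ messages. Tracing increases system load, so be sure to disable it once the investigation is finished:

# rabbitmqctl trace_on # Enable tracing

# rabbitmqctl trace_off # Disable tracing

- View RabbitMQ information in the container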

[root@controller0 ~]# docker ps | grep rabbit

[root@controller0 ~]# docker container exec rabbitmq-bundle-docker-0 rabbitmqctl report

[root@controller0 ~]# docker container exec rabbitmq-bundle-docker-0 rabbitmqctl list_users

[root@controller0 ~]# docker container exec rabbitmq-bundle-docker-0 rabbitmqctl list_exchanges

[root@controller0 ~]# docker container exec rabbitmq-bundle-docker-0 rabbitmqctl list_queues

[root@controller0 ~]# docker container exec rabbitmq-bundle-docker-0 rabbitmqctl list_consumers

Exercise: Enable RabbitMQ tracing in the container

- Prepare the environment

[student@workstation ~]$ lab controlplane-message setup

- Run the exercise

[root@controller0 ~]# docker exec -t rabbitmq-bundle-docker-0 rabbitmqctl --help

[root@controller0 ~]# docker exec -t rabbitmq-bundle-docker-0 rabbitmqctl --help | grep add

# Create a user and grant permissions

[root@controller0 ~]# docker exec -t rabbitmq-bundle-docker-0 rabbitmqctl add_user user1 redhat

[root@controller0 ~]# docker exec -t rabbitmq-bundle-docker-0 rabbitmqctl set_permissions user1 ".*" ".*" ".*"

[root@controller0 ~]# docker exec -t rabbitmq-bundle-docker-0 rabbitmqctl set_user_tags user1 administrator

# Enable tracing

[root@controller0 ~]# docker exec -t rabbitmq-bundle-docker-0 rabbitmqctl trace_on

# Confirm that the RabbitMQ service is listening on port 5672

[root@controller0 ~]# ss -tulnp | grep :5672

# Test tracing

[root@controller0 ~]# ./rmq_trace.py -u user1 -p redhat -t 172.24.1.1 > /tmp/rabbit.user

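[student@workstation ~]$ source developer1-finance-rc

[student@workstation ~(developer1-finance)]$ openstack server create --image rhel7 --flavor default --security-group default --key-name example-keypair --nic net-id=finance-network1 finance-server2 --wait

# Disable tracing

[root@controller0 ~]# docker exec -t rabbitmq-bundle-docker-0 rabbitmqctl trace_off

- Clean up the environment

[student@workstation ~]$ lab controlplane-message cleanup

- Notes

set_permissions uses regular expressions to select the resources on which a user has configure, write, and read permissions. Resources here are the exchanges, queues, and so on within a virtual host. Configure permission allows creating and deleting resources and changing their behavior; write permission allows publishing messages to a resource; read permission allows retrieving messages from a resource.

For example, '^(amq\.gen.*|amq\.default)$' matches the server-generated and default exchanges, while '^$' matches no resource. Syntax: set_permissions [-p <vhostpath>] <user> <conf> <write> <read>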
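The effect of a permission pattern can be checked offline before it is applied. The sketch below is an approximation only: `perm_matches` is a hypothetical helper, and `grep -E` stands in for RabbitMQ's own regular-expression engine, which may differ in corner cases.

```shell
#!/bin/sh
# Hypothetical helper: succeed when a resource name matches a permission
# pattern. grep -E is used as a stand-in for RabbitMQ's regex engine.
perm_matches() {
    pattern=$1
    name=$2
    printf '%s\n' "$name" | grep -Eq -- "$pattern"
}

# Try the example pattern against a few resource names.
for name in amq.gen-Xa2 amq.default nova; do
    if perm_matches '^(amq\.gen.*|amq\.default)$' "$name"; then
        echo "$name: allowed"
    else
        echo "$name: denied"
    fi
done
```

The ".*" patterns granted to user1 in the exercise match every resource name, which is why that user can configure, write to, and read from everything in the virtual host.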

Controller Node Services

- Set up the environment

[student@workstation ~]$ lab controlplane-services setup

Exercise: Access the MySQL database service

[root@controller0 ~]# docker ps | grep galera

c1d8d762c9ef 172.25.249.200:8787/rhosp13/openstack-mariadb:pcmklatest "/bin/bash /usr/lo..." 28 hours ago Up 28 hours galera-bundle-docker-0

[root@controller0 ~]# docker exec -t galera-bundle-docker-0 mysql -u root -e "show databases"

[root@controller0 ~]# docker exec -it galera-bundle-docker-0 /bin/bash

()[root@controller0 /]# mysql -u root

MariaDB [(none)]> use keystone;

MariaDB [keystone]> show tables;

MariaDB [keystone]> select * from role;

- Find the database password

[root@controller0 ~]# cd /var/lib/config-data/puppet-generated/mysql/root/

[root@controller0 root]# ls -a

. .. .my.cnf

[root@controller0 root]# vim .my.cnf

[root@controller0 root]# cat .my.cnf

[client]

user=root

password="dgMgg8QfNM"

[mysql]

user=root

password="dgMgg8QfNM"

[root@controller0 ~]# ss -tnlp | grep 3306

LISTEN 0 128 172.24.1.1:3306 *:* users:(("mysqld",pid=15583,fd=33))

LISTEN 0 128 172.24.1.50:3306 *:* users:(("haproxy",pid=14538,fd=26))

# Log in to the database with the password dgMgg8QfNM

[root@controller0 ~]# mysql -uroot -pdgMgg8QfNM -h172.24.1.1

MariaDB [(none)]> show databases;
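Instead of copying the password by hand, it can be read out of the .my.cnf file shown above. This is a minimal sketch, assuming the file keeps the simple `key=value` layout printed earlier; the function name `mycnf_client_password` is hypothetical and the demo runs against a throw-away file, not the real one.

```shell
#!/bin/sh
# Hypothetical helper: print the password stored in the [client] section of
# a my.cnf-style file, with surrounding double quotes removed.
mycnf_client_password() {
    awk '
        /^\[/ { in_client = ($0 == "[client]") }   # track the current section
        in_client && /^password=/ {
            value = $0
            sub(/^password=/, "", value)            # drop the key
            gsub(/^"|"$/, "", value)                # strip the quotes
            print value
            exit
        }
    ' "$1"
}

# Example against a temporary file with the same layout as .my.cnf.
tmp=$(mktemp)
printf '[client]\nuser=root\npassword="example-secret"\n' > "$tmp"
mycnf_client_password "$tmp"
rm -f "$tmp"
```

On the controller, the result could be passed to the client as `mysql -uroot -p"$(mycnf_client_password /var/lib/config-data/puppet-generated/mysql/root/.my.cnf)" -h172.24.1.1`.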

Find the Redis password and log in

# Inspect the container

[root@controller0 ~]# docker inspect redis-bundle-docker-0

[root@controller0 ~]# grep -i pass /var/lib/config-data/puppet-generated/redis/etc/redis.conf

Or:

[root@controller0 ~]# netstat -tulnp | grep redis

tcp 0 0 172.24.1.1:6379 0.0.0.0:* LISTEN 16352/redis-server

[root@controller0 ~]# redis-cli -h 172.24.1.1

172.24.1.1:6379> AUTH wuTuATnYuMrzJQGj8KxBb6ZAW

OK

172.24.1.1:6379> KEYS *

172.24.1.1:6379> type gnocchi-config

hash

172.24.1.1:6379> type _tooz_beats:05a78b9e-5846-42a8-a8a8-1bf3abddea79

string

- View the cluster resources

[root@controller0 ~]# pcs resource show

[root@controller0 ~]# pcs resource disable openstack-manila-share

[root@controller0 ~]# pcs resource enable openstack-manila-share

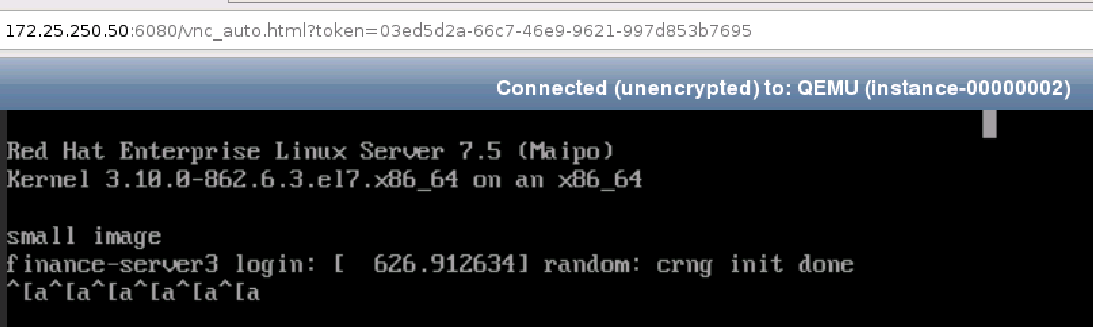

View the VNC console URL

[student@workstation ~]$ source developer1-finance-rc

[student@workstation ~(developer1-finance)]$ openstack console url show finance-server3

+-------+------------------------------------------------------------------------------------+

| Field | Value |

+-------+------------------------------------------------------------------------------------+

| type | novnc |

| url | http://172.25.250.50:6080/vnc_auto.html?token=03ed5d2a-66c7-46e9-9621-997d853b7695 |

+-------+------------------------------------------------------------------------------------+

# Check the listening port on the controller node

[root@controller0 ~]# netstat -tulnp | grep 6080

[root@foundation0 ~]# firefox http://172.25.250.50:6080/vnc_auto.html?token=03ed5d2a-66c7-46e9-9621-997d853b7695

- Clean up the environment

[student@workstation ~]$ lab controlplane-services cleanup

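Identity Management

Managing an Integrated IdM Back End

# View user and domain information as the administrator

(undercloud) [stack@director ~]$ source overcloudrc

(overcloud) [stack@director ~]$ openstack domain list

(overcloud) [stack@director ~]$ openstack user list --domain Example

# View the token issued to a user in the current domain

[student@workstation ~]$ source developer1-finance-rc

[student@workstation ~(developer1-finance)]$ openstack token issue

- Exercise: Configure the dashboard so that users from other domains can log in

- Configure the Horizon dashboard

- By default, the Identity service uses the default domain

- When an external authentication back end is integrated, a separate domain must be created for that back end

- When the IdM back end is integrated, multidomain support must be enabled in the Horizon dashboard on the controller node, as shown below:

[root@controller0 ~]# vim /var/lib/config-data/puppet-generated/horizon/etc/openstack-dashboard/local_settings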

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

[root@controller0 ~]# docker restart horizon

- Configure the Identity service:

- Edit the Keystone configuration file so that the dashboard's multidomain support works

As shown below:

[root@controller0 ~]# vim /var/lib/config-data/puppet-generated/keystone/etc/keystone/keystone.conf

[identity]

domain_specific_drivers_enabled = true

[root@controller0 ~]# docker restart keystone

Managing Identity Service Tokens

- Exercise: Rotate the Fernet keys

Reference documentation link (reachable only from within the Red Hat OpenStack lab environment).

# Before rotation

[root@controller0 ~]# ls /var/lib/config-data/puppet-generated/keystone/etc/keystone/fernet-keys

0 1

# Rotate the keys

[stack@director ~]$ source ~/stackrc

[stack@director ~]$ openstack workflow execution create tripleo.fernet_keys.v1.rotate_fernet_keys '{"container": "overcloud"}'

[stack@director ~]$ openstack workflow execution show 58c9c664-b966-4f82-b368-af5ed8de5b47

# After rotation

[root@controller0 ~]# ls /var/lib/config-data/puppet-generated/keystone/etc/keystone/fernet-keys

0 1 2
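The change from "0 1" to "0 1 2" follows from how Keystone numbers Fernet keys: index 0 is the staged key and the highest index is the primary key. The sketch below only simulates that index bookkeeping in a temporary directory. It is an illustration under assumptions: the function name is hypothetical, no real key material is generated, and the pruning of old keys governed by max_active_keys is left out.

```shell
#!/bin/sh
# Simulate the index bookkeeping of one Fernet rotation in a directory.
# Assumption: key files are named by integer index, 0 is the staged key and
# the highest index is the primary key. Real keys are NOT generated here.
rotate_key_indices() {
    dir=$1
    max=$(ls "$dir" | sort -n | tail -n 1)
    # The staged key (0) is promoted to primary under the next free index.
    mv "$dir/0" "$dir/$((max + 1))"
    # A fresh staged key takes its place.
    : > "$dir/0"
}

demo=$(mktemp -d)
: > "$demo/0"
: > "$demo/1"
ls "$demo" | sort -n | tr '\n' ' '; echo    # listing before rotation
rotate_key_indices "$demo"
ls "$demo" | sort -n | tr '\n' ' '; echo    # listing after rotation
rm -rf "$demo"
```

Because the previous primary key stays in the directory as a secondary key, tokens issued before the rotation can still be validated.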

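Managing Project Organization

- Domains:

- A new feature of Keystone v3 (Identity Service v3)

- A domain contains users, groups, and projects

- RHOSP installs the Default domain initially

$ openstack domain create <domain> # Create an additional domain

$ openstack domain list # List the existing domains

- Users:

- Administrators should reserve the Default domain for service accounts

- Administrators can create new domains and add users to them

$ openstack user create <user> --domain <domain> --password-prompt

Note: this adds a new user to the specified domain; without the --domain option, the Default domain is used. Passing the password on the command line is a security risk, so enter it interactively instead.

- Groups:

- Keystone v3 also introduces the concept of groups

- Groups make it easier to manage user access to resources in bulk

- An administrator can perform a single operation on a group and have it apply to every member of the group

$ openstack group create <group> --domain <domain> # Create a group in the specified domain

$ openstack group add user --group-domain <group_domain> --user-domain <user_domain> <group> <user>

# Add a user from one domain to a group in a domain

- Projects:

- Since Keystone v3, users are no longer contained in projects but in domains, so a user can belong to several projects

$ openstack project create <project> --domain <domain> # Create a project in the specified domain

- Role management:

- The Identity service uses role-based access control (RBAC) to authenticate and authorize user access to OpenStack

- Administrators can assign roles to users and groups to grant access to domains and projects

$ openstack role add --domain Example --user admin admin

# Grant the admin user in the Default domain the admin role on the Example domain

$ openstack role add --project research --project-domain Example --group developers --group-domain Lab _member_

# Grant the developers group in the Lab domain the _member_ role on the research project in the Example domain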

- Users are stored in IdM

[root@foundation0 ~]# ssh utility

[student@utility ~]$ kinit admin

Password for admin@LAB.EXAMPLE.NET: RedHat123^

[student@utility ~]$ ipa user-find | grep User

User login: admin

User login: architect1

User login: architect2

User login: architect3

...output omitted...

[student@utility ~]$ ipa group-find | grep Group

Group name: admins

Group name: consulting-admins

Group name: consulting-members

Group name: consulting-swiftoperators

Group name: editors

...output omitted...

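Exercise: Configure users and assign roles

Create the OpenStack domain 210Demo, containing the Engineering and Production projects.

- In the 210Demo domain, create the OpenStack group Devops, which must contain the following users:

- a. Robert is a user and administrator of the Engineering project; email address: Robert@lab.example.com.

- b. George is a user of the Engineering project; email address: George@lab.example.com.

- c. William is a user and administrator of the Production project; email address: William@lab.example.com.

- d. John is a user of the Production project; email address: John@lab.example.com.

The password for all user accounts is redhat.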

- Log in

ssh stack@director

(undercloud) [stack@director ~]$ source ~/overcloudrc

- Create the domain

(overcloud) [stack@director ~]$ openstack domain create 210Demo

- Create the projects

(overcloud) [stack@director ~]$ openstack project create Engineering --domain 210Demo

(overcloud) [stack@director ~]$ openstack project create Production --domain 210Demo

(overcloud) [stack@director ~]$ openstack project list --domain 210Demo

- Create the group

(overcloud) [stack@director ~]$ openstack group create Devops --domain 210Demo

- Create the users

(overcloud) [stack@director ~]$ openstack user create Robert --domain 210Demo --password redhat --project Engineering --email robert@lab.example.com

(overcloud) [stack@director ~]$ openstack user create George --domain 210Demo --password redhat --project Engineering --email george@lab.example.com

(overcloud) [stack@director ~]$ openstack user create William --domain 210Demo --password redhat --project Production --email william@lab.example.com

(overcloud) [stack@director ~]$ openstack user create John --domain 210Demo --password redhat --project Production --email john@lab.example.com

- Assign roles to the users

(overcloud) [stack@director ~]$ openstack role add --user Robert --user-domain 210Demo --project Engineering --project-domain 210Demo admin

(overcloud) [stack@director ~]$ openstack role add --user Robert --user-domain 210Demo --project Engineering --project-domain 210Demo _member_

(overcloud) [stack@director ~]$ openstack role add --user George --user-domain 210Demo --project Engineering --project-domain 210Demo _member_

(overcloud) [stack@director ~]$ openstack role add --user William --user-domain 210Demo --project Production --project-domain 210Demo admin

(overcloud) [stack@director ~]$ openstack role add --user William --user-domain 210Demo --project Production --project-domain 210Demo _member_

(overcloud) [stack@director ~]$ openstack role add --user John --user-domain 210Demo --project Production --project-domain 210Demo _member_

# Verify

(overcloud) [stack@director ~]$ openstack role assignment list --user Robert --user-domain 210Demo --project Engineering --project-domain 210Demo --names

(overcloud) [stack@director ~]$ openstack role assignment list --user George --user-domain 210Demo --project Engineering --project-domain 210Demo --names

(overcloud) [stack@director ~]$ openstack role assignment list --user William --user-domain 210Demo --project Production --project-domain 210Demo --names

(overcloud) [stack@director ~]$ openstack role assignment list --user John --user-domain 210Demo --project Production --project-domain 210Demo --names
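The per-user role assignments above all follow one pattern, so they can be generated from a small table. This is a sketch, not part of the lab: `role_add_cmds` is a hypothetical helper, it assumes users and projects live in the same domain, and it only prints the `openstack role add` commands so they can be reviewed first.

```shell
#!/bin/sh
# Hypothetical helper: read "user project role..." lines on stdin and print
# one "openstack role add" command per role. Nothing is executed.
role_add_cmds() {
    domain=$1
    while read -r user project roles; do
        # Skip blank lines.
        [ -n "$user" ] || continue
        for role in $roles; do
            printf 'openstack role add --user %s --user-domain %s --project %s --project-domain %s %s\n' \
                "$user" "$domain" "$project" "$domain" "$role"
        done
    done
}

# The four users of the exercise and their roles.
role_add_cmds 210Demo <<'EOF'
Robert Engineering admin _member_
George Engineering _member_
William Production admin _member_
John Production _member_
EOF
```

Keeping the assignments in one table makes it easier to compare them against the exercise requirements than reading six separate commands.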

- Add the users to the group

(overcloud) [stack@director ~]$ openstack group add user --group-domain 210Demo --user-domain 210Demo Devops Robert

(overcloud) [stack@director ~]$ openstack group add user --group-domain 210Demo --user-domain 210Demo Devops George

(overcloud) [stack@director ~]$ openstack group add user --group-domain 210Demo --user-domain 210Demo Devops William

(overcloud) [stack@director ~]$ openstack group add user --group-domain 210Demo --user-domain 210Demo Devops John

- Assign roles to the group

(overcloud) [stack@director ~]$ openstack role add --group Devops --group-domain 210Demo --project Engineering --project-domain 210Demo _member_

(overcloud) [stack@director ~]$ openstack role add --group Devops --group-domain 210Demo --project Engineering --project-domain 210Demo admin

(overcloud) [stack@director ~]$ openstack role add --group Devops --group-domain 210Demo --project Production --project-domain 210Demo _member_

(overcloud) [stack@director ~]$ openstack role add --group Devops --group-domain 210Demo --project Production --project-domain 210Demo admin

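Image Management

Comparing Image Formats

- raw

raw is the simplest format: it contains nothing but the data, hence the name. It has no header at all; it is just a file that the virtual machine reads and writes directly. raw does not support growing dynamically, so the size must be specified up front, which in principle costs a lot of disk space. On file systems that support sparse files (such as ext4), however, this matters little: files created on ext4 are sparse by default, so no extra work is needed.

du -sh <file name>

du shows the space a file actually occupies. In other words, regardless of how much disk space there is, creating such an image causes no problem.

raw gives the best I/O performance of all virtual machine image formats; other formats are benchmarked against raw, and the closer a format comes to raw, the better. However, raw has no other features. With sparse files available, formats such as qcow that allocate space at run time lose their advantage.

- qcow

qcow adds dynamic file growth on top of cow and supports encryption and compression. qcow manages space allocation for the whole image through a two-level index table; the second-level index uses an in-memory cache and requires lookups, which costs performance. qcow is now essentially unused: its optimizations and features lag behind qcow2, and its read/write performance is worse than that of cow and raw.

- qcow2

qcow2 is an all-in-one image format that supports internal snapshots, encryption, compression, and more, and its access performance keeps improving. Its drawback is bloat: it bundles every feature into one format.

- Image file management

# Create images

[root@compute0 ~]# qemu-img create -f qcow2 disk01.qcow2 10G

[root@compute0 ~]# qemu-img create -f raw disk02.raw 10G

# View image information

[root@compute0 ~]# qemu-img info disk01.qcow2

image: disk01.qcow2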

file format: qcow2

virtual size: 10G (10737418240 bytes)

disk size: 196K

cluster_size: 65536

Format specific information:

compat: 1.1

lazy refcounts: false

refcount bits: 16

corrupt: false

[root@compute0 ~]# qemu-img info disk02.raw

image: disk02.raw

file format: raw

virtual size: 10G (10737418240 bytes)

disk size: 0

[root@compute0 ~]# ll -h

-rw-r--r--. 1 root root 193K Jun 23 06:15 disk01.qcow2

-rw-r--r--. 1 root root 10G Jun 23 06:17 disk02.raw

# Convert between qcow2 and raw

[root@compute0 ~]# qemu-img convert -f qcow2 -O raw disk01.qcow2 disk01.raw

-rw-r--r--. 1 root root 10G Jun 23 06:22 disk01.raw
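The listings above show a 10G raw image with a disk size of 0, which is the sparse-file behaviour described for the raw format. The sketch below reproduces the effect without qemu-img. It assumes a file system that supports sparse files; the helper names are hypothetical, and the 10 MiB size is chosen only to keep the demo small.

```shell
#!/bin/sh
# Create a sparse file of the given size in bytes without writing any data:
# dd seeks to the end offset and copies zero blocks (count=0).
make_sparse() {
    dd if=/dev/zero of="$1" bs=1 count=0 seek="$2" 2>/dev/null
}

# Apparent size in bytes (what ls -l reports).
apparent_bytes() {
    wc -c < "$1" | tr -d ' '
}

# Space actually allocated on disk, in KiB (what du reports).
allocated_kib() {
    du -k "$1" | awk '{ print $1 }'
}

f=$(mktemp)
make_sparse "$f" 10485760          # 10 MiB apparent size
echo "apparent: $(apparent_bytes "$f") bytes"
echo "allocated: $(allocated_kib "$f") KiB"
rm -f "$f"
```

The gap between the two numbers is the same gap that `ll -h` and the "disk size" field of `qemu-img info` show for disk02.raw.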

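Modifying Guests and Disk Images

Exercise: Customize an image with guestfish

- Set up the environment

[student@workstation ~]$ lab customization-img-customizing setup

- Download the image

[student@workstation ~]$ wget http://materials.example.com/osp-small.qcow2 -O ~/finance-rhel-db.qcow2

- Edit the image

# Options: -i inspects the image and mounts its file systems automatically; --network enables networking; -a adds a disk image

[student@workstation ~]$ guestfish -i --network -a ~/finance-rhel-db.qcow2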

><fs> command "yum -y install httpd"

><fs> command "systemctl enable httpd"

><fs> command "systemctl start httpd"

><fs> touch /var/www/html/index.html

><fs> vi /var/www/html/index.html

...

><fs> command "useradd test"

><fs> selinux-relabel /etc/selinux/targeted/contexts/files/file_contexts /

><fs> exit

[student@workstation ~]$ source developer1-finance-rc

# Upload the image

[student@workstation ~(developer1-finance)]$ openstack image create --disk-format qcow2 --min-disk 10 --min-ram 2048 --file ~/finance-rhel-db.qcow2 finance-rhel-db

- Launch an instance

# Launch the instance

[student@workstation ~(developer1-finance)]$ openstack server create --flavor default --key-name example-keypair --nic net-id=finance-network1 --security-group finance-db --image finance-rhel-db --wait finance-db1

# Associate a floating IP

[student@workstation ~(developer1-finance)]$ openstack floating ip list

[student@workstation ~(developer1-finance)]$ openstack server add floating ip finance-db1 172.25.250.110

# Log in to the instance

[student@workstation ~(developer1-finance)]$ ssh cloud-user@172.25.250.110

Warning: Permanently added '172.25.250.110' (ECDSA) to the list of known hosts.

[cloud-user@finance-db1 ~]$

# Allow port 80 in the security group rules

[student@workstation ~(developer1-finance)]$ curl 172.25.250.110

- Clean up the environment

[student@workstation ~]$ lab customization-img-customizing cleanup

Initializing Instances During Deployment

Customizing instances with cloud-init

- Set up the environment

[student@workstation ~]$ lab customization-img-cloudinit setup

- Download the image

[student@workstation ~]$ wget http://materials.example.com/osp-small.qcow2 -O ~/cloud-init

- Upload the image

[student@workstation ~]$ source developer1-finance-rc

# Upload the image

[student@workstation ~(developer1-finance)]$ openstack image create --disk-format qcow2 --min-disk 10 --min-ram 2048 --file cloud-init cloud-init

# Launch the instance with a user-data script

[student@workstation ~(developer1-finance)]$ vim user-data

#!/bin/bash

yum -y install httpd

systemctl enable httpd

systemctl start httpd

echo hello yutianedu > /var/www/html/index.html

[student@workstation ~(developer1-finance)]$ openstack server create --flavor default --key-name example-keypair --nic net-id=finance-network1 --security-group default --image cloud-init --user-data ~/user-data --wait cloud-init

# Associate a floating IP

[student@workstation ~(developer1-finance)]$ openstack floating ip list

[student@workstation ~(developer1-finance)]$ openstack server add floating ip cloud-init 172.25.250.110

# Log in to the instance

[student@workstation ~(developer1-finance)]$ ssh cloud-user@172.25.250.110

[cloud-user@cloud-init ~]$ curl 127.0.0.1

# Allow port 80 in the security group rules

[student@workstation ~(developer1-finance)]$ curl 172.25.250.110

- Clean up the environment

[student@workstation ~]$ lab customization-img-cloudinit cleanup

Storage Management

Implementing Block Storage

- View the OSD information on the Ceph node

[root@ceph0 ~]# docker ps | grep osd

6b78187574a9 172.25.249.200:8787/rhceph/rhceph-3-rhel7:latest "/entrypoint.sh" 3 days ago Up 3 days ceph-osd-ceph0-vdc

de28874916e2 172.25.249.200:8787/rhceph/rhceph-3-rhel7:latest "/entrypoint.sh" 3 days ago Up 3 days ceph-osd-ceph0-vdb

9b3a4fdea982 172.25.249.200:8787/rhceph/rhceph-3-rhel7:latest "/entrypoint.sh" 3 days ago Up 3 days ceph-osd-ceph0-vdd

[root@ceph0 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sr0 11:0 1 738K 0 rom

vda 252:0 0 40G 0 disk

├─vda1 252:1 0 1M 0 part

└─vda2 252:2 0 40G 0 part /

vdb 252:16 0 20G 0 disk

├─vdb1 252:17 0 19G 0 part

└─vdb2 252:18 0 1G 0 part # journal partition, used as a cache

vdc 252:32 0 20G 0 disk

├─vdc1 252:33 0 19G 0 part

└─vdc2 252:34 0 1G 0 part # journal partition, used as a cache

vdd 252:48 0 20G 0 disk

├─vdd1 252:49 0 19G 0 part

└─vdd2 252:50 0 1G 0 part # journal partition, used as a cache

- View the Ceph roles running on the controller node

[root@controller0 ~]# docker ps | grep ceph

64939292eaef 172.25.249.200:8787/rhceph/rhceph-3-rhel7:latest "/entrypoint.sh" 3 days ago Up 3 days ceph-mds-controller0

c246986662f8 172.25.249.200:8787/rhceph/rhceph-3-rhel7:latest "/entrypoint.sh" 3 days ago Up 3 days ceph-mgr-controller0

5f5683c24ed9 172.25.249.200:8787/rhceph/rhceph-3-rhel7:latest "/entrypoint.sh" 3 days ago Up 3 days ceph-mon-controller0

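- View the storage pools

[root@controller0 ~]# ceph osd pool ls

images # storage for Glance

metrics # used by Ceilometer

backups # used by Cinder backup, volume backups

vms # used by Nova, ephemeral storage for instances

volumes # block storage for Cinder

manila_data # shared file systems

manila_metadata

- View the images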

[root@controller0 ~]# rbd -p images ls

14b7e8b2-7c6d-4bcf-b159-1e4e7582107c

5f7f8208-33b5-4f17-8297-588f938182c0

60422c8d-32d3-43ae-aa7d-ef9a39394045

6b0128a9-4481-4ceb-b34e-ffe92e0dcfdd

a54fdda9-ee60-4bbf-b14e-3f0f871003ec

ec9473de-4048-4ebb-b08a-a9be619477ac

[student@workstation ~(developer1-finance)]$ openstack image list

+--------------------------------------+-----------------+--------+

| ID | Name | Status |

+--------------------------------------+-----------------+--------+

| a54fdda9-ee60-4bbf-b14e-3f0f871003ec | cloud-init | active |

| ec9473de-4048-4ebb-b08a-a9be619477ac | octavia-amphora | active |

| 6b0128a9-4481-4ceb-b34e-ffe92e0dcfdd | rhel7 | active |

| 5f7f8208-33b5-4f17-8297-588f938182c0 | rhel7-db | active |

| 14b7e8b2-7c6d-4bcf-b159-1e4e7582107c | rhel7-web | active |

| 60422c8d-32d3-43ae-aa7d-ef9a39394045 | web-image | active |

+--------------------------------------+-----------------+--------+

- View the instances

[root@controller0 ~]# rbd -p vms ls

8c933b4f-7a61-4f51-8957-ed035bb7682f_disk

[student@workstation ~(developer1-finance)]$ openstack server list

+--------------------------------------+------------+--------+-----------------------------------------------+------------+---------+

| ID | Name | Status | Networks | Image | Flavor |

+--------------------------------------+------------+--------+-----------------------------------------------+------------+---------+

| 8c933b4f-7a61-4f51-8957-ed035bb7682f | cloud-init | ACTIVE | finance-network1=192.168.1.12, 172.25.250.110 | cloud-init | default |

+--------------------------------------+------------+--------+-----------------------------------------------+------------+---------+

- List objects with the rados command

[root@controller0 ~]# rados -p vms ls | head

rbd_data.16bfc6b8b4567.0000000000000014

rbd_data.16bfc6b8b4567.0000000000000429

rbd_data.16bfc6b8b4567.0000000000000037

rbd_data.16bfc6b8b4567.0000000000000010

rbd_data.16bfc6b8b4567.000000000000023a

rbd_data.16bfc6b8b4567.0000000000000628

rbd_data.16bfc6b8b4567.0000000000000051

rbd_data.16bfc6b8b4567.0000000000000615

rbd_data.16bfc6b8b4567.0000000000000042

rbd_data.16bfc6b8b4567.0000000000000245

...output omitted...

- All services access Ceph storage as the openstack user; view that user's capabilities

[root@controller0 ~]# ceph auth get client.openstack

[client.openstack]

key = AQAPHM9bAAAAABAA8DLv19H7QXzX0CnaTql/1w==

caps mds = ""

caps mgr = "allow *"

caps mon = "profile rbd"

caps osd = "profile rbd pool=volumes, profile rbd pool=backups, profile rbd pool=vms, profile rbd pool=images, profile rbd pool=metrics"

exported keyring for client.openstack
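The osd capability string determines which pools the openstack user may touch. The sketch below pulls the pool names out of such a string so they can be compared with the output of `ceph osd pool ls`. The helper name `caps_pools` is hypothetical, and the parsing assumes the simple "profile rbd pool=NAME" layout shown above.

```shell
#!/bin/sh
# Hypothetical helper: list the pool names found in an "osd" capability
# string such as the one printed by "ceph auth get".
caps_pools() {
    # Break the string at commas and spaces, then keep the pool= tokens.
    printf '%s\n' "$1" | tr ', ' '\n\n' | sed -n 's/^pool=//p'
}

caps='profile rbd pool=volumes, profile rbd pool=backups, profile rbd pool=vms, profile rbd pool=images, profile rbd pool=metrics'
caps_pools "$caps"
```

The manila_data and manila_metadata pools are absent from this list, which fits the Manila configuration later in this manual, where a separate manila identity is used for CephFS.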

- View the Cinder-to-Ceph configuration on the controller node

[root@controller0 ~]# vim /var/lib/config-data/puppet-generated/cinder/etc/cinder/cinder.conf

[tripleo_ceph]

backend_host=hostgroup

volume_backend_name=tripleo_ceph

volume_driver=cinder.volume.drivers.rbd.RBDDriver

rbd_ceph_conf=/etc/ceph/ceph.conf

rbd_user=openstack

rbd_pool=volumes

- View the Glance-to-Ceph configuration on the controller node

[root@controller0 ~]# vim /var/lib/config-data/puppet-generated/glance_api/etc/glance/glance-api.conf

[glance_store]

stores=http,rbd

default_store=rbd

rbd_store_pool=images

rbd_store_user=openstack

rbd_store_ceph_conf=/etc/ceph/ceph.conf

os_region_name=regionOne

- View the Nova-to-Ceph configuration on a compute node

[root@compute0 ~]# vim /var/lib/config-data/puppet-generated/nova_libvirt/etc/nova/nova.conf

[libvirt]

live_migration_uri=qemu+ssh://nova_migration@%s:2022/system?keyfile=/etc/nova/migration/identity

rbd_user=openstack

rbd_secret_uuid=fe8e3db0-d6c3-11e8-a76d-52540001fac8

images_type=rbd

images_rbd_pool=vms

images_rbd_ceph_conf=/etc/ceph/ceph.conf

virt_type=kvm

cpu_mode=none

inject_password=False

inject_key=False

inject_partition=-2

hw_disk_discard=unmap

enabled_perf_events=

disk_cachemodes=network=writeback

# libvirt needs a secret to connect to Ceph

[root@compute0 ~]# virsh secret-list

UUID Usage

-------------------------------------------------------------------------------

fe8e3db0-d6c3-11e8-a76d-52540001fac8 ceph client.openstack secret

Exercise: Manage Cinder block storage

Set up the environment

[student@workstation ~]$ lab storage-backend setup

- Check the Ceph cluster status

[student@workstation ~]$ ssh root@controller0

[root@controller0 ~]# docker ps | grep ceph

64939292eaef 172.25.249.200:8787/rhceph/rhceph-3-rhel7:latest "/entrypoint.sh" 6 days ago Up 6 days ceph-mds-controller0

c246986662f8 172.25.249.200:8787/rhceph/rhceph-3-rhel7:latest "/entrypoint.sh" 6 days ago Up 6 days ceph-mgr-controller0

5f5683c24ed9 172.25.249.200:8787/rhceph/rhceph-3-rhel7:latest "/entrypoint.sh" 6 days ago Up 6 days ceph-mon-controller0

[root@controller0 ~]# ceph -s

[root@controller0 ~]# docker exec -it glance_api grep -iE 'rbd|ceph' /etc/glance/glance-api.conf | grep -v ^# | grep -v ^$

stores=http,rbd

default_store=rbd

rbd_store_pool=images

rbd_store_user=openstack

rbd_store_ceph_conf=/etc/ceph/ceph.conf

[root@controller0 ~]# docker exec -it cinder_api grep -Ei 'rbd|ceph' /etc/cinder/cinder.conf | grep -v ^#

enabled_backends=tripleo_ceph

[tripleo_ceph]

volume_backend_name=tripleo_ceph

volume_driver=cinder.volume.drivers.rbd.RBDDriver

rbd_ceph_conf=/etc/ceph/ceph.conf

rbd_user=openstack

rbd_pool=volumes

[root@controller0 ~]# ceph osd pool ls

images

metrics

backups

vms

volumes

manila_data

manila_metadata

[root@controller0 ~]# exit

logout

- Upload an image to Ceph storage

[student@workstation ~]$ source developer1-finance-rc

[student@workstation ~(developer1-finance)]$ wget http://materials.example.com/osp-small.qcow2

[student@workstation ~(developer1-finance)]$ openstack image create --disk-format qcow2 --file ~/osp-small.qcow2 finance-image

[student@workstation ~(developer1-finance)]$ openstack image list

[root@controller0 ~]# rados -p images ls | grep 5ab04c59-d77d-4aa0-89b4-110c740f0e34

- Create an instance and a volume, and attach the volume to the instance

[student@workstation ~(developer1-finance)]$ openstack server create --flavor default --image finance-image --nic net-id=finance-network1 --security-group default finance-server1

[student@workstation ~(developer1-finance)]$ openstack server list

[student@workstation ~(developer1-finance)]$ openstack volume create --size 1 finance-volume1

[student@workstation ~(developer1-finance)]$ openstack volume list

[student@workstation ~(developer1-finance)]$ openstack server add volume finance-server1 finance-volume1

- View the block storage

[student@workstation ~(developer1-finance)]$ openstack volume list

[root@controller0 ~]# rados -p volumes ls

rbd_directory

rbd_info

rbd_id.volume-5b663dd9-4bb8-43b5-ab67-663ee9b9b31d

rbd_object_map.238f371f6b82b

rbd_header.238f371f6b82b

- Clean up the environment

[student@workstation ~]$ lab storage-backend cleanup

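Comparing Block Storage

The swift command:

The swift post, swift list, and swift stat commands from older OpenStack releases are still supported. In newer OpenStack releases, use the unified openstack command-line tool instead.

$ openstack container create <container_name> # Create a container

$ openstack container list # List all available containers

$ openstack container delete <container_name> # Delete the specified container

$ openstack container save <container_name> # Download the contents of the specified container to the local system

$ openstack object create <container_name> <object_name> # Upload an existing object to the specified container

$ openstack object list <container_name> # List all objects in the specified container

$ openstack object delete <container_name> <object_name> # Delete an object from the specified container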

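Manila Shared File Systems

- Create a share type as the administrator

$ manila type-create cephfstype false

Note:

false is the value of the driver_handles_share_servers parameter; set it to true when share servers are to be scaled dynamically.

- Create a share as a tenant user

$ manila create --name demo-share --share-type cephfstype cephfs 1

- Notes:

The --share-type option classifies shares by storage back end; this share is 1 GB in size.

When the share is available, launch an instance that is connected separately to the tenant network and to the storage network (the two networks must be isolated from each other).

On a Ceph Monitor node, authenticate as the client.manila user to create a cephx user, and save that user's secret key.

- Find the share's export path

$ manila share-export-location-list demo-share --columns Path

- Exercise: Manage a Manila shared file system

Set up the environment

[student@workstation ~]$ lab storage-manila setup

- Log in to the controller node and confirm that the Manila services are running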

[student@workstation ~]$ ssh root@controller0

[root@controller0 ~]# docker ps | grep manila

9d8ff8477178 172.25.249.200:8787/rhosp13/openstack-manila-share:pcmklatest "/bin/bash /usr/lo..." 5 days ago Up 5 openstack-manila-share-docker-0

f47af1e5d0c0 172.25.249.200:8787/rhosp13/openstack-manila-api:latest "kolla_start" 19 months ago Up 6 days manila_api

d601bacac004 172.25.249.200:8787/rhosp13/openstack-manila-scheduler:latest "kolla_start" 19 months ago Up 6days (healthy) manila_scheduler

- View the Manila-to-Ceph configuration

[root@controller0 manila]# cat /var/lib/config-data/puppet-generated/manila/etc/manila/manila.conf

enabled_share_backends=cephfs

enabled_share_protocols=CEPHFS

[cephfs]

driver_handles_share_servers=False

share_backend_name=cephfs

share_driver=manila.share.drivers.cephfs.driver.CephFSDriver

cephfs_conf_path=/etc/ceph/ceph.conf

cephfs_auth_id=manila

cephfs_cluster_name=ceph

cephfs_enable_snapshots=False

cephfs_protocol_helper_type=CEPHFS

[root@controller0 manila]# exit

- Create a Manila share and launch an instance

[student@workstation ~]$ source ~/architect1-finance-rc

[student@workstation ~(architect1-finance)]$ manila service-list

+----+------------------+------------------+------+---------+-------+-----------------------------+

| Id | Binary | Host | Zone | Status | State | Updated_at |

+----+------------------+------------------+------+---------+-------+-----------------------------+

| 1 | manila-scheduler | hostgroup | nova | enabled | up | 2020-06-11T15:41:11.000000 |

| 2 | manila-share | hostgroup@cephfs | nova | enabled | up | 2020-06-11T15:41:07.000000 |

+----+------------------+------------------+------+---------+-------+-----------------------------+

[student@workstation ~(architect1-finance)]$ manila type-create cephfstype false

[student@workstation ~(architect1-finance)]$ source developer1-finance-rc

[student@workstation ~(developer1-finance)]$ manila create --name finance-share1 --share-type cephfstype cephfs 1

[student@workstation ~(developer1-finance)]$ manila list

[student@workstation ~(developer1-finance)]$ openstack server create --flavor default --image rhel7 --key-name example-keypair --nic net-id=finance-network1 --nic net-id=provider-storage --user-data /home/student/manila/user-data.file finance-server1 --wait

# The second interface is configured through cloud-init

[student@workstation ~(architect1-finance)]$ cat manila/user-data.file

#!/bin/bash

cat > /etc/sysconfig/network-scripts/ifcfg-eth1 << eof

DEVICE=eth1

ONBOOT=yes

BOOTPROTO=dhcp

eof

ifup eth1

# Associate a floating IP with the instance

[student@workstation ~(developer1-finance)]$ openstack floating ip list

- As the manila user, create the cloud-user user in Ceph for accessing Ceph storage

[root@foundation0 ~]# ssh root@controller0

[root@controller0 ~]# ceph auth get-or-create client.cloud-user --name=client.manila --keyring=/etc/ceph/ceph.client.manila.keyring > /root/cloud-user.keyring

- Copy the Ceph configuration file and the cloud-user keyring to the instance

[root@controller0 ~]# scp cloud-user.keyring /etc/ceph/ceph.conf student@workstation:~

[root@controller0 ~]# exit

[student@workstation ~(developer1-finance)]$ scp ceph.conf cloud-user.keyring cloud-user@172.25.250.104:~

- Allow cloud-user to access the Manila share

[student@workstation ~(developer1-finance)]$ manila access-allow finance-share1 cephx cloud-user

- View the export location of the Manila share

[student@workstation ~(developer1-finance)]$ manila share-export-location-list finance-share1

+--------------------------------------+------------------------------------------------------------------------+-----------+

| ID | Path | Preferred |

+--------------------------------------+------------------------------------------------------------------------+-----------+

| 56e9acde-8203-49c4-ade7-87dfdac6afb6 | 172.24.3.1:6789:/volumes/_nogroup/4e88a689-8f43-452f-a08c-87d35b34e6c7 | False |

+--------------------------------------+------------------------------------------------------------------------+-----------+
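The Path column combines the Ceph monitor address with the directory that backs the share. The sketch below splits the two parts with POSIX parameter expansion. The helper names are hypothetical, and the parsing assumes the "<monitors>:<path>" form shown in the table, with the path starting at the first ":/".

```shell
#!/bin/sh
# Split a CephFS export location of the form "<monitors>:<path>", where the
# path starts with "/". Hypothetical helper names.
export_monitors() {
    # Drop everything from the first ":/" onward.
    printf '%s\n' "${1%%:/*}"
}

export_mountpoint() {
    # Drop everything up to the first ":/" and restore the leading slash.
    printf '/%s\n' "${1#*:/}"
}

loc='172.24.3.1:6789:/volumes/_nogroup/4e88a689-8f43-452f-a08c-87d35b34e6c7'
echo "monitors:   $(export_monitors "$loc")"
echo "mountpoint: $(export_mountpoint "$loc")"
```

The mountpoint part is the value that the ceph-fuse command in this exercise takes in its --client-mountpoint option.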

- Log in to the instance, install the client, and mount the Manila share

[student@workstation ~(developer1-finance)]$ ssh cloud-user@172.25.250.104

[cloud-user@finance-server1 ~]$ su -

Password: redhat

[root@finance-server1 ~]# mkdir /mnt/ceph

[root@finance-server1 ~]# curl -s -f -o /etc/yum.repos.d/ceph.repo http://materials.example.com/ceph.repo

[root@finance-server1 ~]# yum -y install ceph-fuse

[root@finance-server1 ~]# ceph-fuse /mnt/ceph/ --id=cloud-user --conf=/home/cloud-user/ceph.conf --keyring=/home/cloud-user/cloud-user.keyring --client-mountpoint=/volumes/_nogroup/4e88a689-8f43-452f-a08c-87d35b34e6c7

[root@finance-server1 ~]# df -h /mnt/ceph

Filesystem Size Used Avail Use% Mounted on

ceph-fuse 1.0G 0 1.0G 0% /mnt/ceph

[root@finance-server1 ~]# echo hello > /mnt/ceph/hello.txt

- Clean up the environment

[student@workstation ~(developer1-finance)]$ openstack server delete finance-server1

[student@workstation ~(developer1-finance)]$ manila delete finance-share1

[student@workstation ~(developer1-finance)]$ source architect1-finance-rc

[student@workstation ~(architect1-finance)]$ manila type-delete cephfstype

[student@workstation ~(architect1-finance)]$ lab storage-manila cleanup

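Managing Ephemeral Storage

Differences Between Ephemeral and Persistent Storage

- When a tenant user creates an instance from an image, the only initial storage that instance has is ephemeral storage (the root disk)

- Ephemeral storage is a simple, easy-to-use storage resource, but it lacks advanced features such as storage tiering and disk capacity expansion

- Any data stored in ephemeral storage is lost once the instance is terminated

- The lifecycle of ephemeral storage is managed by the OpenStack Nova compute service

- Persistent storage continues to exist after the instance it is attached to is terminated

- Persistent storage is managed by OpenStack Cinder, Swift, and Manila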

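Ephemeral storage and libvirt:

- The Nova compute service uses libvirt as its native virtualization platform, with KVM as the hypervisor

- Instances exist as KVM virtual machines, and the libvirtd service performs operations on them according to user requests

- The flavor determines the size of each instance

- Nova allows instances to consume compute and storage resources from the hypervisor system

- Flavor attributes include the number of vCPUs, the amount of memory, the amount of swap, the size of the instance's root disk, and the size of any additional ephemeral disk

- Storage units created as part of a flavor definition are all ephemeral by nature

- By default, the compute service creates the files that back ephemeral storage in the /var/lib/nova/instances/UUID/ directory on the compute node

- If Ceph is used as the Nova storage back end, the backing objects are stored in a pool that is usually named vms

- Note that using Ceph as the back end for the OpenStack compute service does not turn ephemeral storage into persistent storage!

- Ephemeral storage still disappears when the instance is deleted, whether it resides locally on the compute node or on an external back end such as Ceph

- Creating persistent root disks:

- The contents of an instance image can be extracted to a persistent volume

- Any changes an instance makes to the root file system persist until the persistent volume is deleted

$ openstack volume create --size <volume_size> --image <image_name> <volume_name>

Example: $ openstack volume create --size 10 --image rhel7 demo-vol

- This creates a 10 GB volume named demo-vol and writes the root file system of the rhel7 image into it. When creating an instance with openstack server create, use the --volume option to boot the instance from that volume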

Exercise: Managing Ephemeral Storage

Prepare the environment

[student@workstation ~]$ lab storage-compare setup

- Create an instance

[student@workstation ~]$ source architect1-finance-rc

[student@workstation ~(architect1-finance)]$ openstack server create --flavor default --image rhel7 --nic net-id=finance-network1 --availability-zone nova:compute1.overcloud.example.com finance-server1

[student@workstation ~(architect1-finance)]$ openstack server list

[root@foundation0 ~]# ssh root@controller0

Last login: Sat Jun 13 09:44:27 2020 from 172.25.250.250

[root@controller0 ~]# rados -p vms ls | grep 83c36a8f-373f-41fc-8d43-c970ce642856

rbd_id.83c36a8f-373f-41fc-8d43-c970ce642856_disk

- After the instance is deleted, its ephemeral storage is deleted as well

[student@workstation ~(architect1-finance)]$ openstack server delete finance-server1

[student@workstation ~(architect1-finance)]$ exit

logout

Connection to workstation closed.

[root@foundation0 ~]# ssh root@controller0

Last login: Sat Jun 13 09:45:12 2020 from 172.25.250.250

[root@controller0 ~]# rados -p vms ls | grep 83c36a8f-373f-41fc-8d43-c970ce642856

- Configure ephemeral storage to use local disk space on the compute node

[student@workstation ~]$ ssh root@compute1

[root@compute1 ~]# vim /var/lib/config-data/puppet-generated/nova_libvirt/etc/nova/nova.conf

[libvirt]

#images_type=rbd

[root@compute1 ~]# docker restart nova_compute

[root@compute1 ~]# exit

[student@workstation ~]$ source architect1-finance-rc

[student@workstation ~(architect1-finance)]$ openstack server create --flavor default --image rhel7 --nic net-id=finance-network1 --availability-zone nova:compute1.overcloud.example.com finance-server1

[student@workstation ~(architect1-finance)]$ openstack server list

[student@workstation ~(architect1-finance)]$ ssh root@compute1

# Verify that the instance's root disk is now stored locally on the compute node

[root@compute1 ~]# ls /var/lib/nova/instances/

900d500f-0d1e-4c37-aec4-b4e822701c88 _base compute_nodes locks

[root@compute1 ~]# ls /var/lib/nova/instances/900d500f-0d1e-4c37-aec4-b4e822701c88/

console.log disk disk.info

# After the instance is deleted, its disk files are deleted as well

[student@workstation ~(architect1-finance)]$ ssh root@compute1

[root@compute1 ~]# ls /var/lib/nova/instances/

_base compute_nodes locks

- Exercise: Create persistent storage as the root disk of an instance

# Switch ephemeral storage back to the Ceph back end

[student@workstation ~]$ ssh root@compute1

[root@compute1 ~]# vim /var/lib/config-data/puppet-generated/nova_libvirt/etc/nova/nova.conf

[libvirt]

images_type=rbd

[root@compute1 ~]# docker restart nova_compute

# Import the image into a volume

[student@workstation ~(architect1-finance)]$ openstack volume create --size 10 --image rhel7 finance-vol1

[student@workstation ~(architect1-finance)]$ openstack volume list

[student@workstation ~(architect1-finance)]$ ssh root@controller0

[root@controller0 ~]# rados -p volumes ls | grep 5f53c9c2-ad60-4595-b0a1-1bfc5941e705

rbd_id.volume-5f53c9c2-ad60-4595-b0a1-1bfc5941e705

[root@controller0 ~]# exit

# Create an instance that uses the block storage volume as its system disk

[student@workstation ~(architect1-finance)]$ openstack server create --flavor default --volume finance-vol1 --key-name example-keypair --nic net-id=finance-network1 finance-server2 --wait

[student@workstation ~(architect1-finance)]$ openstack floating ip list

[student@workstation ~(architect1-finance)]$ openstack server add floating ip finance-server2 172.25.250.108

[student@workstation ~(architect1-finance)]$ ssh 172.25.250.108

[cloud-user@finance2 ~]$ sudo -i

[root@finance2 ~]# echo hello > /test

[root@finance2 ~]# sync

[root@finance2 ~]# exit

[cloud-user@finance2 ~]$ exit

# Delete the instance and verify that the volume still exists

[student@workstation ~(architect1-finance)]$ openstack server delete finance-server2

[root@controller0 ~]# rados -p volumes ls | grep 5f53c9c2-ad60-4595-b0a1-1bfc5941e705

rbd_id.volume-5f53c9c2-ad60-4595-b0a1-1bfc5941e705
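The two rados listings in these exercises suggest a naming pattern: the ephemeral disk appears in the vms pool as `rbd_id.<instance_uuid>_disk`, while the Cinder volume appears in the volumes pool as `rbd_id.volume-<volume_uuid>`. The sketch below only restates that observed pattern so the expected object name can be built before running grep; the function name is hypothetical, and the pattern is an assumption drawn from this lab's output rather than a documented interface.

```python
def rbd_id_object(kind, uuid):
    """Build the rbd_id object name as it appears in the lab's rados listings.

    kind: "instance" for a Nova ephemeral root disk (pool vms),
          "volume" for a Cinder volume (pool volumes).
    """
    if kind == "instance":
        return "rbd_id.%s_disk" % uuid
    if kind == "volume":
        return "rbd_id.volume-%s" % uuid
    raise ValueError("unknown kind: %r" % kind)


print(rbd_id_object("instance", "83c36a8f-373f-41fc-8d43-c970ce642856"))
print(rbd_id_object("volume", "5f53c9c2-ad60-4595-b0a1-1bfc5941e705"))
```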

- Boot an instance from the block storage volume

[student@workstation ~(architect1-finance)]$ openstack server create --flavor default --volume finance-vol1 --key-name example-keypair --nic net-id=finance-network1 finance-server2 --wait

[student@workstation ~(architect1-finance)]$ openstack server add floating ip finance-server2 172.25.250.108

[student@workstation ~(architect1-finance)]$ ssh 172.25.250.108

[cloud-user@finance-server2 ~]$ cat /test

hello

[cloud-user@finance2 ~]$ exit

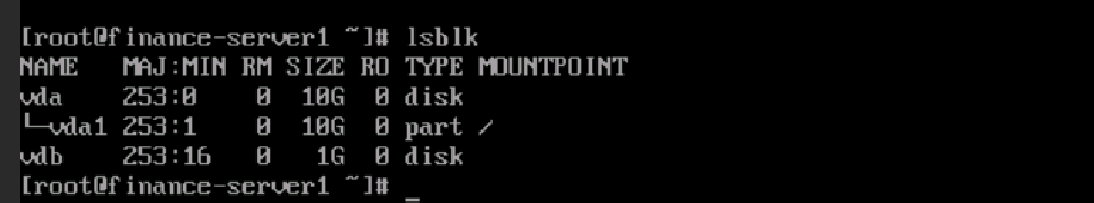

- Attach a volume to the instance

[student@workstation ~(architect1-finance)]$ openstack volume create --size 1 finance-vol2

[student@workstation ~(architect1-finance)]$ openstack server add volume finance-server2 finance-vol2

[student@workstation ~(architect1-finance)]$ ssh 172.25.250.108 lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

vda 253:0 0 10G 0 disk

└─vda1 253:1 0 10G 0 part /

vdb 253:16 0 1G 0 disk

[student@workstation ~(architect1-finance)]$ openstack volume list

- Clean up the environment

[student@workstation ~(architect1-finance)]$ openstack server delete finance-server2

[student@workstation ~(architect1-finance)]$ openstack volume delete finance-vol1 finance-vol2

[student@workstation ~(architect1-finance)]$ lab storage-compare cleanup

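Managing OpenStack Networking

Describing Network Protocol Types

- OpenStack Networking is an SDN project that provides Networking-as-a-Service (NaaS) in virtual environments

- It implements traditional networking features such as subnets, bridging, and VLANs, as well as newer technologies such as Open Virtual Network (OVN)

- OVN is the default SDN in RHOSP and was proposed by the Open vSwitch community

Introduction to Geneve:

- VLANs are created to segment network traffic; the 12-bit VLAN ID limits the number of virtual segments to roughly 4,000

- VXLAN (Virtual Extensible LAN) removes this VLAN limitation; its 24-bit network identifier allows a far larger number of virtual networks

- VXLAN does not require changes to the underlying device infrastructure, but it is not compatible with other network solutions such as STT and NVGRE

- Geneve (Generic Network Virtualization Encapsulation) addresses these limitations by supporting all the capabilities of VXLAN, NVGRE, and STT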
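The identifier sizes above translate directly into how many segments each encapsulation can address. The short sketch below does the arithmetic; the 24-bit width for the Geneve VNI and the two reserved 802.1Q VLAN IDs (0 and 4095) are standard facts added here, and the function names are illustrative only.

```python
VLAN_ID_BITS = 12  # IEEE 802.1Q VLAN ID field
VNI_BITS = 24      # VXLAN and Geneve virtual network identifier


def id_space(bits):
    """Number of distinct values an identifier of the given width can hold."""
    return 2 ** bits


def usable_vlans():
    """VLAN IDs 0 and 4095 are reserved, so two values are unavailable."""
    return id_space(VLAN_ID_BITS) - 2


print("VLAN IDs:", id_space(VLAN_ID_BITS), "usable:", usable_vlans())
print("VNIs:", id_space(VNI_BITS))
```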

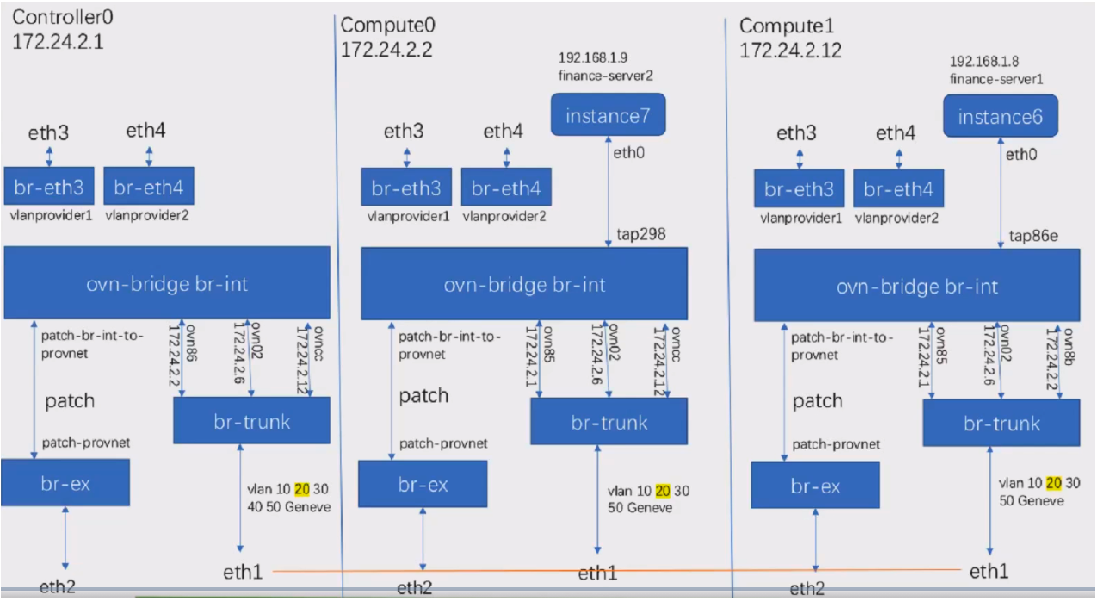

RHOSP 13 network architecture diagram

Exercise: Instance Networking

- Prepare the environment

[student@workstation ~]$ lab networking-protocols setup

- View the hypervisor nodes

[student@workstation ~(architect1-finance)]$ openstack hypervisor list

+----+-----------------------------------+-----------------+-------------+-------+

| ID | HypervisorHostname | HypervisorType | HostIP | State |

+----+-----------------------------------+-----------------+-------------+-------+

| 1 | compute1.overcloud.example.com | QEMU | 172.24.1.12 | up |

| 2 | computehci0.overcloud.example.com | QEMU | 172.24.1.6 | up |

| 3 | compute0.overcloud.example.com | QEMU | 172.24.1.2 | up |

+----+-----------------------------------+-----------------+-------------+-------+

- Create two instances

[student@workstation ~(architect1-finance)]$ openstack server create --flavor default --image rhel7 --nic net-id=finance-network1 --availability-zone nova:compute0.overcloud.example.com finance-server3

[student@workstation ~(architect1-finance)]$ openstack server create --flavor default --image rhel7 --nic net-id=finance-network1 --availability-zone nova:compute1.overcloud.example.com finance-server4

[student@workstation ~(architect1-finance)]$ openstack server list

+--------------------------------------+-----------------+--------+-------------------------------+-------+---------+

| ID | Name | Status | Networks | Image | Flavor |

+--------------------------------------+-----------------+--------+-------------------------------+-------+---------+

| 51d9116b-58b1-4d8a-9b29-0bfc5386ffce | finance-server4 | ACTIVE | finance-network1=192.168.1.12 | rhel7 | default |

| c175a41e-7434-403d-a3e5-6aa47aecab79 | finance-server3 | ACTIVE | finance-network1=192.168.1.4 | rhel7 | default |

+--------------------------------------+-----------------+--------+-------------------------------+-------+---------+

- View the libvirt configuration of the instance

[root@compute0 ~]# virsh list --all

Id Name State

----------------------------------------------------

1 instance-0000000b running

[root@compute0 ~]# virsh dumpxml instance-0000000b | grep tap

<target dev='tap6dae0329-5d'/>

- View the bridge configuration

[root@compute0 ~]# ovs-vsctl show

d2399352-a3b5-4559-9868-ec8f97fdf2f5

Bridge br-trunk

fail_mode: standalone

Port "vlan10"

tag: 10

Interface "vlan10"

type: internal

Port "vlan50"

tag: 50

Interface "vlan50"

type: internal

Port "eth1"

Interface "eth1"

Port "vlan20"

tag: 20

Interface "vlan20"

type: internal

Port "vlan30"

tag: 30

Interface "vlan30"

type: internal

Port br-trunk

Interface br-trunk

type: internal

Bridge br-int

fail_mode: secure

Port "patch-br-int-to-provnet-fc5472ee-98d9-4f6b-9bc9-544ca18aefb3"

Interface "patch-br-int-to-provnet-fc5472ee-98d9-4f6b-9bc9-544ca18aefb3"

type: patch

options: {peer="patch-provnet-fc5472ee-98d9-4f6b-9bc9-544ca18aefb3-to-br-int"}

Port "ovn-85c877-0"

Interface "ovn-85c877-0"

type: geneve

options: {csum="true", key=flow, remote_ip="172.24.2.1"}

Port "tap6dae0329-5d"

Interface "tap6dae0329-5d"

Port br-int

Interface br-int

type: internal

Port "ovn-028ad0-0"

Interface "ovn-028ad0-0"

type: geneve

options: {csum="true", key=flow, remote_ip="172.24.2.6"}

Port "ovn-cc9fe3-0"

Interface "ovn-cc9fe3-0"

type: geneve

options: {csum="true", key=flow, remote_ip="172.24.2.12"}

Port "tapb356e2b4-60"

Interface "tapb356e2b4-60"

Bridge br-ex

fail_mode: standalone

Port "eth2"

Interface "eth2"

Port br-ex

Interface br-ex

type: internal

Port "patch-provnet-fc5472ee-98d9-4f6b-9bc9-544ca18aefb3-to-br-int"

Interface "patch-provnet-fc5472ee-98d9-4f6b-9bc9-544ca18aefb3-to-br-int"

type: patch

options: {peer="patch-br-int-to-provnet-fc5472ee-98d9-4f6b-9bc9-544ca18aefb3"}

ovs_version: "2.9.0"

# List all ports on the br-int bridge

[root@compute0 ~]# ovs-vsctl list-ports br-int

ovn-028ad0-0

ovn-85c877-0

ovn-cc9fe3-0

patch-br-int-to-provnet-fc5472ee-98d9-4f6b-9bc9-544ca18aefb3

tap6dae0329-5d

tapb356e2b4-60
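The instance tap port listed above can be matched to a Neutron port by name: in this manual's output, tap6dae0329-5d corresponds to port ID 6dae0329-5d49-49b2-98b0-f164cc1fe66e (the finance-server3 port in the `ovn-nbctl show` output), which is the prefix `tap` followed by the first 11 characters of the port ID. The sketch below encodes that observed convention; treat the helper name as hypothetical and the rule as an assumption based on this output.

```python
TAP_PREFIX = "tap"
PORT_ID_CHARS = 11  # "tap" + 11 characters = 14, within the Linux interface name limit


def tap_device_name(port_id):
    """Return the tap interface name expected for a Neutron port ID."""
    return TAP_PREFIX + port_id[:PORT_ID_CHARS]


print(tap_device_name("6dae0329-5d49-49b2-98b0-f164cc1fe66e"))
```

Feeding the result to `ovs-vsctl list-ports br-int | grep` is a quick way to confirm on which compute node a given port is plugged.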

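OpenStack networking commands:

$ openstack hypervisor list: lists the hypervisors (compute nodes) in OpenStack

$ openstack network list [--internal|--external]: lists the internal or external networks in the specified project

$ openstack subnet list: lists all subnets

$ openstack port list --network <network>: lists the ports on the specified internal network

$ openstack router list: lists the OVN logical routers in the specified project

$ openstack security group list: lists the security groups in the specified project

$ openstack security group rule list --long -f [json|yaml]: shows detailed information about the security group rules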

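Introducing Open Virtual Network (OVN)

OVN architecture:

- Northbound database

- OpenStack Networking uses the OVN ML2 plug-in (a Neutron plug-in)

- The OVN ML2 plug-in runs on the controller nodes and reaches the OVN northbound database over TCP port 6641

- The OVN northbound database stores the OVN logical network configuration that it receives from the OVN ML2 plug-in

- The OVN northbound service (ovn-northd) translates the logical network configuration in the OVN northbound database into logical flows

- ovn-northd writes these logical flows to the OVN southbound database

- The ovn-northd service runs on the controller nodes

- Southbound database

- The OVN southbound database listens on TCP port 6642

- Each hypervisor has its own ovn-controller

- OVN describes the entire network with logical flows, which are distributed to the ovn-controller on each hypervisor node

- ovn-controller translates the logical flows into OpenFlow flows

View the Neutron components on the controller node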

[root@controller0 ~]# docker ps | egrep 'ovn|neutron'

3bd8879fd949 172.25.249.200:8787/rhosp13/openstack-ovn-northd:latest "/bin/bash /usr/lo..." 47 minutes ago Up 47 minutes ovn-dbs-bundle-docker-0

f15bb0beb920 172.25.249.200:8787/rhosp13/openstack-ovn-controller:latest "kolla_start" 20 months ago Up 49 minutes ovn_controller

11f5ed821071 172.25.249.200:8787/rhosp13/openstack-neutron-server-ovn:latest "kolla_start" 20 months ago Up 49 minutes (healthy) neutron_api

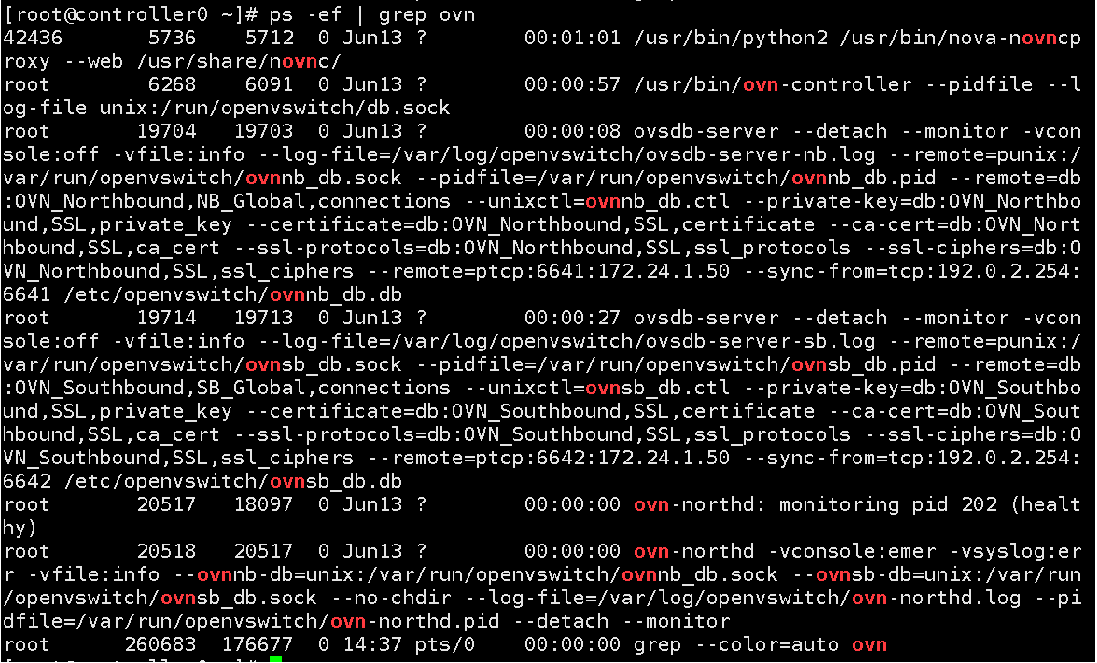

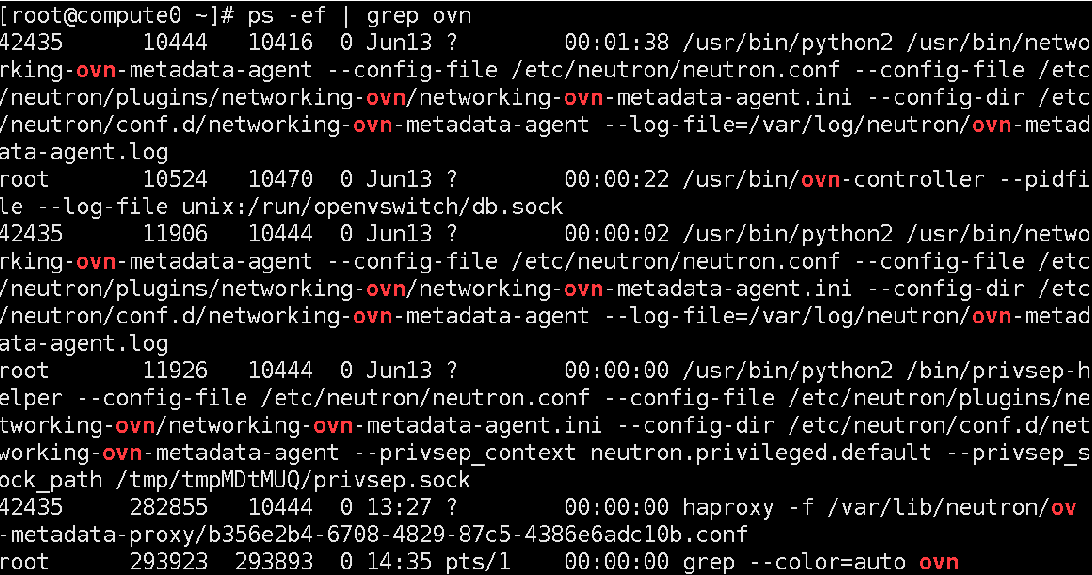

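View the OVN services running on the controller node

[root@controller0 ~]# ps -ef | grep ovn

View the OVN services running on a compute node

[root@compute0 ~]# ps -ef | grep ovn

The OVN metadata agent, configured through networking-ovn-metadata-agent.ini, is the service from which cloud-init retrieves information such as the IP address and host name

View the Open vSwitch settings and the address of the OVN database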

[root@controller0 ~]# ovs-vsctl list open

_uuid : 6c237e8b-5014-42f1-9ac4-cc7728797597

bridges : [1d420f80-91e3-44ad-85ff-b311d90a7538, b1eb4d87-1b0b-4541-aca6-e9adefbe8172, b2474c7c-c50c-4a22-8826-f1f9233bf5ab, eb5acfd9-bc1c-444e-9045-8d48aa6d01c5, efed7252-c6fe-487c-a1bc-0b4e6ee9a6d3]

cur_cfg : 46

datapath_types : [netdev, system]

db_version : "7.15.1"

external_ids : {hostname="controller0.overcloud.example.com", ovn-bridge=br-int, ovn-bridge-mappings="datacentre:br-ex,vlanprovider1:br-eth3,vlanprovider2:br-eth4,storage:br-trunk", ovn-cms-options=enable-chassis-as-gw, ovn-encap-ip="172.24.2.1", ovn-encap-type=geneve, ovn-remote="tcp:172.24.1.50:6642", rundir="/var/run/openvswitch", system-id="85c87734-e866-4225-b305-471357c68b8a"}

iface_types : [geneve, gre, internal, lisp, patch, stt, system, tap, vxlan]

manager_options : []

next_cfg : 46

other_config : {}

ovs_version : "2.9.0"

ssl : []

statistics : {}

system_type : rhel

system_version : "7.5"

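# 172.24.1.50:6642 is the address of the southbound database

Set the environment variables

[root@controller0 ~]# export OVN_SB_DB=tcp:172.24.1.50:6642 # the southbound database stores the flow table information

[root@controller0 ~]# export OVN_NB_DB=tcp:172.24.1.50:6641 # the northbound database defines the network topology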
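Both variables can be derived from the ovn-remote value in the external_ids column printed by `ovs-vsctl list open`. The sketch below extracts that value and builds the two connection strings. It assumes, as in this lab, that the northbound database sits on the same IPv4 address on port 6641; the function names are hypothetical.

```python
import re


def ovn_remote(external_ids):
    """Extract the ovn-remote value from an external_ids string."""
    match = re.search(r'ovn-remote="([^"]+)"', external_ids)
    if match is None:
        raise ValueError("ovn-remote not found")
    return match.group(1)


def db_endpoints(sb_remote, nb_port=6641):
    """Return the OVN_SB_DB and OVN_NB_DB values for a 'proto:ipv4:port' remote.

    Assumption: the northbound database listens on the same host, on nb_port.
    """
    proto, host, _sb_port = sb_remote.split(":")
    return {
        "OVN_SB_DB": sb_remote,
        "OVN_NB_DB": "%s:%s:%d" % (proto, host, nb_port),
    }


sample = 'ovn-encap-type=geneve, ovn-remote="tcp:172.24.1.50:6642", rundir="/var/run/openvswitch"'
for name, value in sorted(db_endpoints(ovn_remote(sample)).items()):
    print("export %s=%s" % (name, value))
```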

View the OVN topology in the northbound database

# View the topology of all logical switches and routers

[root@controller0 ~]# ovn-nbctl show

switch 153db687-27fe-4f90-a3f0-2958c373dcc2 (neutron-7a6556ab-6083-403e-acfc-79caf3873660) (aka finance-network1)

port b712e3d4-7b98-4c82-b6bd-e3a728af533f

type: router

router-port: lrp-b712e3d4-7b98-4c82-b6bd-e3a728af533f # port on the router side

port 30509675-60b6-4add-9deb-271dae1a9b0c # port of finance-server4

addresses: ["fa:16:3e:72:1c:08 192.168.1.8"]

port 42671818-08d4-4a99-bd80-99a452ee40b3 # localport; its address 192.168.1.2 is the one that appears in the ovnmeta namespace later in this section

type: localport

addresses: ["fa:16:3e:e3:12:fd 192.168.1.2"]

port 6dae0329-5d49-49b2-98b0-f164cc1fe66e # port of finance-server3

addresses: ["fa:16:3e:f3:fb:49 192.168.1.10"]

switch f0f71887-2544-4f29-b46c-04b0aa0b2e52 (neutron-fc5472ee-98d9-4f6b-9bc9-544ca18aefb3) (aka provider-datacentre)

port provnet-fc5472ee-98d9-4f6b-9bc9-544ca18aefb3

type: localnet

addresses: ["unknown"]

port 3faa6084-1840-427a-8a5b-effebdf66094

type: router

router-port: lrp-3faa6084-1840-427a-8a5b-effebdf66094

port d3a97fff-95de-447c-a962-01b43e0299de

type: localport

addresses: ["fa:16:3e:dc:ab:47"]

router 7e576467-0daf-4e5d-955a-a867d7369db8 (neutron-70c05d72-87e0-4318-aea1-966d850465cc) (aka finance-router1)

port lrp-3faa6084-1840-427a-8a5b-effebdf66094 # port connected to the external network

mac: "fa:16:3e:ae:ee:03"

networks: ["172.25.250.110/24"] # gateway address on the external network

gateway chassis: [85c87734-e866-4225-b305-471357c68b8a]

port lrp-b712e3d4-7b98-4c82-b6bd-e3a728af533f # port connected to finance-network1

mac: "fa:16:3e:b2:83:ba"

networks: ["192.168.1.1/24"] # gateway of the internal network

nat bf5d6985-4063-4966-a971-c69e07d77145

external ip: "172.25.250.110"

logical ip: "192.168.1.0/24"

type: "snat"

# List the IDs of all networks (logical switches)

[root@controller0 ~]# ovn-nbctl ls-list

153db687-27fe-4f90-a3f0-2958c373dcc2 (neutron-7a6556ab-6083-403e-acfc-79caf3873660)

aca840be-670a-4eb3-9b36-4246c0eabb6c (neutron-9838d8ed-3e64-4196-87f0-a4bc59059be9)

b2cc3860-13f9-4eeb-b328-10dbc1f1b131 (neutron-d55f6d1e-c29e-4825-8de4-01dd95f8a220)

c5b32043-cd23-41ac-9197-ea41917870bb (neutron-e14d713e-c1f5-4800-8543-713563d7e82e)

f0f71887-2544-4f29-b46c-04b0aa0b2e52 (neutron-fc5472ee-98d9-4f6b-9bc9-544ca18aefb3)

View the OVN topology in the southbound database

[root@controller0 ~]# ovn-sbctl show

Chassis "8b62a309-b80d-49dc-8ce8-5b000ca2ce83"

hostname: "compute0.overcloud.example.com"

Encap geneve

ip: "172.24.2.2"

options: {csum="true"}

Port_Binding "cdb69a0f-0ec3-4178-baec-dd9bc0f19f3d" # OVN logical switch port

Chassis "028ad0fe-55b6-4c8d-b3d4-03e749d6448e"

hostname: "computehci0.overcloud.example.com"

Encap geneve

ip: "172.24.2.6"

options: {csum="true"}

Chassis "85c87734-e866-4225-b305-471357c68b8a"

hostname: "controller0.overcloud.example.com"

Encap geneve

ip: "172.24.2.1"

options: {csum="true"}

Port_Binding "cr-lrp-091be0a2-bf36-4d93-bbaf-6c899a7af1ff"

Port_Binding "b5d7e4a5-4bfa-4207-aa54-d8c0e0ff7168"

Chassis "cc9fe322-d941-4fff-bd77-7490bc8687a3"

hostname: "compute1.overcloud.example.com"

Encap geneve

ip: "172.24.2.12"

options: {csum="true"}

Port_Binding "8a59b346-739b-4696-b058-be5b8e789587"

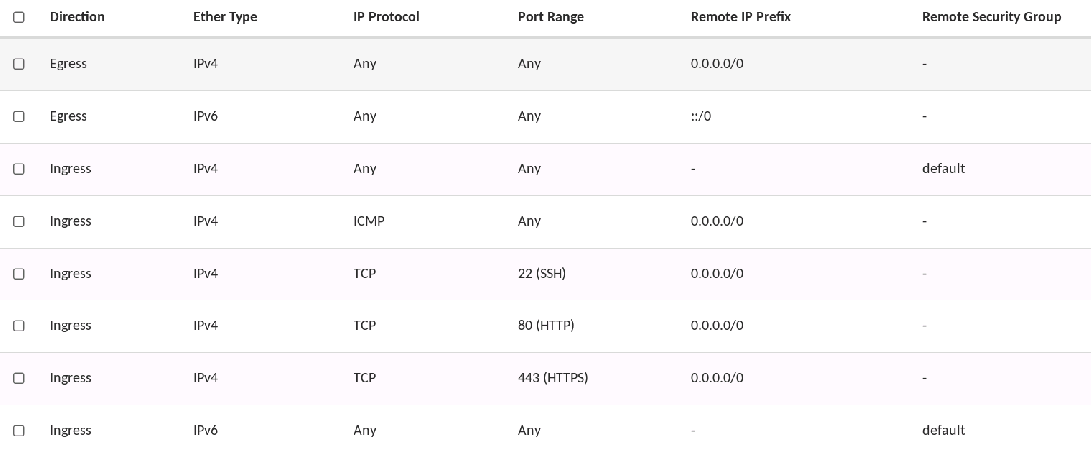

View how security group rules are implemented

# View the firewall rules for the instances on a network

[root@controller0 ~]# ovn-nbctl acl-list <ovn_logical_switch>

# View the network ports

[root@controller0 ~]# ovn-nbctl show

port 30509675-60b6-4add-9deb-271dae1a9b0c # port of finance-server4

addresses: ["fa:16:3e:72:1c:08 192.168.1.8"]

# View the firewall rules (ACLs) that apply to this port on the logical switch

[root@controller0 ~]# ovn-nbctl acl-list neutron-7a6556ab-6083-403e-acfc-79caf3873660 | grep 30509675-60b6-4add-9deb-271dae1a9b0c

from-lport 1002 (inport == "30509675-60b6-4add-9deb-271dae1a9b0c" && ip4) allow-related

from-lport 1002 (inport == "30509675-60b6-4add-9deb-271dae1a9b0c" && ip4 && ip4.dst == {255.255.255.255, 192.168.1.0/24} && udp && udp.src == 68 && udp.dst == 67) allow

from-lport 1002 (inport == "30509675-60b6-4add-9deb-271dae1a9b0c" && ip6) allow-related

from-lport 1001 (inport == "30509675-60b6-4add-9deb-271dae1a9b0c" && ip) drop

to-lport 1002 (outport == "30509675-60b6-4add-9deb-271dae1a9b0c" && ip4 && ip4.src == $as_ip4_952467e9_b667_44ba_adb6_878c2e089308) allow-related

to-lport 1002 (outport == "30509675-60b6-4add-9deb-271dae1a9b0c" && ip4 && ip4.src == 0.0.0.0/0 && icmp4) allow-related

to-lport 1002 (outport == "30509675-60b6-4add-9deb-271dae1a9b0c" && ip4 && ip4.src == 0.0.0.0/0 && tcp && tcp.dst == 22) allow-related

to-lport 1002 (outport == "30509675-60b6-4add-9deb-271dae1a9b0c" && ip4 && ip4.src == 0.0.0.0/0 && tcp && tcp.dst == 443) allow-related

to-lport 1002 (outport == "30509675-60b6-4add-9deb-271dae1a9b0c" && ip4 && ip4.src == 0.0.0.0/0 && tcp && tcp.dst == 80) allow-related

to-lport 1002 (outport == "30509675-60b6-4add-9deb-271dae1a9b0c" && ip6 && ip6.src == $as_ip6_952467e9_b667_44ba_adb6_878c2e089308) allow-related

to-lport 1001 (outport == "30509675-60b6-4add-9deb-271dae1a9b0c" && ip) drop

# The openvswitch kernel module relies on the netfilter connection tracking module (nf_conntrack) to apply these firewall rules

[root@controller0 net]# lsmod

nf_conntrack 133053 8 openvswitch,nf_nat,nf_nat_ipv4,nf_nat_ipv6,xt_conntrack,nf_conntrack_netlink,nf_conntrack_ipv4,nf_conntrack_ipv6

[root@controller0 net]# modinfo nf_conntrack

filename: /lib/modules/3.10.0-862.9.1.el7.x86_64/kernel/net/netfilter/nf_conntrack.ko.xz

Compare these ACLs with the security group rules
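One way to make that comparison less error-prone is to pull the permitted inbound TCP ports out of the ACL listing and check them against the security group. The sketch below is a hedged example: it assumes each ACL occupies a single line in the `ovn-nbctl acl-list` output (direction first, verdict last), and the function name is hypothetical.

```python
import re


def allowed_ingress_tcp_ports(acl_lines):
    """Collect tcp.dst ports from to-lport ACLs whose verdict allows traffic."""
    ports = []
    for line in acl_lines:
        fields = line.split()
        if len(fields) < 2 or fields[0] != "to-lport":
            continue  # only traffic delivered to the instance port
        if fields[-1] not in ("allow", "allow-related"):
            continue  # skip drop and reject verdicts
        match = re.search(r"tcp\.dst == (\d+)", line)
        if match:
            ports.append(int(match.group(1)))
    return sorted(ports)


sample_acls = [
    'from-lport 1002 (inport == "30509675-60b6-4add-9deb-271dae1a9b0c" && ip4) allow-related',
    'to-lport 1002 (outport == "30509675-60b6-4add-9deb-271dae1a9b0c" && ip4 && ip4.src == 0.0.0.0/0 && icmp4) allow-related',
    'to-lport 1002 (outport == "30509675-60b6-4add-9deb-271dae1a9b0c" && ip4 && ip4.src == 0.0.0.0/0 && tcp && tcp.dst == 22) allow-related',
    'to-lport 1002 (outport == "30509675-60b6-4add-9deb-271dae1a9b0c" && ip4 && ip4.src == 0.0.0.0/0 && tcp && tcp.dst == 443) allow-related',
    'to-lport 1002 (outport == "30509675-60b6-4add-9deb-271dae1a9b0c" && ip4 && ip4.src == 0.0.0.0/0 && tcp && tcp.dst == 80) allow-related',
    'to-lport 1001 (outport == "30509675-60b6-4add-9deb-271dae1a9b0c" && ip) drop',
]
print(allowed_ingress_tcp_ports(sample_acls))
```

The resulting list should line up with the TCP ingress rules reported by `openstack security group rule list` for the security group attached to the instance.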

View how routing rules are implemented

# View the router ID

[root@controller0 ~]# ovn-nbctl lr-list

7e576467-0daf-4e5d-955a-a867d7369db8 (neutron-70c05d72-87e0-4318-aea1-966d850465cc)

# View the NAT rules on the router

[root@controller0 ~]# ovn-nbctl lr-nat-list neutron-70c05d72-87e0-4318-aea1-966d850465cc

TYPE EXTERNAL_IP LOGICAL_IP EXTERNAL_MAC LOGICAL_PORT

snat 172.25.250.110 192.168.1.0/24

# Bind a floating IP to the instance

[student@workstation ~(architect1-finance)]$ openstack network list

[student@workstation ~(architect1-finance)]$ openstack floating ip create provider-datacentre

[student@workstation ~(architect1-finance)]$ openstack floating ip list

[student@workstation ~(architect1-finance)]$ openstack server add floating ip finance-server3 172.25.250.104

# View the NAT rules again

[root@controller0 ~]# ovn-nbctl lr-nat-list neutron-70c05d72-87e0-4318-aea1-966d850465cc

TYPE EXTERNAL_IP LOGICAL_IP EXTERNAL_MAC LOGICAL_PORT

dnat_and_snat 172.25.250.104 192.168.1.10 fa:16:3e:d9:f8:02 6dae0329-5d49-49b2-98b0-f164cc1fe66e

snat 172.25.250.110 192.168.1.0/24

View flow table information in the southbound database

On the controller node, view the complete logical flow table, which the database aggregates for the entire network

# Find the ID of the instance's port on OVS

[student@workstation ~(architect1-finance)]$ openstack port list

30509675-60b6-4add-9deb-271dae1a9b0c | fa:16:3e:72:1c:08 | ip_address='192.168.1.8', subnet_id='4836a9dd-e01f-4a33-a971-fa49dec9ffd5' | ACTIVE |

# Or:

[root@controller0 ~]# ovn-nbctl show

port 30509675-60b6-4add-9deb-271dae1a9b0c # port of finance-server4

addresses: ["fa:16:3e:72:1c:08 192.168.1.8"]

# View the logical flows related to the instance's port

[root@controller0 ~]# ovn-sbctl lflow-list | grep 30509675-60b6-4add-9deb-271dae1a9b0c

# View all addresses offered by DHCP

[root@controller0 ~]# ovn-sbctl lflow-list | grep offerip

# View the NAT flows

[root@controller0 ~]# ovn-sbctl lflow-list | grep nat

On the compute nodes, view the actual flow tables of each node. The controller node defines the network, and the ovn-controller service on each compute node receives that definition and implements it.

[root@compute1 ~]# docker ps | grep ovn

e2c26290b12a 172.25.249.200:8787/rhosp13/openstack-ovn-controller:latest "kolla_start" 20 months ago Up 22 hours ovn_controller

9aec1c0ab9c5 172.25.249.200:8787/rhosp13/openstack-neutron-metadata-agent-ovn:latest "kolla_start" 20 months ago Up 22 hours (healthy) ovn_metadata_agent

# View the actual flow table on the compute node

[root@compute1 ~]# ovs-ofctl dump-flows br-int | grep nat

[root@controller0 ~]# ovs-ofctl show br-trunk

OFPT_FEATURES_REPLY (xid=0x2): dpid:0000525400010001

n_tables:254, n_buffers:0

capabilities: FLOW_STATS TABLE_STATS PORT_STATS QUEUE_STATS ARP_MATCH_IP

actions: output enqueue set_vlan_vid set_vlan_pcp strip_vlan mod_dl_src mod_dl_dst mod_nw_src mod_nw_dst mod_nw_tos mod_tp_src mod_tp_dst

1(eth1): addr:52:54:00:01:00:01

config: 0

state: 0

speed: 0 Mbps now, 0 Mbps max

2(vlan10): addr:fe:3a:e2:38:5a:39

config: 0

state: 0

speed: 0 Mbps now, 0 Mbps max

3(vlan20): addr:c2:d7:6e:3b:48:c7

config: 0

state: 0

speed: 0 Mbps now, 0 Mbps max

4(vlan30): addr:a6:91:4b:0a:10:09

config: 0

state: 0

speed: 0 Mbps now, 0 Mbps max

5(vlan40): addr:9a:ee:fe:1b:d0:24

config: 0

state: 0

speed: 0 Mbps now, 0 Mbps max

6(vlan50): addr:76:f2:58:0e:8c:13

config: 0

state: 0

speed: 0 Mbps now, 0 Mbps max

LOCAL(br-trunk): addr:52:54:00:01:00:01

config: 0

state: 0

speed: 0 Mbps now, 0 Mbps max

OFPT_GET_CONFIG_REPLY (xid=0x4): frags=normal miss_send_len=0

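Key point: packets tagged with a VNI are encapsulated in the Geneve tunnel and then travel over vlan20

Observe Geneve traffic crossing the tenant network on vlan20 (172.24.2.0/24)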

[student@workstation ~(architect1-finance)]$ openstack server list

+--------------------------------------+------+--------+-------------------------------+-------+---------+

| ID | Name | Status | Networks | Image | Flavor |

+--------------------------------------+------+--------+-------------------------------+-------+---------+

| 3b9ef7e8-cd48-4c2f-9b81-60cade80d854 | web2 | ACTIVE | finance-network1=192.168.1.12 | rhel7 | default |

| 67445708-c862-40bd-ab21-7cb01875a5b5 | web1 | ACTIVE | finance-network1=192.168.1.8 | rhel7 | default |

+--------------------------------------+------+--------+-------------------------------+-------+---------+

# Capture packets on the vlan20 interface of the compute node

[root@compute1 ~]# tcpdump -ten -i vlan20 | grep ICMP

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on vlan20, link-type EN10MB (Ethernet), capture size 262144 bytes

e2:6a:ed:98:21:43 > d2:ed:bc:5d:dc:75, ethertype IPv4 (0x0800), length 156: 172.24.2.12.24438 > 172.24.2.2.6081: Geneve, Flags [C], vni 0x4, proto TEB (0x6558), options [8 bytes]: fa:16:3e:ba:a7:84 > fa:16:3e:b5:7f:df, ethertype IPv4 (0x0800), length 98: 192.168.1.8 > 192.168.1.12: ICMP echo request, id 1318, seq 1, length 64

d2:ed:bc:5d:dc:75 > e2:6a:ed:98:21:43, ethertype IPv4 (0x0800), length 156: 172.24.2.2.25470 > 172.24.2.12.6081: Geneve, Flags [C], vni 0x4, proto TEB (0x6558), options [8 bytes]: fa:16:3e:b5:7f:df > fa:16:3e:ba:a7:84, ethertype IPv4 (0x0800), length 98: 192.168.1.12 > 192.168.1.8: ICMP echo reply, id 1318, seq 1, length 64

# Note: the VNI of finance-network1 is 0x4

Create two networks, each with its own VNI. web2 and web3 run on different compute nodes, and the traffic between them travels over vlan20

[student@workstation ~(developer1-finance)]$ openstack server list

+--------------------------------------+------+--------+-------------------------------+-------+---------+

| ID | Name | Status | Networks | Image | Flavor |

+--------------------------------------+------+--------+-------------------------------+-------+---------+

| 18a4252c-969e-4bbf-90e1-c81765a3fc5a | web3 | ACTIVE | finance-network2=192.168.2.4 | rhel7 | default |

| 3b9ef7e8-cd48-4c2f-9b81-60cade80d854 | web2 | ACTIVE | finance-network1=192.168.1.12 | rhel7 | default |

| 67445708-c862-40bd-ab21-7cb01875a5b5 | web1 | ACTIVE | finance-network1=192.168.1.8 | rhel7 | default |

+--------------------------------------+------+--------+-------------------------------+-------+---------+

[root@compute0 ~]# tcpdump -ten -i vlan20 | grep ICMP

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on vlan20, link-type EN10MB (Ethernet), capture size 262144 bytes

e2:6a:ed:98:21:43 > d2:ed:bc:5d:dc:75, ethertype IPv4 (0x0800), length 156: 172.24.2.12.29342 > 172.24.2.2.6081: Geneve, Flags [C], vni 0x4, proto TEB (0x6558), options [8 bytes]: fa:16:3e:1b:08:3e > fa:16:3e:b5:7f:df, ethertype IPv4 (0x0800), length 98: 192.168.2.4 > 192.168.1.12: ICMP echo request, id 1341, seq 1, length 64

d2:ed:bc:5d:dc:75 > e2:6a:ed:98:21:43, ethertype IPv4 (0x0800), length 156: 172.24.2.2.40524 > 172.24.2.12.6081: Geneve, Flags [C], vni 0x6, proto TEB (0x6558), options [8 bytes]: fa:16:3e:44:47:9c > fa:16:3e:05:13:ab, ethertype IPv4 (0x0800), length 98: 192.168.1.12 > 192.168.2.4: ICMP echo reply, id 1341, seq 1, length 64

# Note:

The VNI of finance-network1 is 0x4

The VNI of finance-network2 is 0x6
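Reading VNIs out of long tcpdump lines by eye is tedious, so a small parser can help. The sketch below is only an illustration: it assumes each packet is on a single line in the format produced by `tcpdump -ten` in the captures above, it handles only ICMP payloads, and the function name is hypothetical.

```python
import re

# Outer Geneve header, then the inner IPv4 source and destination of an ICMP packet.
GENEVE_ICMP = re.compile(
    r"Geneve, .*?vni (0x[0-9a-fA-F]+).*?length \d+: (\S+) > (\S+): ICMP"
)


def geneve_icmp_flows(capture_text):
    """Return (vni, inner_src, inner_dst) for each Geneve-encapsulated ICMP line."""
    flows = []
    for line in capture_text.splitlines():
        match = GENEVE_ICMP.search(line)
        if match:
            vni, src, dst = match.groups()
            flows.append((int(vni, 16), src, dst))
    return flows


sample_capture = (
    "e2:6a:ed:98:21:43 > d2:ed:bc:5d:dc:75, ethertype IPv4 (0x0800), length 156: "
    "172.24.2.12.24438 > 172.24.2.2.6081: Geneve, Flags [C], vni 0x4, proto TEB (0x6558), "
    "options [8 bytes]: fa:16:3e:ba:a7:84 > fa:16:3e:b5:7f:df, ethertype IPv4 (0x0800), "
    "length 98: 192.168.1.8 > 192.168.1.12: ICMP echo request, id 1318, seq 1, length 64\n"
)
for vni, src, dst in geneve_icmp_flows(sample_capture):
    print("vni=%d %s -> %s" % (vni, src, dst))
```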

OVN DHCP:

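- OVN implements DHCPv4 itself and does not need a DHCP agent

- Virtual networks no longer need DHCP namespaces or dnsmasq processes

- Every compute node that runs ovn-controller is configured with the DHCPv4 options, which means DHCP support is fully distributed

- DHCP requests from instances are handled by ovn-controller

- The DHCP_Options table in the OVN northbound database stores the DHCP options

- If the enable_dhcp option is set to True, a new entry is added to the database when a subnet is created

- The DHCPv4 options stored in the options column of the DHCP_Options table include the values that ovn-controller sends in its reply when it receives a DHCPv4 request

$ ovn-nbctl list DHCP_Options: shows the DHCP_Options table in the OVN northbound database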

[root@controller0 ~]# ovn-nbctl list DHCP_Options

_uuid : 9a09543e-f937-4110-bc86-dc6a7464ba53

cidr : "192.168.1.0/24"

external_ids : {"neutron:revision_number"="0", subnet_id="4836a9dd-e01f-4a33-a971-fa49dec9ffd5"}

options : {classless_static_route="{169.254.169.254/32,192.168.1.2, 0.0.0.0/0,192.168.1.1}", dns_server="{172.25.250.254}", lease_time="43200", mtu="1442", router="192.168.1.1",server_id="192.168.1.1", server_mac="fa:16:3e:9f:1a:b5"}

[root@compute0 ~]# ip netns ls

ovnmeta-b356e2b4-6708-4829-87c5-4386e6adc10b (id: 0)

[root@compute0 ~]# ip netns exec ovnmeta-b356e2b4-6708-4829-87c5-4386e6adc10b ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: tapb356e2b4-61@if20: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether fa:16:3e:e3:12:fd brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 192.168.1.2/24 brd 192.168.1.255 scope global tapb356e2b4-61

valid_lft forever preferred_lft forever

inet 169.254.169.254/16 brd 169.254.255.255 scope global tapb356e2b4-61

valid_lft forever preferred_lft forever
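The classless_static_route value in the DHCP_Options output ties these pieces together: it tells each instance to reach 169.254.169.254/32 through 192.168.1.2, the address configured inside the ovnmeta namespace shown above, and to use 192.168.1.1 as the default route. The sketch below splits that option into destination and next-hop pairs; the parsing rule (comma-separated pairs inside braces) is inferred from the output in this manual, and the function name is hypothetical.

```python
def parse_classless_static_route(value):
    """Turn '{dest,next-hop, dest,next-hop}' into a list of (dest, next_hop) pairs."""
    items = [item.strip() for item in value.strip("{} ").split(",")]
    if len(items) % 2:
        raise ValueError("expected destination,next-hop pairs")
    return list(zip(items[0::2], items[1::2]))


routes = parse_classless_static_route(
    "{169.254.169.254/32,192.168.1.2, 0.0.0.0/0,192.168.1.1}"
)
for destination, next_hop in routes:
    print("%s via %s" % (destination, next_hop))
```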

Summary of OVN/OVS commands:

Set the OVN_NB_DB or OVN_SB_DB environment variable before using the following commands

export OVN_SB_DB=tcp:172.24.1.50:6642

export OVN_NB_DB=tcp:172.24.1.50:6641

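Commands for the OVN northbound database:

$ ovs-vsctl list Open_vSwitch: shows the ovn-remote parameter used to reach the OVN northbound and southbound databases

$ ovn-nbctl show [<ovn_logical_switch>|<ovn_logical_router>]: shows the logical switches and routers in the OVN northbound database

$ ovn-nbctl ls-list: lists the OVN logical switches

$ ovn-nbctl lr-list: lists the OVN logical routers

$ ovn-nbctl lsp-list <ovn_logical_switch>: lists the ports of an OVN logical switch

$ ovn-nbctl lrp-list <ovn_logical_router>: lists the ports of an OVN logical router

$ ovn-nbctl lr-route-list <ovn_logical_router>: lists the routes of an OVN logical router

$ ovn-nbctl lr-nat-list <ovn_logical_router>: lists the NAT rules of an OVN logical router

$ ovn-nbctl dhcp-options-list: lists the OVN DHCP options

$ ovn-nbctl dhcp-options-get-options <dhcp_options_uuid>: shows the details of the specified DHCP options

$ ovn-nbctl list <ovn_nb_db_table>: shows the specified table of the OVN northbound database

$ ovn-nbctl acl-list <ovn_logical_switch>: lists the ACL rules (security group rules) of an OVN logical switch

Commands for the OVN southbound database:

$ ovn-sbctl show: shows the contents of the OVN southbound database

$ ovn-sbctl lflow-list: lists the logical flows in the OVN southbound database

$ ovn-sbctl list [Logical_Flow|Port_Binding|Chassis]: shows the details of the specified table in the OVN southbound database, such as the logical flow table or the port binding table

OVS and OpenFlow commands:

$ ovs-vsctl show: shows the details of the OVS bridges and ports

$ ovs-ofctl show [<ovs_switch>]: shows OpenFlow information for an OVS bridge and its ports

$ ovs-ofctl dump-ports-desc <ovs_switch>: lists the details of the ports on an OVS bridge

$ ovs-ofctl dump-tables <ovs_switch>: shows the OpenFlow tables of an OVS bridge

$ ovs-ofctl dump-flows <ovs_switch>: shows the OpenFlow flow entries of an OVS bridge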

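Managing Compute Resources

Describing the Instance Launch Process

- Using the dashboard or the command line, the user's account credentials are sent to Keystone as a REST API call. After successful authentication, a token is generated and returned to the user

- The instance launch request and the token are sent to the Nova service as a REST API call. nova-api forwards the request to the Keystone service, which validates the token and returns the permitted roles and permissions in an updated token header

- nova-api creates a database entry for the new instance. Other processes read data from this entry and write the current state back to this persistent entry

- nova-api publishes an rpc.call to the message queue, asking nova-scheduler to find an available compute node to run the new instance

- nova-scheduler subscribes to the new instance request, reads the filter and weight parameters from the instance database entry, reads the cluster compute node data from the database, and updates the instance record with the ID of the selected compute node. nova-scheduler submits an rpc.call to the message queue, asking nova-compute to initiate the instance launch

- nova-compute subscribes to the new instance request, then publishes an rpc.call asking nova-conductor to prepare the instance launch

- nova-conductor subscribes to the new instance request, reads the database entry to obtain the compute node ID and the requested RAM, vCPUs, and flavor, and then publishes the new instance state to the message queue

- nova-compute subscribes to the new instance request to retrieve the instance information. Using the image ID from the instance request, nova-compute sends a REST API call to the Glance service to obtain the image URL. The glance-api service forwards the request to the Keystone service, which again validates the token and returns the permitted roles and permissions in an updated token header

- Using the image ID, glance-api retrieves the image metadata from the Glance database and returns the image URL to nova-compute. nova-compute loads the image from that URL

- nova-compute sends a REST API call to the Neutron service, requesting that network resources be allocated and configured for the new instance. neutron-server forwards the request to the Keystone service, which again validates the token and returns the permitted roles and permissions in an updated token header

- neutron-server, acting as a CMS (cloud management system) plug-in, reads the logical network resource definitions and writes them to the ovn-nb database. neutron-server checks the existing networks and creates new resources for the required ports, connections, and network parameters

- The ovn-northd process reads the ovn-nb database and translates the logical network configuration into logical datapath flows in the ovn-sb database. The remaining physical network and port binding information is populated by the ovn-controller on the selected compute node

- ovn-controller reads the configuration from the ovn-sb database and updates the compute node status in the physical network and binding tables. ovn-controller connects to ovs-vswitchd as an OpenFlow controller and dynamically configures control of network traffic through OpenFlow rules on the OVS bridges. neutron-server returns the L2 configuration obtained from the compute node libvirt driver and the requested DHCP address to the message queue. nova-compute writes this instance network state to the instance database entry

- nova-compute sends a REST API call to the Cinder service, requesting the disk for the new instance. cinder-api forwards the request to the Keystone service, which again validates the token and returns the permitted roles and permissions in an updated token header

- cinder-api publishes an rpc.call to the named message queue, asking cinder-scheduler to create a volume of the requested size or to look up the metadata of an existing volume

- cinder-scheduler subscribes to the new instance request, creates or locates the volume, and then returns the requested metadata to nova-scheduler

- nova-compute generates data for the compute node driver and uses libvirt to execute the request and create the virtual machine on the compute node. nova-compute passes the volume information to libvirt

- cinder-volume subscribes to the new instance request and retrieves the cluster map. libvirt then mounts the volume on the new instance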

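Introducing Red Hat Hyperconverged Infrastructure

Exercise: Launching an Instance on a Hyperconverged Node

Initialize the lab environment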

[student@workstation ~]$ lab computeresources-hci setup

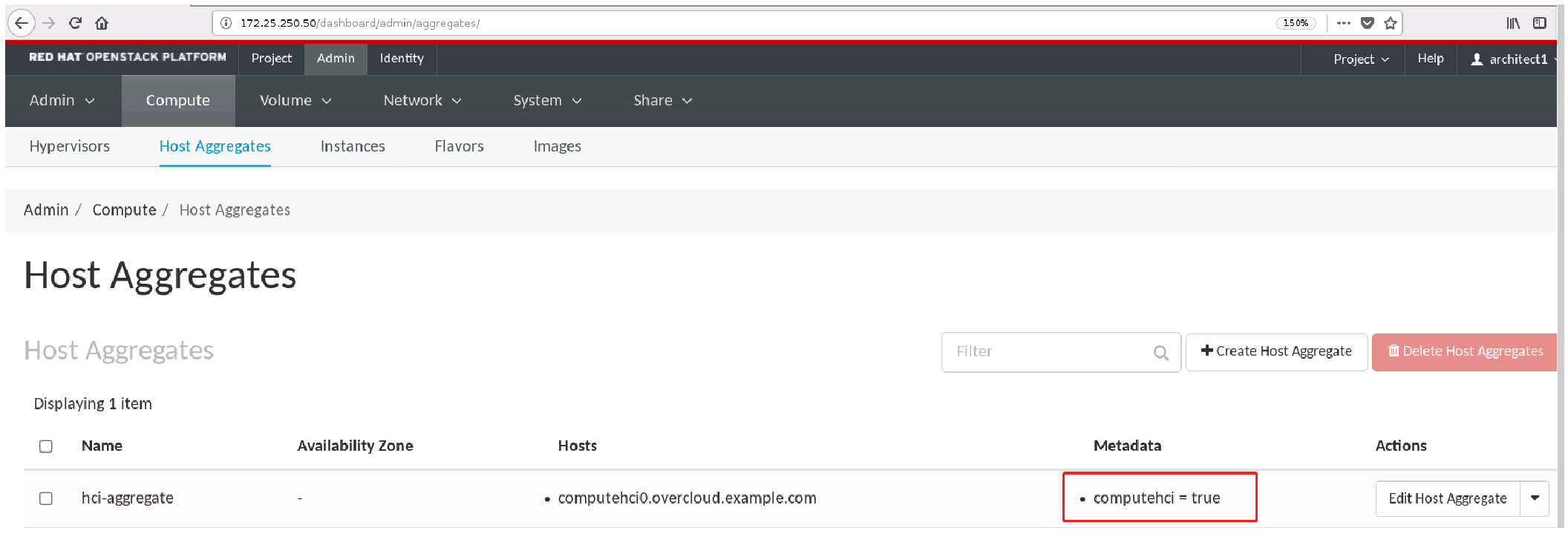

- 创建hci-aggregate主机集合

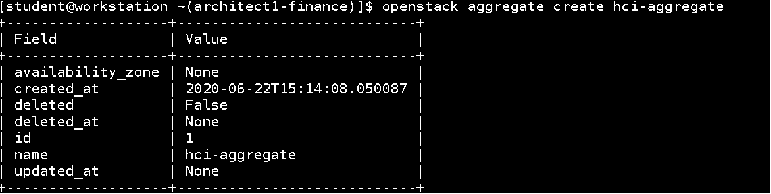

[student@workstation ~(architect1-finance)]$ openstack aggregate create hci- aggregate

- 将加入该主机集合

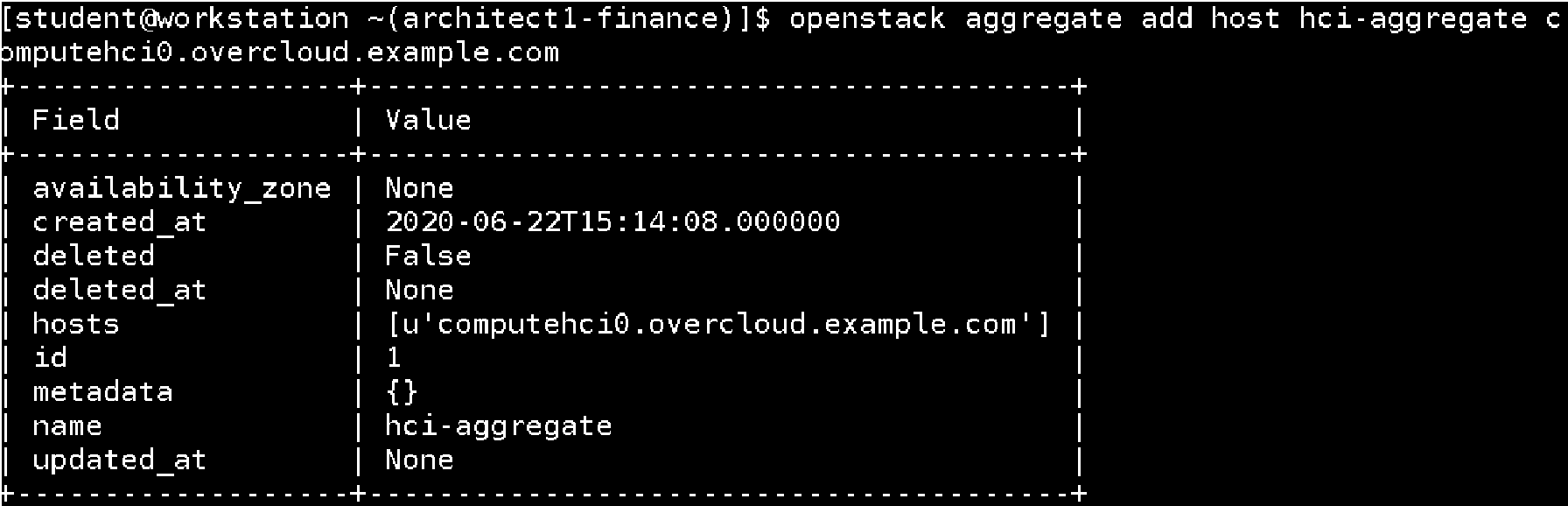

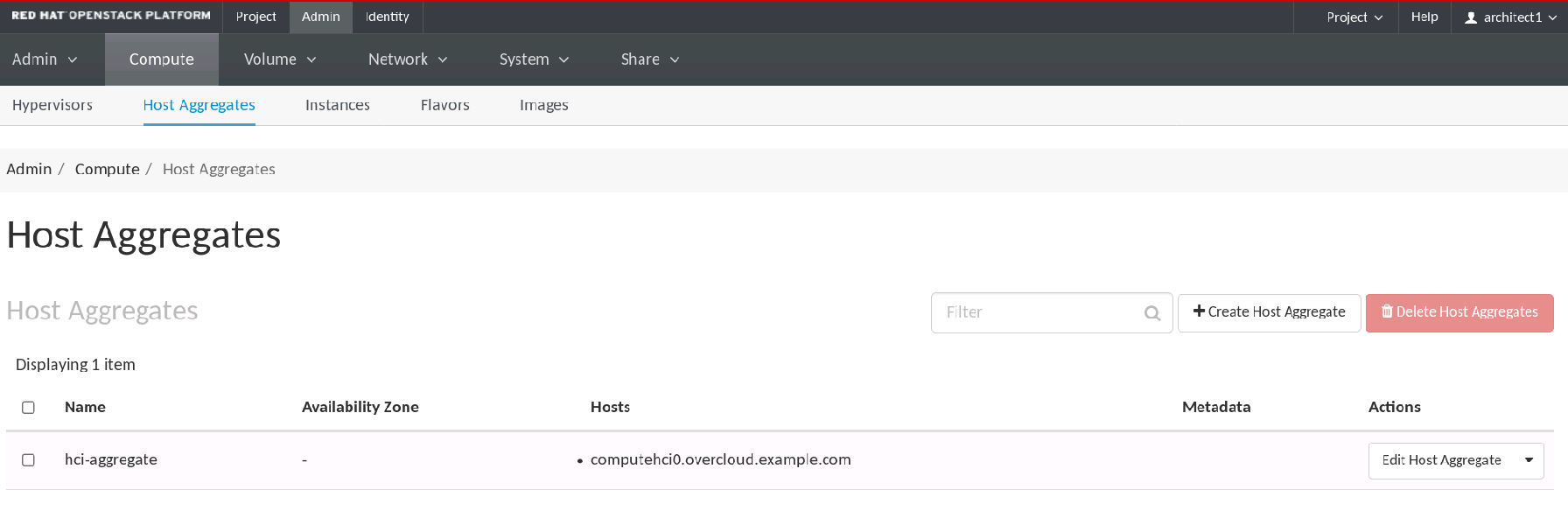

[student@workstation ~(architect1-finance)]$ openstack aggregate add host hci-aggregate computehci0.overcloud.example.com

- Set the metadata of the host aggregate

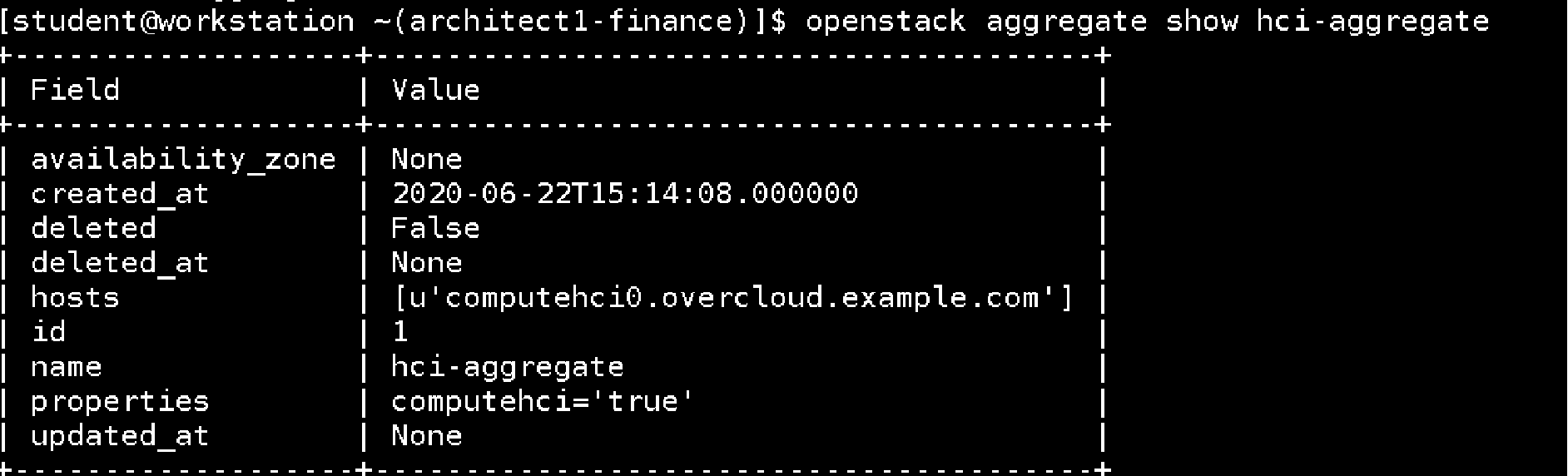

computehci=true

[student@workstation ~(architect1-finance)]$ openstack aggregate set --property computehci=true hci-aggregate

[student@workstation ~(architect1-finance)]$ openstack aggregate show hci-aggregate

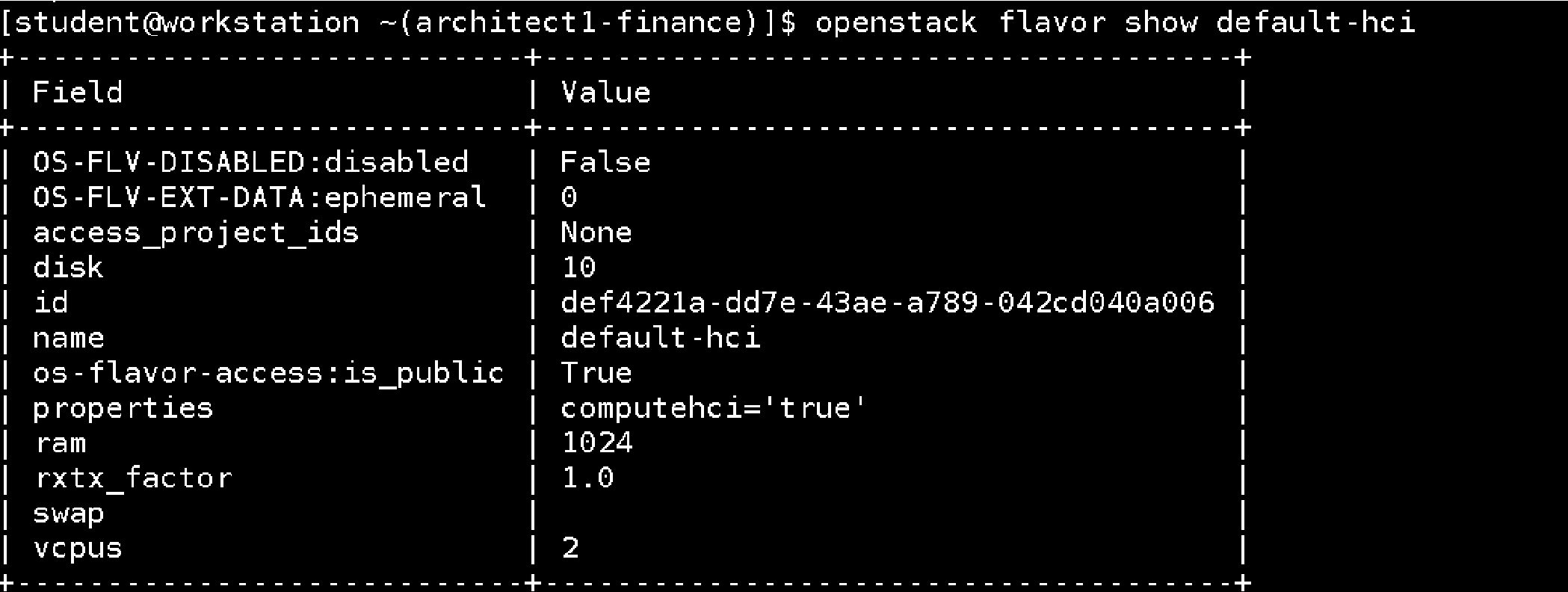

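Create a flavor

[student@workstation ~(architect1-finance)]$ openstack flavor create --ram 1024 --disk 10 --vcpus 2 --public default-hci

- Add the same tag to the flavor metadata

computehci='true'

Note: a host aggregate must be used together with a flavor, and the matching tag must also be set on the flavor. Only then are instances created from that flavor scheduled onto the hosts in the matching host aggregate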

[student@workstation ~(architect1-finance)]$ openstack flavor set default-hci --property computehci='true'

[student@workstation ~(architect1-finance)]$ openstack flavor show default-hci

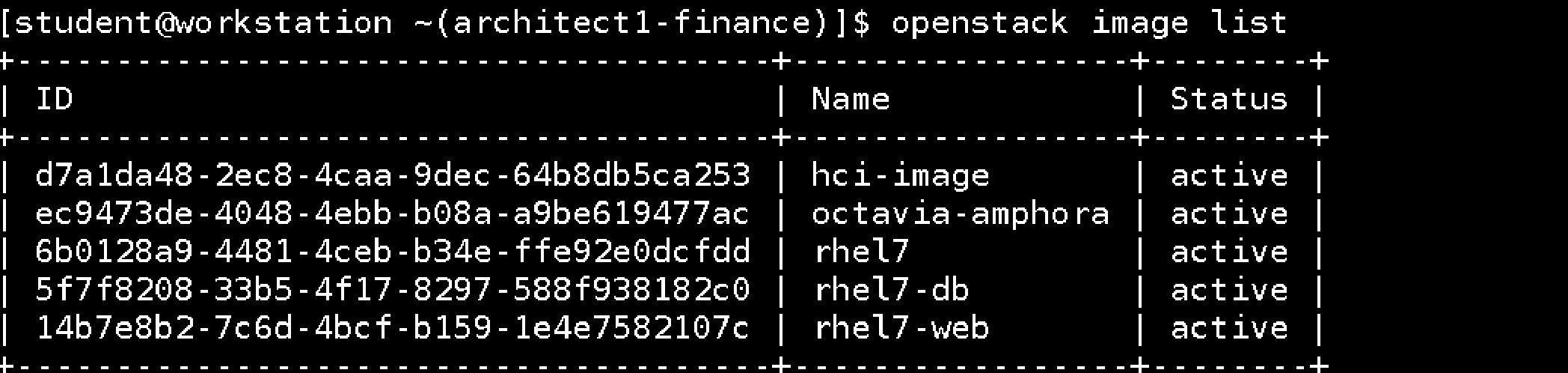
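The pairing of aggregate metadata and flavor properties described in the note can be sketched as a simple filter. This is a simplified model of the idea, assuming plain equality matching; it is not Nova's scheduler code, and the function and variable names are illustrative only.

```python
# Simplified model: a host is eligible when every flavor property is offered
# by at least one aggregate that the host belongs to. Equality matching only.

def host_matches_flavor(flavor_props, host_aggregate_metadata):
    """flavor_props: dict of flavor properties.
    host_aggregate_metadata: list of metadata dicts, one per aggregate
    the host is a member of."""
    for key, wanted in flavor_props.items():
        offered = {meta[key] for meta in host_aggregate_metadata if key in meta}
        if wanted not in offered:
            return False
    return True


# Aggregate membership as configured in this exercise; compute0 and compute1
# are assumed here to belong to no aggregate.
hosts = {
    "computehci0.overcloud.example.com": [{"computehci": "true"}],
    "compute0.overcloud.example.com": [],
    "compute1.overcloud.example.com": [],
}
flavor = {"computehci": "true"}

eligible = [name for name, aggs in hosts.items() if host_matches_flavor(flavor, aggs)]
print(eligible)
```

Under these assumptions only computehci0 remains eligible, which is why an instance created with the default-hci flavor is expected to land on the hyperconverged node.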

- List the images

[student@workstation ~(architect1-finance)]$ openstack image list

- Create the instance

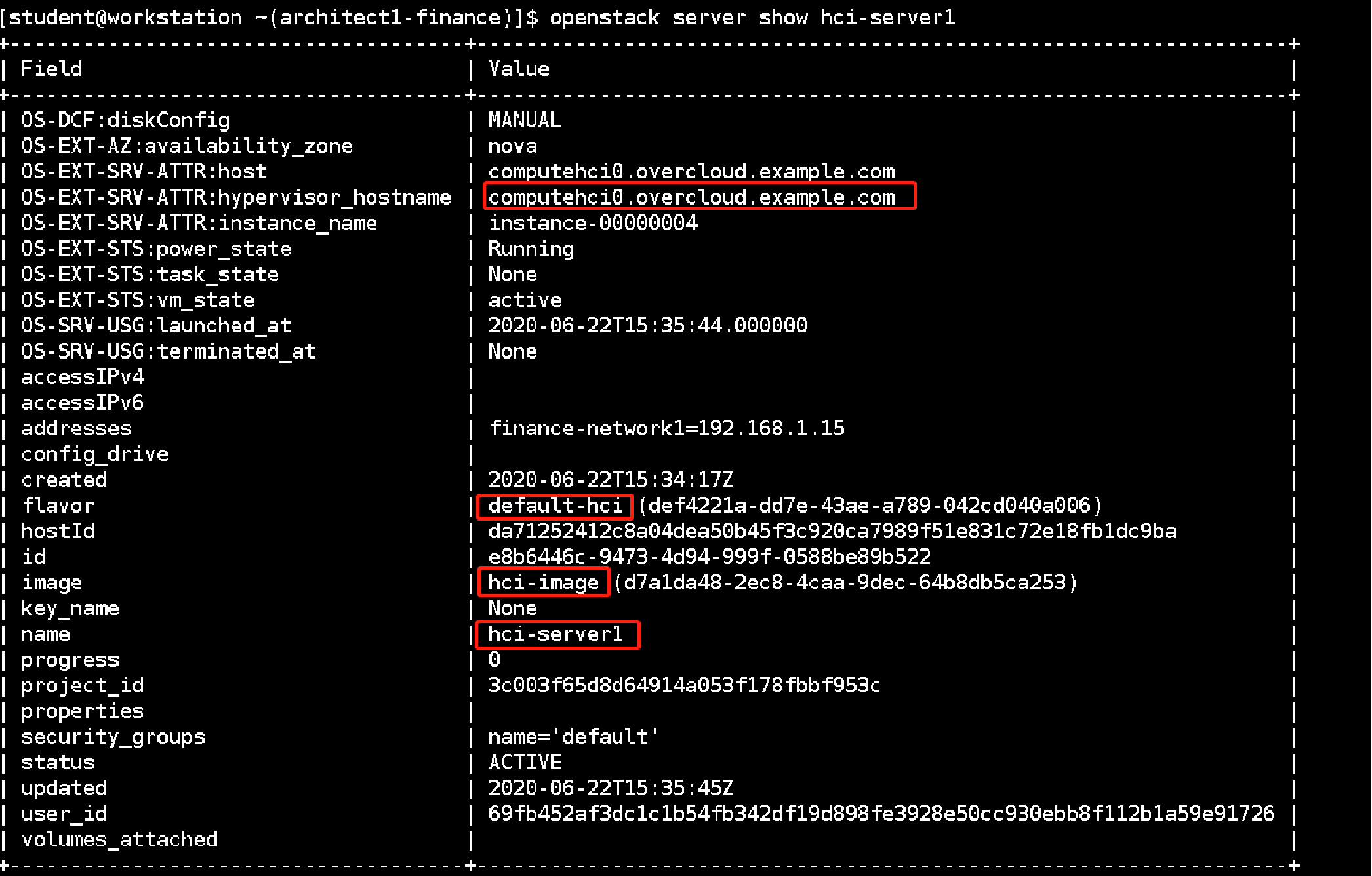

[student@workstation ~(architect1-finance)]$ openstack server create --flavor default-hci --image hci-image --nic net-id=finance-network1 --wait hci-server1

[student@workstation ~(architect1-finance)]$ openstack server show hci-server1

The output above shows that the instance was scheduled and created on the hyperconverged node
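One way to confirm the placement without scanning the full table is to parse the JSON form of `openstack server show` (the `-f json` formatter). The sketch below assumes the host is reported under the `OS-EXT-SRV-ATTR:host` field, which is normally visible only to administrative users. The sample JSON is a hypothetical, trimmed stand-in for real output.

```python
import json


def server_host(show_json):
    """Return the compute host recorded for a server, or None if the
    field is absent (for example, when the user lacks admin rights)."""
    server = json.loads(show_json)
    return server.get("OS-EXT-SRV-ATTR:host")


# Hypothetical, trimmed sample of `openstack server show hci-server1 -f json`.
sample = """
{
  "name": "hci-server1",
  "status": "ACTIVE",
  "OS-EXT-SRV-ATTR:host": "computehci0.overcloud.example.com"
}
"""

host = server_host(sample)
print(host)
```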

Clean up the environment

[student@workstation ~(architect1-finance)]$ lab computeresources-hci cleanup

Managing Compute Nodes

Live Migrating Instances

- Initialize the environment

[student@workstation ~]$ lab computeresources-migrate setup

- List all instances

[student@workstation ~]$ source architect1-finance-rc

[student@workstation ~(architect1-finance)]$ openstack server list

- List the services on all compute nodes

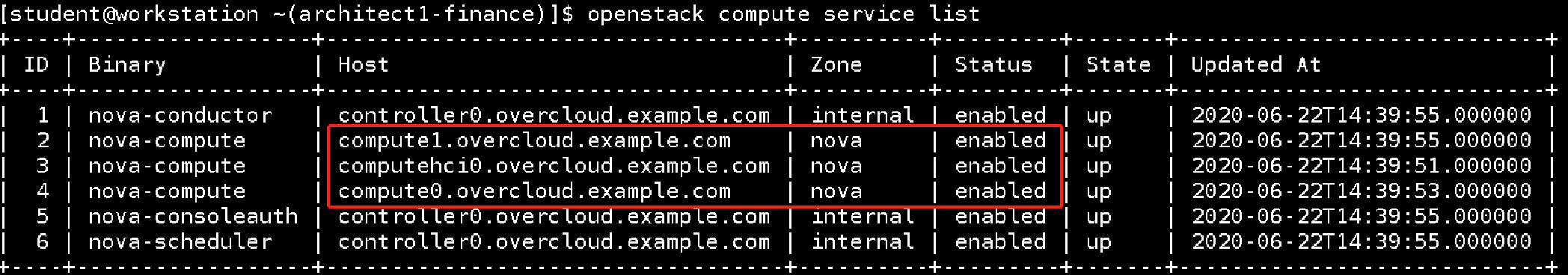

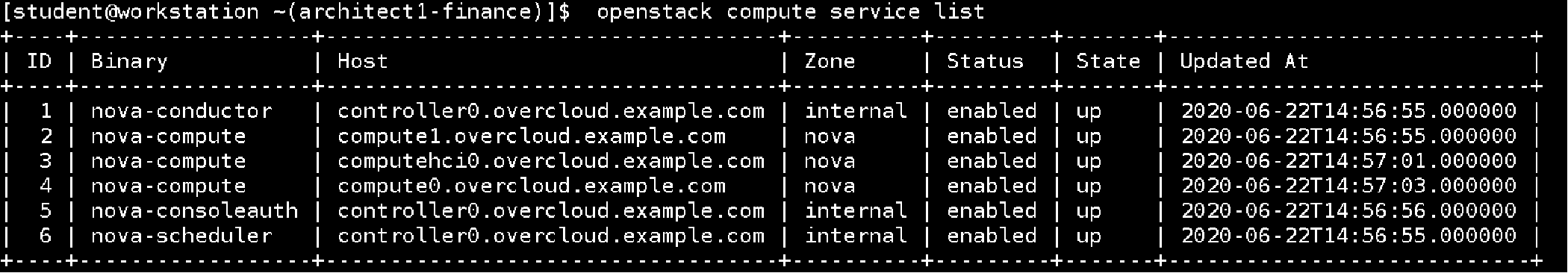

[student@workstation ~(architect1-finance)]$ openstack compute service list

Re-enable the nova-compute service on compute0

[student@workstation ~(architect1-finance)]$ openstack compute service set compute0.overcloud.example.com nova-compute --enable

[student@workstation ~(architect1-finance)]$ openstack compute service list

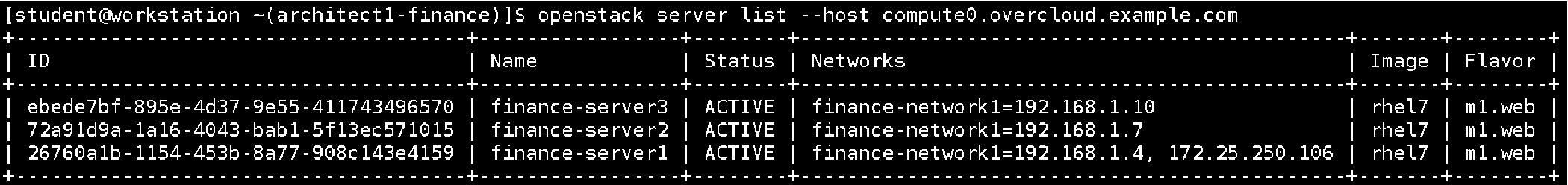
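Before a live migration it helps to know which nova-compute services are disabled, since a disabled service is not a valid migration target. A minimal sketch, assuming the `-f json` output of `openstack compute service list` with its `Binary`, `Host`, and `Status` columns; the sample data is hypothetical.

```python
import json


def disabled_compute_hosts(service_list_json):
    """Return the sorted hosts whose nova-compute service is disabled."""
    services = json.loads(service_list_json)
    return sorted(
        s["Host"]
        for s in services
        if s["Binary"] == "nova-compute" and s["Status"] == "disabled"
    )


# Hypothetical sample of `openstack compute service list -f json`.
sample = """
[
  {"Binary": "nova-scheduler", "Host": "controller0.overcloud.example.com",
   "Status": "enabled", "State": "up"},
  {"Binary": "nova-compute", "Host": "compute0.overcloud.example.com",
   "Status": "disabled", "State": "up"},
  {"Binary": "nova-compute", "Host": "compute1.overcloud.example.com",
   "Status": "enabled", "State": "up"}
]
"""

disabled = disabled_compute_hosts(sample)
print(disabled)
```

An empty result after the `--enable` command indicates that every compute node can again receive instances.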

Migrate the instances on compute1 to another compute node again

[student@workstation ~(architect1-finance)]$ nova host-evacuate-live compute1

[student@workstation ~(architect1-finance)]$ openstack server list --host compute0.overcloud.example.com

Clean up the environment

[student@workstation ~]$ lab computeresources-migrate cleanup

Automating Applications

# List all supported template versions

[student@workstation ~(developer1-finance)]$ openstack orchestration template version list

+--------------------------------------+------+------------------------------+

| Version | Type | Aliases |

+--------------------------------------+------+------------------------------+

| AWSTemplateFormatVersion.2010-09-09 | cfn | |

| HeatTemplateFormatVersion.2012-12-12 | cfn | |

| heat_template_version.2013-05-23 | hot | |

| heat_template_version.2014-10-16 | hot | |

| heat_template_version.2015-04-30 | hot | |

| heat_template_version.2015-10-15 | hot | |

| heat_template_version.2016-04-08 | hot | |

| heat_template_version.2016-10-14 | hot | heat_template_version.newton |

| heat_template_version.2017-02-24 | hot | heat_template_version.ocata |

| heat_template_version.2017-09-01 | hot | heat_template_version.pike |

| heat_template_version.2018-03-02 | hot | heat_template_version.queens |

+--------------------------------------+------+------------------------------+

# queens corresponds to OSP 13; newton corresponds to OSP 10
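The Aliases column in the listing above means a template may name a release instead of a date. A minimal sketch of that lookup, with the mapping copied from the table and the two OSP releases taken from the comment above; the function name is illustrative only.

```python
# Alias to dated version, copied from the version list shown above.
HOT_ALIASES = {
    "newton": "2016-10-14",
    "ocata": "2017-02-24",
    "pike": "2017-09-01",
    "queens": "2018-03-02",
}

# Release to Red Hat OpenStack Platform version, as stated in the comment above.
OSP_RELEASE = {"newton": 10, "queens": 13}


def resolve_template_version(value):
    """Map an alias such as 'queens' to its dated version.
    Dated versions are returned unchanged."""
    return HOT_ALIASES.get(value, value)


print(resolve_template_version("queens"))
print(resolve_template_version("2015-10-15"))
```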

[student@workstation ~(developer1-finance)]$ openstack orchestration resource type list

+----------------------------------------------+

| ResourceType |

+----------------------------------------------+

| AWS::AutoScaling::AutoScalingGroup |

| AWS::AutoScaling::LaunchConfiguration |

| AWS::AutoScaling::ScalingPolicy |

[student@workstation ~(developer1-finance)]$ openstack orchestration resource type show OS::Nova::Server

- Edit the template file and create the stack

# Download the template

[student@workstation ~]$ wget http://materials.example.com/heat/finance-app1.yaml-final

# Edit the environment file

[student@workstation ~]$ cat environment.yaml

parameters:

  web_image_name: "rhel7-web"

  db_image_name: "rhel7-db"

  web_instance_name: "fianace-web"

  db_instance_name: "fianace-db"

  instance_flavor: "default"

  key_name: "example-keypair"

  public_net: "provider-datacentre"

  private_net: "finance-network1"

  private_subnet: "finance-subnet1"

# Dry run

[student@workstation ~(developer1-finance)]$ openstack stack create --environment environment.yaml --template finance-app1.yaml --dry-run -c description finance-app1

# Create the stack

[student@workstation ~(developer1-finance)]$ openstack stack create --environment environment.yaml --template finance-app1.yaml --wait finance-app1

[student@workstation ~(developer1-finance)]$ openstack stack output list finance-app1

+----------------+---------------------------------------------------------------------------+

| output_key | description |

+----------------+---------------------------------------------------------------------------+

| web_private_ip | Private IP address of the web server |

| web_public_ip | External IP address of the web server |

| website_url | This URL is the "external" URL that can be used to access the web server. |

| | |

| db_private_ip | Private IP address of the DB server |

+----------------+---------------------------------------------------------------------------+

[student@workstation ~(developer1-finance)]$ openstack stack output show finance-app1 website_url

+--------------+---------------------------------------------------------------------------+

| Field | Value |

+--------------+---------------------------------------------------------------------------+

| description | This URL is the "external" URL that can be used to access the web server. |

| | |

| output_key | website_url |

| output_value | http://172.25.250.105/index.php |

+--------------+---------------------------------------------------------------------------+

[student@workstation ~(developer1-finance)]$ curl http://172.25.250.105/index.php
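Rather than copying the URL by hand from the table, the value can be read from the JSON form of `openstack stack output show` (the `-f json` formatter). A minimal sketch, assuming the JSON carries the same `output_key` and `output_value` fields as the table above; the sample string reuses the values shown in this exercise.

```python
import json
from urllib.parse import urlparse


def stack_output_value(output_show_json):
    """Return the output_value field of a stack output."""
    return json.loads(output_show_json)["output_value"]


# Sample built from the table above; real output may carry extra fields.
sample = """
{
  "description": "This URL is the \\"external\\" URL that can be used to access the web server.",
  "output_key": "website_url",
  "output_value": "http://172.25.250.105/index.php"
}
"""

url = stack_output_value(sample)
parsed = urlparse(url)
print(url, parsed.hostname, parsed.path)
```

The extracted URL can then be passed to curl or any HTTP client to check that the web server responds.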

Clean up the environment

[student@workstation ~]$ lab orchestration-heat-templates cleanup