[toc]

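Kubernetes operation notes, part 6

Scheduler, predicates, and priority functions

Scheduling can roughly be divided into three phases: Predicate (filter out unfit nodes) -> Priority (score the remaining nodes) -> Select (pick the winning node)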
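The three phases can be sketched in a few lines of Python. This is only an illustration of the filter, score, and select flow: the `schedule` function, the dictionary-based node and Pod records, and the `free_cpu` field are hypothetical and not part of the real scheduler, which also breaks ties randomly rather than taking the first candidate.

```python
from typing import Callable, Dict, List, Optional

Node = Dict[str, object]  # hypothetical node record, e.g. {"name": "node01", "free_cpu": 500}
Pod = Dict[str, object]   # hypothetical Pod record, e.g. {"cpu": 400}


def schedule(
    pod: Pod,
    nodes: List[Node],
    predicates: List[Callable[[Pod, Node], bool]],
    priorities: List[Callable[[Pod, Node], float]],
) -> Optional[str]:
    """Return the name of the chosen node, or None if no node is feasible."""
    # Predicate phase: keep only the nodes that pass every filter.
    feasible = [n for n in nodes if all(p(pod, n) for p in predicates)]
    if not feasible:
        return None  # the Pod would stay Pending

    # Priority phase: add up the scores of all priority functions per node.
    scored = [(sum(f(pod, n) for f in priorities), n) for n in feasible]

    # Select phase: take a node with the highest total score.
    # Simplification: the first of several equally scored nodes wins.
    best = max(score for score, _ in scored)
    winners = [n for score, n in scored if score == best]
    return str(winners[0]["name"])
```

Predicates and priority functions are passed in as plain callables, so each check described in the lists that follow could be modelled as one small function.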

Scheduler:

Predicates:

CheckNodeCondition: checks whether the node itself is healthy.

GeneralPredicates:

HostName: checks whether the Pod sets pod.spec.nodeName and, if so, whether it matches the node's name.

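PodFitsHostPorts: checks whether the host ports requested in pods.spec.containers.ports.hostPort are still free on the node.

MatchNodeSelector: checks whether the node's labels satisfy pods.spec.nodeSelector.

PodFitsResources: checks whether the node's available resources can satisfy the Pod's resource requests.

NoDiskConflict: checks whether the volumes the Pod depends on can be satisfied on the node.

PodToleratesNodeTaints: checks whether the taints tolerated by the Pod's spec.tolerations fully cover the taints on the node.

PodToleratesNodeNoExecuteTaints: checks taints with the NoExecute effect; this predicate is not enabled by default.

CheckNodeLabelPresence: checks whether the specified labels are present on the node.

CheckServiceAffinity: tries to place the Pod on nodes that already run other Pods belonging to the same Service.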

MaxEBSVolumeCount:

MaxGCEPDVolumeCount:

MaxAzureDiskVolumeCount:

CheckVolumeBinding:

NoVolumeZoneConflict:

CheckNodeMemoryPressure: checks whether the node is under memory pressure.

CheckNodePidPressure: checks whether the node is running short of process IDs (PIDs).

CheckNodeDiskPressure:

MatchInterPodAffinity:

Priority functions

LeastRequested:

(cpu((capacity - sum(requested)) * 10 / capacity) + memory((capacity - sum(requested)) * 10 / capacity)) / 2
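As a worked example of the LeastRequested formula, the sketch below evaluates it for one node. The function name and the choice of units (millicores for CPU, MiB for memory) are assumptions made for illustration; the real scheduler works with integer arithmetic, while this sketch uses floats.

```python
def least_requested_score(
    cpu_capacity: float,
    cpu_requested: float,
    mem_capacity: float,
    mem_requested: float,
) -> float:
    """Average of the per-resource scores (capacity - requested) * 10 / capacity."""

    def resource_score(capacity: float, requested: float) -> float:
        # A node without capacity, or one that is already over-requested, scores 0.
        if capacity <= 0 or requested > capacity:
            return 0.0
        return (capacity - requested) * 10 / capacity

    cpu = resource_score(cpu_capacity, cpu_requested)
    memory = resource_score(mem_capacity, mem_requested)
    return (cpu + memory) / 2


# Node with 4000m CPU (1000m requested) and 8192 MiB memory (4096 MiB requested):
# CPU score 7.5, memory score 5.0, so the result is 6.25.
print(least_requested_score(4000, 1000, 8192, 4096))
```

A node with more free capacity relative to its size scores higher, which spreads Pods across lightly loaded nodes.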

BalancedResourceAllocation:

Nodes whose CPU and memory utilization ratios are closest to each other win.
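One way to express "closest utilization ratios" as a number is sketched below. The formula (10 minus ten times the absolute difference of the two fractions) is an assumption modelled on older scheduler versions and is not stated in these notes; the function name is hypothetical.

```python
def balanced_allocation_score(cpu_fraction: float, mem_fraction: float) -> float:
    """Score in the range 0..10; higher means CPU and memory usage are closer.

    cpu_fraction and mem_fraction are requested / capacity for each resource.
    """
    # Assumption: a node that would be fully used on either resource scores 0.
    if cpu_fraction >= 1 or mem_fraction >= 1:
        return 0.0
    return (1 - abs(cpu_fraction - mem_fraction)) * 10


# Equal usage (50% CPU, 50% memory) gives the maximum score of 10.0.
print(balanced_allocation_score(0.5, 0.5))
# 25% CPU against 75% memory is unbalanced and gives 5.0.
print(balanced_allocation_score(0.25, 0.75))
```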

NodePreferAvoidPods:

Based on the node annotation "scheduler.alpha.kubernetes.io/preferAvoidPods".

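TaintToleration: compares the entries in the Pod's spec.tolerations with the node's taints; the more taints on the node that the Pod cannot tolerate, the lower the node's score.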

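SelectorSpreading: spreads Pods selected by the same label selector. The more Pods matched by the same selector as the current Pod a node already runs, the lower its score; the fewer, the higher.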

InterPodAffinity: iterates over the Pod's affinity terms and sums the weights of those that match the given node; the larger the sum, the higher the score.

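NodeAffinity: checks how well the node matches the Pod's node selector terms; the more terms match, the higher the score.

MostRequested: the less free capacity a node has, the higher its score. It tries to fill up one node before using the next.

NodeLabel: the more labels match, the higher the score.

ImageLocality: checks whether the node already holds the images the Pod needs. Nodes holding more of them score higher, and the score is computed from the total size of those images.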

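Advanced Kubernetes scheduling

Node selectors: nodeSelector, nodeName

Node affinity scheduling: nodeAffinity

Note: if nodeSelector names a label that no node carries, the Pod stays in the Pending state.

nodeSelector:
  disktype: harddisk

Node affinity scheduling:

Hard affinity (required)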

# vim pod-nodeaffinity-demo.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-node-affinity-demo
  labels:
    app: myapp
    tier: frontend
spec:
  containers:
  - name: myapp
    image: ikubernetes/myapp:v1
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: zone
            operator: In
            values:
            - foo
            - bar
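To make the matching rule of the required node affinity concrete, here is a minimal sketch of how nodeSelectorTerms could be evaluated against a node's labels: the terms are ORed, and the expressions inside one term are ANDed. The function names are hypothetical, and only the In, NotIn, Exists, and DoesNotExist operators are covered (Gt and Lt are left out).

```python
from typing import Dict, List


def expression_matches(labels: Dict[str, str], expr: dict) -> bool:
    """Evaluate one matchExpressions entry against a node's labels."""
    key, operator = expr["key"], expr["operator"]
    values = expr.get("values", [])
    if operator == "In":
        return key in labels and labels[key] in values
    if operator == "NotIn":
        return key not in labels or labels[key] not in values
    if operator == "Exists":
        return key in labels
    if operator == "DoesNotExist":
        return key not in labels
    raise ValueError(f"operator not covered by this sketch: {operator}")


def node_matches(labels: Dict[str, str], node_selector_terms: List[dict]) -> bool:
    """True if at least one term matches; an empty term matches nothing."""
    for term in node_selector_terms:
        expressions = term.get("matchExpressions", [])
        if expressions and all(expression_matches(labels, e) for e in expressions):
            return True
    return False


# The term from pod-nodeaffinity-demo.yaml: zone must be foo or bar.
TERMS = [{"matchExpressions": [{"key": "zone", "operator": "In", "values": ["foo", "bar"]}]}]
```

With these terms, a node labelled zone=foo is eligible, while a node without a zone label is not, which is why the Pod stays Pending until some node carries a matching label.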

Soft affinity (preferred)

# vim pod-nodeaffinity-demo-2.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-node-affinity-demo-2
  labels:
    app: myapp
    tier: frontend
spec:
  containers:
  - name: myapp
    image: ikubernetes/myapp:v1
  affinity:
    nodeAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
      - preference:
          matchExpressions:
          - key: zone
            operator: In
            values:
            - foo
            - bar
        weight: 60
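Unlike the required form, a preferred term never excludes a node; it only adds its weight to the node's score when it matches. The sketch below illustrates that idea under simplifying assumptions: the function name is hypothetical, only the In operator is handled, and the normalization the scheduler applies afterwards is ignored.

```python
from typing import Dict, List


def preferred_affinity_score(node_labels: Dict[str, str], preferred_terms: List[dict]) -> int:
    """Sum the weights of all preferred terms whose expressions match the node."""
    total = 0
    for term in preferred_terms:
        expressions = term["preference"].get("matchExpressions", [])
        # Simplification: only the In operator is evaluated in this sketch.
        if expressions and all(
            e["operator"] == "In" and node_labels.get(e["key"]) in e["values"]
            for e in expressions
        ):
            total += term["weight"]
    return total


# The preference from pod-nodeaffinity-demo-2.yaml.
PREFERRED_TERMS = [
    {
        "weight": 60,
        "preference": {
            "matchExpressions": [{"key": "zone", "operator": "In", "values": ["foo", "bar"]}]
        },
    }
]
```

A node labelled zone=foo would receive 60 points from this term and a node without the label 0 points, but both remain schedulable.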

# vim pod-required-affinity-demo.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-first
  labels:
    app: myapp
    tier: frontend
spec:
  containers:
  - name: myapp
    image: ikubernetes/myapp:v1
---
apiVersion: v1
kind: Pod
metadata:
  name: pod-second
  labels:
    app: backend
    tier: db
spec:
  containers:
  - name: busybox
    image: busybox:latest
    imagePullPolicy: IfNotPresent
    command: ["sh","-c","sleep 3600"]
  affinity:
    podAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
          - {key: app, operator: In, values: ["myapp"]}
        topologyKey: kubernetes.io/hostname
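The role of topologyKey in the manifest above can be illustrated with a small sketch: a node is eligible if it shares its topologyKey value with a node that already runs a Pod matching the label selector. The function name and the data layout are hypothetical, only an In selector is handled, and the `zone` label used in the usage note is an assumed example label.

```python
from typing import Dict, List, Tuple


def nodes_for_pod_affinity(
    nodes: Dict[str, Dict[str, str]],
    running_pods: List[Tuple[Dict[str, str], str]],
    key: str,
    values: List[str],
    topology_key: str,
) -> List[str]:
    """Return the names of the nodes that satisfy a required podAffinity term.

    nodes maps node name -> node labels;
    running_pods is a list of (pod labels, name of the node the Pod runs on).
    """
    # Topology domains that already host a Pod matching the label selector.
    domains = set()
    for pod_labels, node_name in running_pods:
        if pod_labels.get(key) in values:
            domain = nodes[node_name].get(topology_key)
            if domain is not None:
                domains.add(domain)
    # Every node in one of those domains is a valid target.
    return sorted(
        name for name, labels in nodes.items() if labels.get(topology_key) in domains
    )
```

With topologyKey kubernetes.io/hostname each node is its own domain, so pod-second can only land on the node running pod-first. With a coarser key such as a zone label, any node in the same zone would qualify.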

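Note: with a required podAntiAffinity rule and topologyKey kubernetes.io/hostname, the Pod is never scheduled onto the same node as the Pods it matches.

A taint's effect defines how the node repels Pods:

NoSchedule: affects scheduling only; Pods already running on the node are not affected.

NoExecute: affects both scheduling and Pods already running on the node; Pods that do not tolerate the taint are evicted.

PreferNoSchedule: the soft variant of NoSchedule; the scheduler tries to avoid the node for Pods that do not tolerate the taint, but may still use it if no other node fits.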

# kubectl taint node node01 node-type=production:NoSchedule

# kubectl taint node node02 node-type=dev:NoExecute

apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-deploy
  namespace: default
spec:
  replicas: 5
  selector:
    matchLabels:
      app: myapp
      release: canary
  template:
    metadata:
      labels:
        app: myapp
        release: canary
    spec:
      containers:
      - name: myapp
        image: ikubernetes/myapp:v2
        ports:
        - name: http
          containerPort: 80
      tolerations:
      - key: "node-type"
        operator: "Equal"
        value: "production"
        effect: "NoSchedule"

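Alternative tolerations blocks; each one replaces the tolerations block in the Deployment above (a Pod spec can contain only one tolerations key):

      tolerations:
      - key: "node-type"
        operator: "Equal"
        value: "production"
        effect: "NoExecute"
        tolerationSeconds: 3600

      tolerations:
      - key: "node-type"
        operator: "Exists"
        value: ""
        effect: "NoExecute"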
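The difference between the toleration variants is easier to see when the matching rule is written out. The following is a minimal sketch, assuming taints and tolerations are plain dictionaries; the function names are hypothetical, and tolerationSeconds (which only delays eviction) is ignored.

```python
from typing import Dict, List


def tolerates(toleration: Dict[str, str], taint: Dict[str, str]) -> bool:
    """True if a single toleration matches a single taint."""
    # An empty effect in the toleration matches every effect.
    if toleration.get("effect") and toleration["effect"] != taint["effect"]:
        return False
    operator = toleration.get("operator", "Equal")
    key = toleration.get("key", "")
    if operator == "Exists":
        # The value is ignored; an empty key matches every taint key.
        return key == "" or key == taint["key"]
    if operator == "Equal":
        return key == taint["key"] and toleration.get("value", "") == taint.get("value", "")
    return False


def schedulable(tolerations: List[Dict[str, str]], taints: List[Dict[str, str]]) -> bool:
    """True if every NoSchedule/NoExecute taint on the node is tolerated."""
    # PreferNoSchedule is a soft preference and never blocks scheduling.
    blocking = [t for t in taints if t["effect"] in ("NoSchedule", "NoExecute")]
    return all(any(tolerates(tol, taint) for tol in tolerations) for taint in blocking)


# Taints set with the kubectl taint commands earlier in these notes.
NODE01 = [{"key": "node-type", "value": "production", "effect": "NoSchedule"}]
NODE02 = [{"key": "node-type", "value": "dev", "effect": "NoExecute"}]
```

Under these rules the Equal/production/NoSchedule toleration admits the Pods to node01 only, while the Exists/NoExecute toleration admits them to node02 only, because its effect does not cover node01's NoSchedule taint.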

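Container resource requests, limits, and Heapster

requests: the amount requested; the guaranteed minimum.

limits: the hard upper bound.

limits must be greater than or equal to requests

CPU: measured in logical CPUs

1 logical core = 1000 millicores

500m = 0.5 CPU

Memory:

E, P, T, G, M, k (decimal suffixes)

Ei, Pi, Ti, Gi, Mi, Ki (power-of-two suffixes)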
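The unit rules above can be checked with a small converter. This is a simplified sketch, not the Kubernetes quantity parser: the function names are hypothetical, and rarely used forms such as exponent notation or the milli suffix for memory are not handled.

```python
from decimal import Decimal

# Power-of-two suffixes (Ki, Mi, ...) and decimal suffixes (k, M, ...).
_BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40, "Pi": 2**50, "Ei": 2**60}
_DECIMAL = {"k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12, "P": 10**15, "E": 10**18}


def parse_cpu_millicores(quantity: str) -> int:
    """Convert a CPU quantity such as "500m" or "0.5" to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(Decimal(quantity) * 1000)


def parse_memory_bytes(quantity: str) -> int:
    """Convert a memory quantity such as "128Mi" or "1G" to bytes."""
    for suffix, factor in _BINARY.items():
        if quantity.endswith(suffix):
            return int(Decimal(quantity[: -len(suffix)]) * factor)
    for suffix, factor in _DECIMAL.items():
        if quantity.endswith(suffix):
            return int(Decimal(quantity[: -len(suffix)]) * factor)
    return int(Decimal(quantity))  # plain number of bytes


print(parse_cpu_millicores("0.5"))  # 500, the same as "500m"
print(parse_memory_bytes("128Mi"))  # 134217728
```

Note that 1G (1000000000 bytes) and 1Gi (1073741824 bytes) differ by about 7%, so the two suffix families should not be mixed up when comparing requests and limits.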

Remove a node taint

# kubectl taint node node01 node-type-

apiVersion: v1
kind: Pod
metadata:
  name: pod-demo
  namespace: renjin
  labels:
    app: myapp
    tier: frontend
spec:
  containers:
  - name: myapp
    image: ikubernetes/stress-ng
    command: ["/usr/bin/stress-ng", "-c 1", "--metrics-brief"]
    resources:
      requests:
        cpu: "500m"
        memory: "128Mi"
      limits:
        cpu: "500m"
        memory: "512Mi"

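QoS:

Guaranteed: every container sets both requests and limits for CPU and memory, with

cpu.limits = cpu.requests and memory.limits = memory.requests

Burstable: at least one container sets requests for CPU or memory

BestEffort: no container sets any requests or limits; lowest priority. The QoS class is assigned automatically.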
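The three QoS rules can be written as a short classifier. This is a sketch under stated assumptions: the function name is hypothetical, containers are plain dictionaries shaped like the resources section of a Pod spec, a missing request is assumed to default to the limit, and quantities are compared as literal strings (so "500m" and "0.5" would count as different).

```python
from typing import Dict, List


def qos_class(containers: List[Dict]) -> str:
    """Classify a Pod as Guaranteed, Burstable, or BestEffort."""
    anything_set = False
    guaranteed = True
    for container in containers:
        resources = container.get("resources", {})
        requests = resources.get("requests", {})
        limits = resources.get("limits", {})
        if requests or limits:
            anything_set = True
        for resource in ("cpu", "memory"):
            limit = limits.get(resource)
            # Assumption: a missing request defaults to the limit.
            request = requests.get(resource, limit)
            if limit is None or request != limit:
                guaranteed = False
    if not anything_set:
        return "BestEffort"
    return "Guaranteed" if guaranteed else "Burstable"


# The pod-demo container: CPU request equals its limit, memory does not.
POD_DEMO = [
    {
        "resources": {
            "requests": {"cpu": "500m", "memory": "128Mi"},
            "limits": {"cpu": "500m", "memory": "512Mi"},
        }
    }
]
print(qos_class(POD_DEMO))  # Burstable
```

Raising the memory request of pod-demo to 512Mi would make requests equal to limits for both resources and move the Pod into the Guaranteed class.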

Configure InfluxDB

# wget https://raw.githubusercontent.com/kubernetes-retired/heapster/master/deploy/kube-config/influxdb/influxdb.yaml

# Change the following

apiVersion: apps/v1
spec:
  replicas: 1
  selector:
    matchLabels:
      task: monitoring
      k8s-app: influxdb
  template:
    spec:
      containers:
      - name: influxdb
        image: registry.cn-hangzhou.aliyuncs.com/google_containers/heapster-influxdb-amd64:v1.5.2

# kubectl apply -f influxdb.yaml

Configure heapster-rbac

# wget https://raw.githubusercontent.com/kubernetes-retired/heapster/master/deploy/kube-config/rbac/heapster-rbac.yaml

# kubectl apply -f heapster-rbac.yaml

Configure Heapster

# wget https://raw.githubusercontent.com/kubernetes-retired/heapster/master/deploy/kube-config/influxdb/heapster.yaml

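# Modify the following

# Deployment (only the changed fields are shown)
apiVersion: apps/v1
spec:
  replicas: 1
  selector:
    matchLabels:
      task: monitoring
      k8s-app: heapster
  template:
    spec:
      containers:
      - image: registry.cn-hangzhou.aliyuncs.com/google_containers/heapster-amd64:v1.5.4
# Service (only the changed fields are shown)
spec:
  ports:
  - port: 80
    targetPort: 8082
  type: NodePort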

# kubectl apply -f heapster.yaml

# kubectl get svc -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

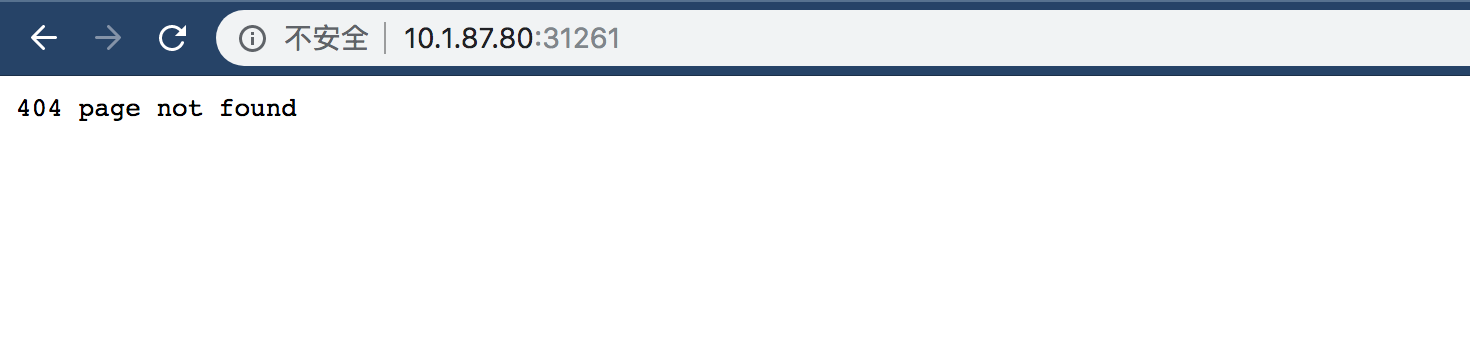

heapster NodePort 10.101.89.141 <none> 80:31261/TCP 63s

kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 48d

kubernetes-dashboard NodePort 10.103.25.80 <none> 443:30660/TCP 21d

monitoring-influxdb ClusterIP 10.103.131.36 <none> 8086/TCP 153m

As the figure below shows, Heapster can now be reached.

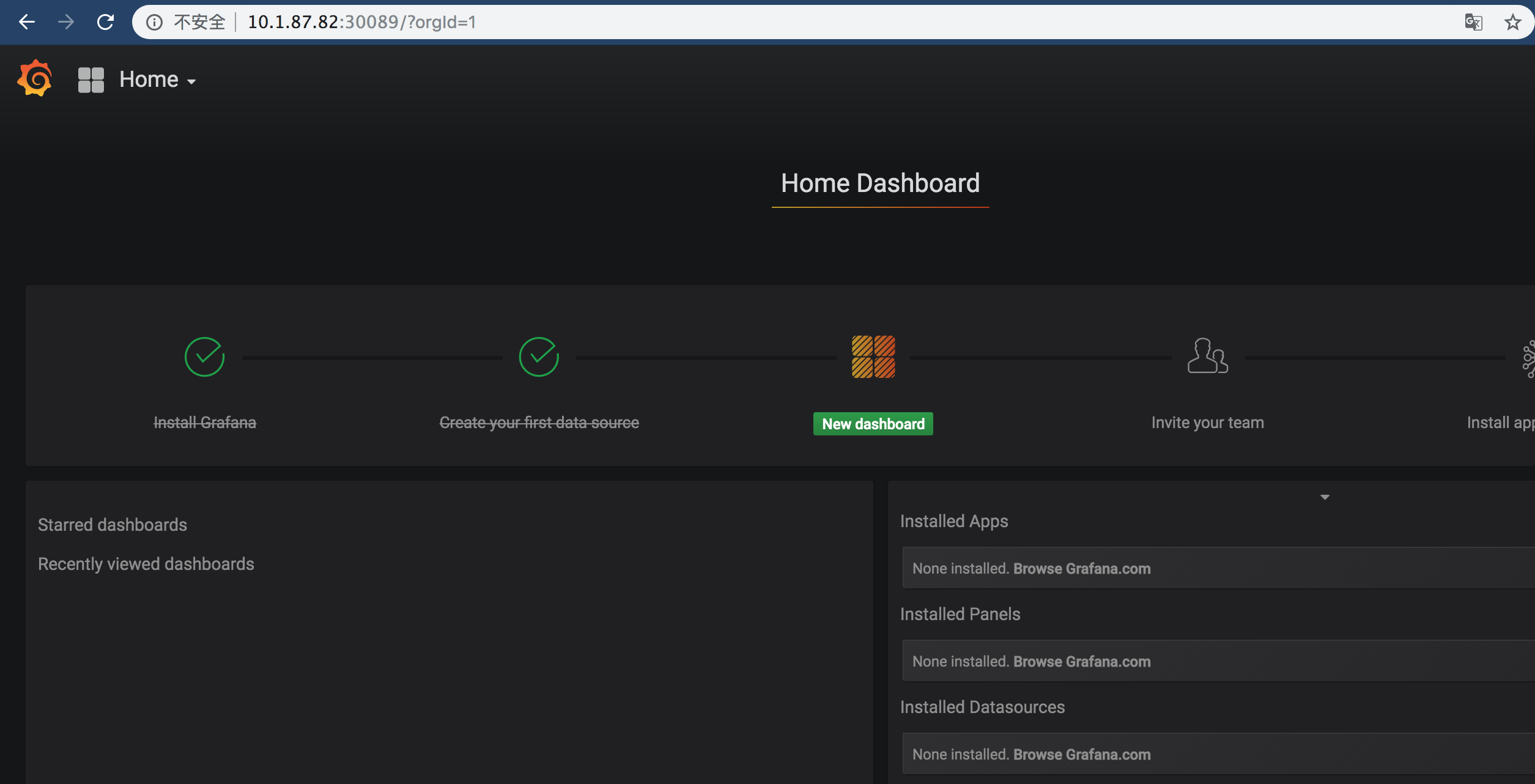

Configure Grafana

# wget https://raw.githubusercontent.com/kubernetes-retired/heapster/master/deploy/kube-config/influxdb/grafana.yaml

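# vim grafana.yaml # modify the following

# Deployment (only the changed fields are shown)
apiVersion: apps/v1
spec:
  replicas: 1
  selector:
    matchLabels:
      task: monitoring
      k8s-app: grafana
  template:
    spec:
      containers:
      - image: registry.cn-hangzhou.aliyuncs.com/google_containers/heapster-grafana-amd64:v5.0.4
# Service (only the changed fields are shown)
spec:
  ports:
  - port: 80
    targetPort: 3000
  type: NodePort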

# kubectl apply -f grafana.yaml