RHCA436: The quorum mechanism of pacemaker + corosync on CentOS 8

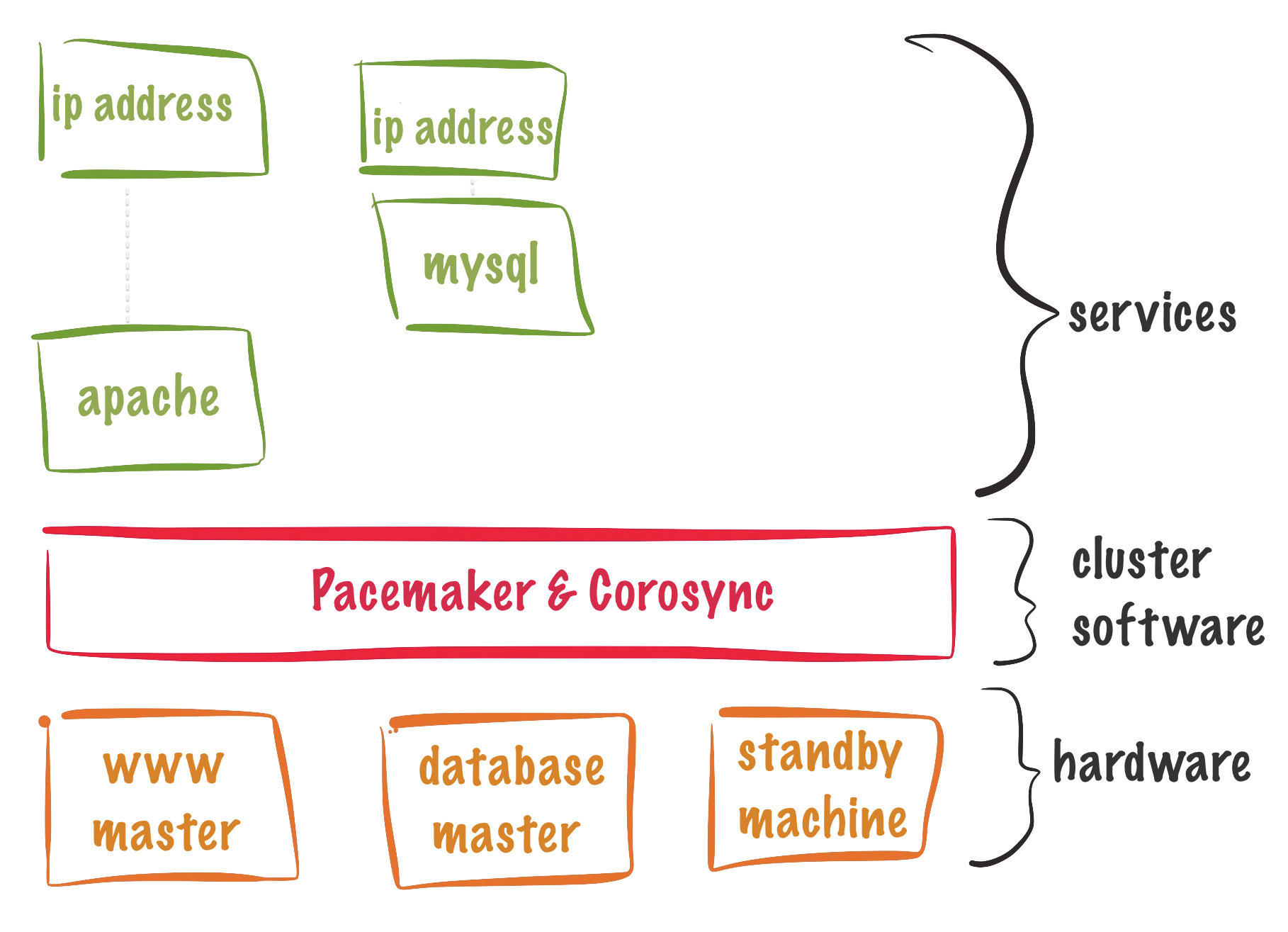

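I. How quorum works

Quorum voting decides the health of a cluster: it either lets failover proceed or takes the cluster offline. When a node fails, the remaining nodes take over and keep providing service. But when communication between nodes breaks down, or when most of the nodes fail, the cluster stops providing service. How many node failures can a cluster tolerate? That is determined by the quorum configuration, which follows the majority rule: as long as the number of healthy nodes reaches the quorum (a majority of the votes), the cluster keeps providing service; otherwise it stops. While service is stopped, the healthy nodes keep monitoring whether the failed nodes have recovered, and once the number of healthy nodes is back at the quorum, the cluster resumes service. Quorum voting is enabled by default.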

II. Calculating votes

Quorum = floor(expected votes / 2) + 1, where floor rounds down: 2.5 becomes 2, and 2.9 also becomes 2.
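As a quick check of the arithmetic, here is a minimal shell sketch. The function name `quorum_threshold` is made up for illustration; it only reproduces the formula and does not query corosync.

```shell
#!/bin/sh
# quorum_threshold EXPECTED_VOTES
# Prints floor(expected_votes / 2) + 1. Shell integer division already
# rounds down for positive integers, so no explicit floor is needed.
# (Hypothetical helper, not part of corosync or pcs.)
quorum_threshold() {
    echo $(( $1 / 2 + 1 ))
}

# Print the quorum for a few cluster sizes.
for n in 2 3 4 5; do
    echo "expected=$n quorum=$(quorum_threshold "$n")"
done
```

For 4 expected votes this gives 3, and for 3 expected votes it gives 2, which matches the `Quorum:` values reported by `corosync-quorumtool` in the sections that follow.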

III. Checking quorum on a 4-node cluster

[root@nodea ~]# corosync-quorumtool

Quorum information

------------------

Date: Thu Feb 10 16:25:53 2022

Quorum provider: corosync_votequorum

Nodes: 4 # number of nodes currently alive in the cluster

Node ID: 1 # the node this command was run on

Ring ID: 1.57

Quorate: Yes # the cluster has quorum

Votequorum information

----------------------

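Expected votes: 4 **# expected votes: the number of nodes entitled to vote; it does not change when nodes fail (unless last_man_standing is enabled)**

Highest expected: 4 **# highest expected votes**

Total votes: 4 **# total votes: the votes of all nodes currently alive**

Quorum: 3 **# the cluster needs at least 3 votes online to stay quorate: floor(expected votes / 2) + 1 = floor(4 / 2) + 1 = 3**

**# with 3 nodes, this value is 2**

Flags: Quorate **# this flag means the cluster is quorate**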

Membership information

----------------------

Nodeid Votes Name

1 1 nodea.private.example.com (local)

2 1 nodeb.private.example.com

3 1 nodec.private.example.com

4 1 noded.private.example.com

[root@nodea ~]# pcs stonith

* fence_nodea (stonith:fence_ipmilan): Started nodea.private.example.com

* fence_nodeb (stonith:fence_ipmilan): Started nodeb.private.example.com

* fence_nodec (stonith:fence_ipmilan): Started nodec.private.example.com

* fence_noded (stonith:fence_ipmilan): Started nodea.private.example.com

[root@nodea ~]# pcs quorum status

4-node cluster with half of the nodes failed: the cluster is unavailable

[root@nodea ~]# corosync-quorumtool

Quorum information

------------------

Date: Thu Feb 10 16:30:28 2022

Quorum provider: corosync_votequorum

Nodes: 2

Node ID: 1

Ring ID: 1.68

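Quorate: No # with only 2 nodes left, quorum is lost

Votequorum information

----------------------

Expected votes: 4 # expected votes does not change, it is still 4

Highest expected: 4

Total votes: 2

Quorum: 3 Activity blocked

Flags: # no Quorate flag: the cluster is unavailable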

Membership information

----------------------

Nodeid Votes Name

1 1 nodea.private.example.com (local)

2 1 nodeb.private.example.com

[root@nodea ~]# pcs status

Cluster name: mucluster

Cluster Summary:

* Stack: corosync

* Current DC: nodeb.private.example.com (version 2.0.4-6.el8-2deceaa3ae) - partition WITHOUT quorum

* Last updated: Thu Feb 10 16:31:31 2022

* Last change: Thu Feb 10 15:55:21 2022 by root via cibadmin on nodea.private.example.com

* 4 nodes configured

* 4 resource instances configured

Node List:

* Online: [ nodea.private.example.com nodeb.private.example.com ]

* OFFLINE: [ nodec.private.example.com noded.private.example.com ]

Full List of Resources:

* fence_nodea (stonith:fence_ipmilan): Stopped # the fence resources are stopped too (by default Pacemaker stops all resources in a partition without quorum)

* fence_nodeb (stonith:fence_ipmilan): Stopped

* fence_nodec (stonith:fence_ipmilan): Stopped

* fence_noded (stonith:fence_ipmilan): Stopped

Daemon Status:

corosync: active/enabled

pacemaker: active/enabled

pcsd: active/enabled
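For scripting, the `Quorate:` field seen in the `corosync-quorumtool` output can be pulled out with a small filter. This is only a sketch: the function name `quorate_from_status` is made up, and it assumes the field layout shown in the outputs in this post.

```shell
#!/bin/sh
# quorate_from_status: read corosync-quorumtool status text on stdin and
# print the value of its "Quorate:" field (Yes or No).
# The "Flags: Quorate ..." line is not matched because it starts with "Flags:".
# (Hypothetical helper, assuming the output layout shown in this post.)
quorate_from_status() {
    awk '/^Quorate:/ { print $2 }'
}

# Sample input modelled on the degraded 4-node output; prints "No".
printf 'Nodes: 2\nQuorate: No\nExpected votes: 4\n' | quorate_from_status
```

On a cluster node it would be used as `corosync-quorumtool -s | quorate_from_status`.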

Quorum state with 3 nodes

1. Remove the node first

[root@nodea ~]# pcs cluster stop noded.private.example.com

[root@nodea ~]# pcs cluster node remove noded.private.example.com

2. Check the quorum state

[root@nodea ~]# corosync-quorumtool

Quorum information

------------------

Date: Thu Feb 10 16:43:29 2022

Quorum provider: corosync_votequorum

Nodes: 3

Node ID: 1

Ring ID: 1.74

Quorate: Yes

Votequorum information

----------------------

Expected votes: 3

Highest expected: 3

Total votes: 3

Quorum: 2 **# at least two nodes must be alive for the cluster to stay quorate**

Flags: Quorate

Membership information

----------------------

Nodeid Votes Name

1 1 nodea.private.example.com (local)

2 1 nodeb.private.example.com

3 1 nodec.private.example.com

Quorum options

man 5 votequorum

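auto_tie_breaker: when a split brain leaves the votes at 50% versus 50%, the partition that contains the node with the lowest node ID keeps running. In effect this is a static priority.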

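Demo: pcs quorum update auto_tie_breaker=1

With two of the four nodes down, the cluster keeps running and stays quorate, provided the surviving half contains the lowest node ID. With only one node left, quorum is lost, and the two_node option is needed.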
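The tie-break rule can be illustrated with a toy model. This is a sketch under assumptions, not corosync's implementation: the function name `partition_quorate` is made up, every node is assumed to hold one vote, node IDs are assumed to run from 1 upward so that 1 is the lowest ID, and only a single simultaneous split is modelled.

```shell
#!/bin/sh
# partition_quorate TOTAL_NODES ID...
#   TOTAL_NODES: number of configured nodes (one vote each, IDs 1..TOTAL_NODES)
#   ID...:       node IDs that are present in this partition
# Prints "quorate" or "blocked" for a cluster with auto_tie_breaker enabled.
# (Toy model for illustration only.)
partition_quorate() {
    total=$1
    shift
    live=$#
    # A strict majority is always quorate.
    if [ $(( live * 2 )) -gt "$total" ]; then
        echo quorate
        return
    fi
    # Exactly half: the side holding the lowest node ID (assumed to be 1) wins.
    if [ $(( live * 2 )) -eq "$total" ]; then
        for id in "$@"; do
            if [ "$id" -eq 1 ]; then
                echo quorate
                return
            fi
        done
    fi
    echo blocked
}

partition_quorate 4 1 2   # half of the nodes, includes node 1
partition_quorate 4 3 4   # the other half
```

In this model the partition with nodes 1 and 2 stays quorate while the partition with nodes 3 and 4 is blocked, so the two halves never run resources at the same time.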

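wait_for_all: when the cluster starts for the first time, it waits until all nodes have been seen before it becomes quorate. Otherwise a starting partition would fence any node it cannot reach, and a rebooted node might never manage to come back up.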

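two_node: for clusters with exactly 2 nodes. The quorum is set to 1, so if one node fails the remaining node can keep providing service. Enabling two_node automatically enables wait_for_all, and two_node is automatically disabled once more than 2 nodes join the cluster.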

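last_man_standing: when enabled, the cluster recalculates expected votes about 10 seconds after nodes are lost (the default last_man_standing_window is 10000 ms). This matters most when the cluster degrades one node at a time: expected votes drops step by step as nodes leave, until only 2 nodes remain. According to votequorum(5), going from 2 nodes down to 1 additionally requires auto_tie_breaker.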

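Notes:

Without this option, expected votes does not change when nodes fail, so once too few nodes remain to reach the quorum the cluster stops working.

With this option, expected votes is recalculated after each failure (once the 10-second window has passed) until only 2 nodes remain, so losing nodes one by one does not stop the cluster. Once only 2 nodes remain, a quorum server is needed to keep the cluster working, or one of the other quorum options; those options cannot solve split brain, whereas a quorum server can.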
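The difference between losing nodes one at a time and losing several at once can be sketched with a toy model. The function name `lms_walk` is made up, each node is assumed to hold one vote, and the 10-second window is ignored: expected votes is simply recalculated after every step in which the cluster stays quorate. It does not model auto_tie_breaker.

```shell
#!/bin/sh
# lms_walk START LOST...
#   START:   number of nodes at the beginning (one vote each)
#   LOST...: how many nodes are lost in each successive step
# After each step, prints the live votes, expected votes and quorum.
# Expected votes is only lowered when the cluster was still quorate,
# which mimics last_man_standing. (Toy model for illustration only.)
lms_walk() {
    expected=$1
    live=$1
    shift
    for lost in "$@"; do
        live=$(( live - lost ))
        quorum=$(( expected / 2 + 1 ))
        if [ "$live" -lt "$quorum" ]; then
            echo "live=$live expected=$expected quorum=$quorum blocked"
            return 0
        fi
        # Still quorate: expected votes shrinks to the live votes.
        expected=$live
        echo "live=$live expected=$expected quorum=$(( expected / 2 + 1 )) quorate"
    done
}

lms_walk 4 1 1   # lose one node, then another
lms_walk 4 2     # lose two nodes at the same moment
```

In this model, losing one node and then another ends at 2 expected votes with the cluster still quorate, while losing two nodes at once leaves 2 live votes against a quorum of 3 and the cluster is blocked.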

Changing quorum options

- Print the quorum options

[root@nodea ~]# pcs quorum config

Options:

[root@nodea ~]# vim /etc/corosync/corosync.conf

quorum {

provider: corosync_votequorum

}

- Stop the cluster first

[root@nodea ~]# pcs cluster stop --all

- Change the quorum options from the command line; the change applies to all nodes

# change the options on the command line

[root@nodea ~]# pcs quorum update auto_tie_breaker=1 wait_for_all=1 last_man_standing=1

Checking corosync is not running on nodes...

nodea.private.example.com: corosync is not running

noded.private.example.com: corosync is not running

nodeb.private.example.com: corosync is not running

nodec.private.example.com: corosync is not running

Sending updated corosync.conf to nodes...

nodea.private.example.com: Succeeded

nodeb.private.example.com: Succeeded

nodec.private.example.com: Succeeded

noded.private.example.com: Succeeded

# check the quorum options

[root@nodea ~]# pcs quorum config

Options:

auto_tie_breaker: 1

last_man_standing: 1

wait_for_all: 1

# the configuration file has been updated

[root@nodea ~]# cat /etc/corosync/corosync.conf

quorum {

provider: corosync_votequorum

last_man_standing: 1

wait_for_all: 1

auto_tie_breaker: 1

}
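To look at only the quorum section instead of the whole file, a small filter is enough. This is a sketch: the function name `quorum_block` is made up, and it assumes the section opens with `quorum {` and closes with `}` at the start of a line, as in the file shown here.

```shell
#!/bin/sh
# quorum_block FILE
# Print the quorum { ... } section of a corosync.conf-style file.
# Assumes the section starts with "quorum {" and ends with "}" in column 1.
# (Hypothetical helper for illustration.)
quorum_block() {
    sed -n '/^quorum {/,/^}/p' "$1"
}

# Demo on a temporary sample file so the sketch runs anywhere.
tmp=$(mktemp)
printf 'totem {\n    version: 2\n}\nquorum {\n    provider: corosync_votequorum\n    wait_for_all: 1\n}\n' > "$tmp"
quorum_block "$tmp"
rm -f "$tmp"
```

On a cluster node it would be called as `quorum_block /etc/corosync/corosync.conf`.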

- Change the options in the configuration file

# edit the configuration file

[root@nodea ~]# cat /etc/corosync/corosync.conf

quorum {

provider: corosync_votequorum

last_man_standing: 1

wait_for_all: 1

auto_tie_breaker: 1

}

# sync the configuration file to the other nodes

[root@nodea ~]# pcs cluster sync

nodea.private.example.com: Succeeded

nodeb.private.example.com: Succeeded

nodec.private.example.com: Succeeded

noded.private.example.com: Succeeded

# check the quorum options

[root@nodea ~]# pcs quorum config

Options:

auto_tie_breaker: 1

last_man_standing: 1

wait_for_all: 1

- Start the cluster again

[root@nodea ~]# pcs cluster start --all

- Check the quorum result

[root@nodea ~]# corosync-quorumtool

Quorum information

------------------

Date: Fri Feb 11 06:28:19 2022

Quorum provider: corosync_votequorum

Nodes: 4

Node ID: 1

Ring ID: 1.a8

Quorate: Yes

Votequorum information

----------------------

Expected votes: 4 **# expected votes is 4**

Highest expected: 4

Total votes: 4

Quorum: 3

Flags: Quorate **WaitForAll LastManStanding AutoTieBreaker # these flags are new**

Membership information

----------------------

Nodeid Votes Name

1 1 nodea.private.example.com (local)

2 1 nodeb.private.example.com

3 1 nodec.private.example.com

4 1 noded.private.example.com

- Test: how expected votes changes on the 4-node cluster after 1 node fails

# shut down noded

[root@noded ~]# init 0

# check again: expected votes has been recalculated

[root@nodea ~]# corosync-quorumtool

Quorum information

------------------

Date: Fri Feb 11 06:29:30 2022

Quorum provider: corosync_votequorum

Nodes: 3

Node ID: 1

Ring ID: 1.ac

Quorate: Yes

Votequorum information

----------------------

Expected votes: 3 **# expected votes has been recalculated to 3**

Highest expected: 3

Total votes: 3

Quorum: 2

Flags: Quorate WaitForAll LastManStanding AutoTieBreaker

Membership information

----------------------

Nodeid Votes Name

1 1 nodea.private.example.com (local)

2 1 nodeb.private.example.com

3 1 nodec.private.example.com

- Test: with 2 of the 4 nodes failed, the cluster still works

[root@nodea ~]# corosync-quorumtool

Quorum information

------------------

Date: Fri Feb 11 06:32:07 2022

Quorum provider: corosync_votequorum

Nodes: 2

Node ID: 1

Ring ID: 1.b0

Quorate: Yes **# still quorate**

Votequorum information

----------------------

Expected votes: 2 **# expected votes drops to 2 and the cluster keeps working**

Highest expected: 2

Total votes: 2

Quorum: 2

Flags: Quorate WaitForAll LastManStanding AutoTieBreaker

Membership information

----------------------

Nodeid Votes Name

1 1 nodea.private.example.com (local)

2 1 nodeb.private.example.com

[root@nodea ~]# pcs cluster status

Cluster Status:

Cluster Summary:

* Stack: corosync

* Current DC: nodeb.private.example.com (version 2.0.4-6.el8-2deceaa3ae) - partition with quorum

* Last updated: Fri Feb 11 06:32:33 2022

* Last change: Fri Feb 11 06:17:33 2022 by hacluster via crmd on nodea.private.example.com

* 4 nodes configured

* 4 resource instances configured

Node List:

* Online: [ nodea.private.example.com nodeb.private.example.com ]

* OFFLINE: [ nodec.private.example.com noded.private.example.com ]

PCSD Status:

nodea.private.example.com: Online

nodeb.private.example.com: Online

noded.private.example.com: Offline

nodec.private.example.com: Offline

**# the fence resources are also fine**

[root@nodea ~]# pcs status

Full List of Resources:

* fence_nodea (stonith:fence_ipmilan): Started nodea.private.example.com

* fence_nodeb (stonith:fence_ipmilan): Started nodeb.private.example.com

* fence_nodec (stonith:fence_ipmilan): Started nodea.private.example.com

* fence_noded (stonith:fence_ipmilan): Started nodeb.private.example.com

- With only 1 node left, the cluster still works

[root@nodea ~]# corosync-quorumtool

Quorum information

------------------

Date: Fri Feb 11 06:36:45 2022

Quorum provider: corosync_votequorum

Nodes: 1

Node ID: 1

Ring ID: 1.b4

Quorate: Yes

Votequorum information

----------------------

Expected votes: 1

Highest expected: 1

Total votes: 1

Quorum: 1

Flags: Quorate WaitForAll LastManStanding AutoTieBreaker

Membership information

----------------------

Nodeid Votes Name

1 1 nodea.private.example.com (local)

**# the fence resources are also fine; if a fence resource shows Stopped, refresh it with pcs stonith refresh**

[root@nodea ~]# pcs status

Full List of Resources:

* fence_nodea (stonith:fence_ipmilan): Started nodea.private.example.com

* fence_nodeb (stonith:fence_ipmilan): Started nodeb.private.example.com

* fence_nodec (stonith:fence_ipmilan): Started nodea.private.example.com

* fence_noded (stonith:fence_ipmilan): Started nodeb.private.example.com

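Note: without these quorum options, once the surviving nodes hold fewer votes than the quorum (for example, half of a 4-node cluster has failed), the cluster becomes unavailable and the fence resources stop as well.

Open issue: a 2-node cluster that stops working (it stops to avoid split brain) can be handled with a quorum server or a quorum disk.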