RHCA436: iSCSI and Multipath Configuration with Pacemaker + Corosync on CentOS 8

iSCSI configuration

- Server (target) configuration

yum -y install targetcli

systemctl enable target

systemctl start target

firewall-cmd --permanent --add-service=iscsi-target

firewall-cmd --reload

targetcli

/> /backstores/block create dev=/dev/vdb name=storage1

/> /iscsi create wwn=iqn.2020-02.example.com:storage1

/> /iscsi/iqn.2020-02.example.com:storage1/tpg1/luns create /backstores/block/storage1

/> /iscsi/iqn.2020-02.example.com:storage1/tpg1/acls create iqn.1994-05.com.redhat:56e1dd2dcc2c

/> saveconfig

/> exit

systemctl restart target

- Steps on all clients (initiators)

yum -y install iscsi-initiator-utils

cat /etc/iscsi/initiatorname.iscsi # show the initiator IQN; if you change it, restart the iscsid service

iscsiadm -m discovery -t st -p 192.168.1.15

iscsiadm -m discovery -t st -p 192.168.2.15

iscsiadm -m node -T iqn.2020-02.example.com:storage1 -l

lsblk # two disks should appear, one per portal

iscsiadm -m node -T iqn.2020-02.example.com:storage1 -u # log out

iscsiadm -m node -T iqn.2020-02.example.com:storage1 -o delete # delete the node record

- Check the session (path) status

[root@nodea ~]# iscsiadm -m session

tcp: [1] 192.168.1.15:3260,1 iqn.2020-02.example.com:storage1 (non-flash)

tcp: [2] 192.168.2.15:3260,1 iqn.2020-02.example.com:storage1 (non-flash)
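The session list can be summarized per target to confirm that both portals are logged in. The helper below is only a sketch: it assumes the `iscsiadm -m session` line layout shown above (target IQN in the fourth field), and the function name `sessions_per_target` is hypothetical.

```shell
# Count iSCSI sessions per target from `iscsiadm -m session` output on stdin.
# Assumption: each session line has the target IQN as its fourth field.
sessions_per_target() {
  awk '{ count[$4]++ } END { for (t in count) print t, count[t] }'
}

# Usage on a live initiator (not run here):
#   iscsiadm -m session | sessions_per_target
```

With two portals per target, a count below 2 suggests that one path is not logged in.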

[root@nodea ~]# iscsiadm -m session --print 1

Target: iqn.2020-02.example.com:storage1 (non-flash)

Current Portal: 192.168.1.15:3260,1

Persistent Portal: 192.168.1.15:3260,1

**********

Interface:

**********

Iface Name: default

Iface Transport: tcp

Iface Initiatorname: iqn.1994-05.com.redhat:56e1dd2dcc2c

Iface IPaddress: 192.168.1.10

Iface HWaddress: default

Iface Netdev: default

SID: 1

iSCSI Connection State: LOGGED IN

iSCSI Session State: LOGGED_IN

Internal iscsid Session State: NO CHANGE

[root@nodeb ~]# udevadm info /dev/sda

S: disk/by-id/wwn-0x60014058c13f0abb6e443c39c364f0b0

E: ID_SERIAL=360014058c13f0abb6e443c39c364f0b0 # device WWID
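Because the WWID is needed later for multipath.conf, it can be convenient to extract it with a script. This is a minimal sketch that assumes the `ID_SERIAL` property format shown above; the function name `wwid_of` is hypothetical.

```shell
# Print the value of the ID_SERIAL property from `udevadm info` output on stdin.
# The optional "E: " prefix is accepted so that property-only output also works.
wwid_of() {
  sed -n 's/^\(E: \)\{0,1\}ID_SERIAL=//p'
}

# Usage on a live system (not run here):
#   udevadm info /dev/sda | wwid_of
```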

Make the device changes take effect

udevadm settle

partprobe

The cluster does not detect a link failure between a host and the storage.

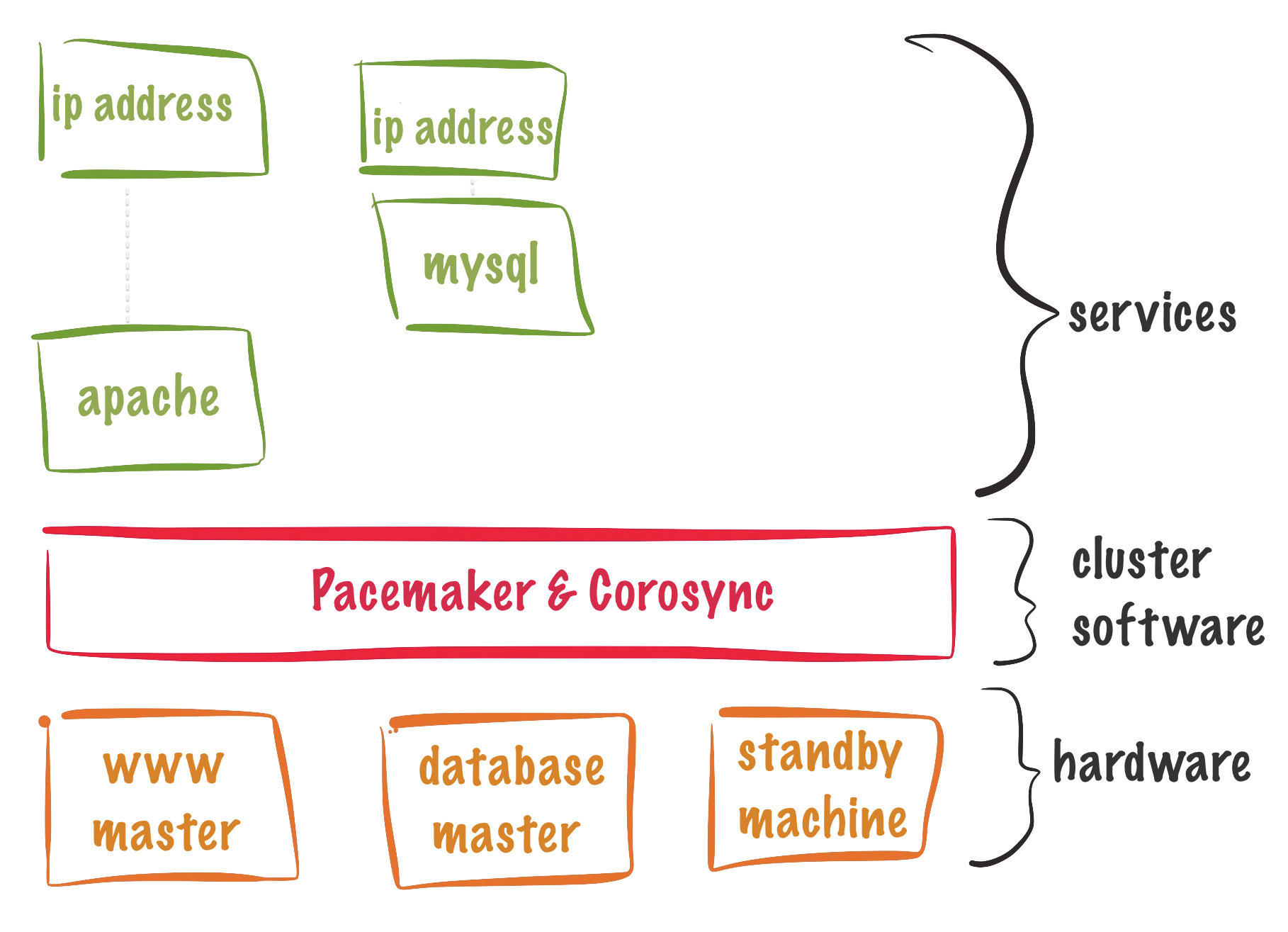

Multipath configuration

Install multipath

yum -y install device-mapper-multipath

systemctl enable --now multipathd

Generate the configuration file

mpathconf --enable --with_multipathd y

ll /etc/multipath.conf

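For a description of the configuration parameters, see man multipath.conf.

multipath -t lists more configuration parameters.

1) defaults: global default settings; lowest priority.

2) multipaths: settings for individual multipath devices; highest priority.

3) devices: settings for specific storage devices; these override the defaults section.

4) blacklist: devices listed here are ignored by multipath.

5) blacklist_exceptions: exceptions to the blacklist; a device listed here is still managed by multipath even if it matches a blacklist entry.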

defaults section

[root@nodea ~]# multipath -t | head -n 40

defaults {

verbosity 2

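polling_interval 5 # interval in seconds between path checks (the minimum)

max_polling_interval 20 # maximum interval in seconds between path checks

user_friendly_names "yes" # use friendly names; otherwise devices are named by WWID

find_multipaths: the default is no, which creates a multipath device for every device not on the blacklist. With yes, a multipath device is created in only three cases: two non-blacklisted paths share the same WWID; the user forces creation manually; or a path has the same WWID as a multipath device created earlier.

path_grouping_policy: how paths are grouped. "failover" puts each path in its own group (the default); "multibus" puts all paths in one group.

path_selector: the path selection algorithm. "round-robin 0" cycles through the paths; "queue-length 0" picks the path with the fewest outstanding I/O requests; "service-time 0" picks the path with the shortest I/O service time.

**Note: this parameter selects among the paths within a single group.**

prio: how the path priority is obtained. "const" returns 1 (the default); "emc" generates priorities for EMC arrays; "alua" generates priorities from the SCSI-3 ALUA settings.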

Example configuration

vim /etc/multipath.conf

defaults {

user_friendly_names yes

polling_interval 30

}

blacklist {

device {

vendor ".*" # regular expression; ".*" matches every vendor (a pattern such as ^sda matches names beginning with sda)

}

}

blacklist_exceptions {

device {

vendor "^NETAPP"

}

}

multipaths{

multipath {

wwid 360014058c13f0abb6e443c39c364f0b0

alias storage1

path_grouping_policy multibus # put all paths in one group

}

}

multipaths section

vim /etc/multipath.conf

multipaths{

multipath {

wwid 360014058c13f0abb6e443c39c364f0b0

# find the wwid with: udevadm info /dev/sda | grep ID_SERIAL

alias storage1

}

}

Validate the syntax; no output means the configuration is fine

multipath

Restart the service

systemctl restart multipathd

Check that the device has appeared

ll /dev/mapper/storage1

View the topology

multipath -ll

Check the syntax and topology

[root@nodea ~]# multipath -ll

storage1 (360014058c13f0abb6e443c39c364f0b0) dm-0 LIO-ORG,storage1

size=10G features='0' hwhandler='1 alua' wp=rw

|-+- policy='service-time 0' prio=0 status=**active**

| `- 7:0:0:0 sdb 8:16 active undef running

`-+- policy='service-time 0' prio=0 status=**enabled**

`- 6:0:0:0 sda 8:0 active undef running

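Note: the two paths are in separate path groups, and the groups operate in active/standby mode.

active: the group currently in use

enabled: the standby group

service-time 0 is the path selection algorithm within a group, not between groups; active/standby is the relationship between groups.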
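To see at a glance which device sits in the active group and which in the standby group, the `multipath -ll` output can be reduced to device/status pairs. This is a rough sketch that assumes the output layout shown above (a `status=` field on each group line, followed by path lines containing an H:C:T:L address and the device name); the function name `path_groups` is hypothetical.

```shell
# Print "<device> <group status>" for each path in `multipath -ll` output on stdin.
path_groups() {
  awk '
    /status=/ {
      # Group line: remember its status for the path lines that follow.
      group = $0
      sub(/.*status=/, "", group)
      gsub(/[^a-z]/, "", group)   # keep only the lowercase letters of the status word
      next
    }
    {
      # Path line: the device name follows the H:C:T:L address.
      for (i = 1; i < NF; i++)
        if ($i ~ /^[0-9]+:[0-9]+:[0-9]+:[0-9]+$/) {
          print $(i + 1), group
          break
        }
    }
  '
}

# Usage on a live system (not run here):
#   multipath -ll storage1 | path_groups
```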

Test

udevadm info /dev/sda and udevadm info /dev/sdb show which path each device corresponds to

[root@nodea ~]# udevadm info /dev/sda

ID_PATH=ip-192.168.2.15:3260-iscsi-iqn.2020-02.example.com:storage1-lun-0

192.168.1.15 /dev/sdb

192.168.2.15 /dev/sda
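The device-to-portal mapping above can also be produced by a script. This is a minimal sketch that assumes the `ID_PATH` format shown in the udevadm output above; the function name `portal_of` is hypothetical.

```shell
# Print the portal IP address found in the ID_PATH property of
# `udevadm info` output read from stdin.
portal_of() {
  sed -n 's/.*ID_PATH=ip-\([0-9.]*\):[0-9]*-iscsi-.*/\1/p'
}

# Usage on a live system (not run here):
#   for d in sda sdb; do
#     printf '%s /dev/%s\n' "$(udevadm info /dev/$d | portal_of)" "$d"
#   done
```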

multipath -ll shows the path status; sdb is the active path

[root@nodea ~]# multipath -ll

storage1 (360014058c13f0abb6e443c39c364f0b0) dm-0 LIO-ORG,storage1

size=10G features='0' hwhandler='1 alua' wp=rw

|-+- policy='service-time 0' prio=0 status=active

| `- 7:0:0:0 sdb 8:16 active undef running

`-+- policy='service-time 0' prio=0 status=enabled

`- 6:0:0:0 sda 8:0 active undef running

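Disconnecting the path for sdb (192.168.1.15) takes down the active path, so I/O stalls briefly while multipath fails over.

Disconnecting the path for sda (192.168.2.15) takes down the standby path, so I/O is unaffected.

nmcli device disconnect eth2 # path to 192.168.1.15

nmcli device disconnect eth3 # path to 192.168.2.15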

Test

mount /dev/mapper/storage1p1 /storage1

echo xxxx > /storage1/test1

Set the path selection algorithm within a group

Edit the configuration file

vim /etc/multipath.conf

multipaths {

multipath {

wwid "360014058c13f0abb6e443c39c364f0b0"

alias "storage1"

path_grouping_policy "multibus" # put all paths in one group

path_selector "round-robin 0" # switch to round-robin

}

}

[root@nodea ~]# systemctl restart multipathd

[root@nodea ~]# multipath -ll

storage1 (360014058c13f0abb6e443c39c364f0b0) dm-0 LIO-ORG,storage1

size=10G features='0' hwhandler='1 alua' wp=rw

`-+- policy='round-robin 0' prio=50 status=active

|- 6:0:0:0 sdb 8:16 active ready running

`- 7:0:0:0 sda 8:0 active ready running

Note: the two paths in the group are used in round-robin fashion.

Test

[root@nodea ~]# dd if=/dev/zero of=/storage1/test2 bs=1M count=1000

[root@nodea ~]# iostat -d 1

Device tps kB_read/s kB_wrtn/s kB_read kB_wrtn

vda 4.00 0.00 236.00 0 236

sdb 56.00 0.00 71680.00 0 71680

sda 56.00 0.00 43008.00 0 43008

dm-0 112.00 0.00 114688.00 0 114688

dm-1 56.00 0.00 114688.00 0 114688
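To quantify how the writes were spread over the two paths, the write rates from iostat can be turned into percentages. This is a sketch under the assumption that the output uses the column layout shown above (kB_wrtn/s in the fourth column); the function name `path_share` is hypothetical.

```shell
# Print each path's share of the write throughput.
# $1 and $2 are the path device names; `iostat -d` output is read from stdin.
# If the input contains several reports, the rates are summed across all of them.
path_share() {
  awk -v a="$1" -v b="$2" '
    $1 == a || $1 == b { w[$1] += $4; total += $4 }
    END {
      if (total > 0)
        printf "%s %.1f%% %s %.1f%%\n", a, 100 * w[a] / total, b, 100 * w[b] / total
    }
  '
}

# Usage on a live system (not run here):
#   iostat -d 1 5 | path_share sdb sda
```

For the sample report above this gives 62.5% for sdb and 37.5% for sda, so both paths carry I/O, though not in equal amounts during that one-second interval.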

Remove the multipath device maps (only unused maps are flushed)

multipath -F

Test whether losing the storage paths affects the cluster

[root@nodea ~]# pcs resource config

Group: mygroup

Resource: myfs (class=ocf provider=heartbeat type=Filesystem)

Attributes: device=/dev/mapper/storage1p1 directory=/var/www/html fstype=xfs

Operations: monitor interval=20s timeout=40s (myfs-monitor-interval-20s)

start interval=0s timeout=60s (myfs-start-interval-0s)

stop interval=0s timeout=60s (myfs-stop-interval-0s)

Resource: myip (class=ocf provider=heartbeat type=IPaddr2)

Attributes: cidr_netmask=24 ip=172.25.250.99

Operations: monitor interval=10s timeout=20s (myip-monitor-interval-10s)

start interval=0s timeout=20s (myip-start-interval-0s)

stop interval=0s timeout=20s (myip-stop-interval-0s)

Resource: myweb (class=systemd type=httpd)

Operations: monitor interval=60 timeout=100 (myweb-monitor-interval-60)

start interval=0s timeout=100 (myweb-start-interval-0s)

stop interval=0s timeout=100 (myweb-stop-interval-0s)

[root@nodea ~]# pcs resource

* Resource Group: mygroup:

* myfs (ocf::heartbeat:Filesystem): Started nodea.private.example.com

* myip (ocf::heartbeat:IPaddr2): Started nodea.private.example.com

* myweb (systemd:httpd): Started nodea.private.example.com

Take down both storage paths on nodea and test again

[root@nodea ~]# nmcli device disconnect eth2

Device 'eth2' successfully disconnected.

[root@nodea ~]# nmcli device disconnect eth3

Device 'eth3' successfully disconnected.

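Result: the resources did not fail over, which shows that the cluster cannot detect the state of the links between a host and the storage.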