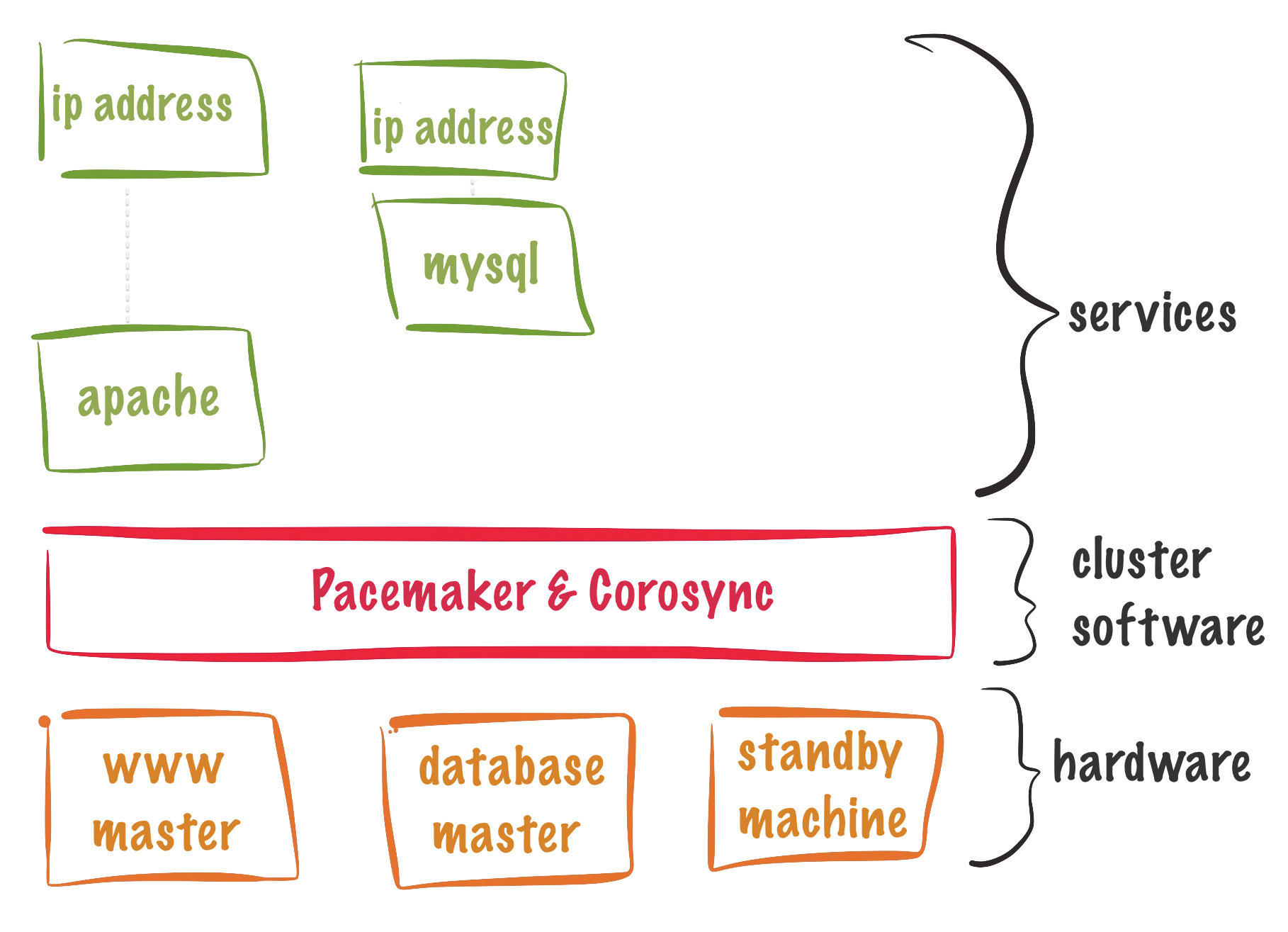

RHCA436: GFS2 cluster file system with pacemaker + corosync on CentOS 8

Configuring the cluster file system

Preparation (applies only to the Red Hat lab environment)

lab start gfs-create

1. Set the cluster's no-quorum policy

pcs property set no-quorum-policy=freeze

View the configuration with pcs property:

pcs property list --all

pcs property show no-quorum-policy

See man pacemaker-schedulerd for help:

no-quorum-policy = enum [stop]

What to do when the cluster does not have quorum

What to do when the cluster does not have quorum Allowed values: stop, freeze, ignore, demote, suicide

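no-quorum-policy controls what the cluster does when it falls below the minimum number of votes needed for quorum. By default (stop) the cluster stops all resources. freeze keeps already running resources running but starts no new ones, so the cluster holds its current state until quorum is restored. ignore disregards the loss of quorum and keeps managing resources.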
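As a minimal sketch of checking the setting before changing it, the helper below extracts the `no-quorum-policy` value from property listing text such as the output of `pcs property list --all`. The function name `quorum_policy` is hypothetical, and the assumption that each property sits on its own line as `name: value` or `name=value` may not hold for every pcs version.

```shell
#!/bin/sh
# Hypothetical helper: read property listing text on stdin and print the
# value of no-quorum-policy. Assumes lines look like "name: value" or
# "name=value"; adjust the pattern if your pcs version prints differently.
quorum_policy() {
    sed -n 's/^[[:space:]]*no-quorum-policy[:=][[:space:]]*\([^[:space:]]*\).*$/\1/p' | head -n 1
}

# Example with canned text (no cluster needed):
printf ' no-quorum-policy: freeze\n' | quorum_policy
```

On a cluster node this could be used as `pcs property list --all | quorum_policy`, running `pcs property set no-quorum-policy=freeze` only when the result is not already `freeze`.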

2. Install the package on all nodes

yum -y install gfs2-utils

3. Format the file system

mkfs.gfs2 -j 4 -t cluster1:gfsdata /dev/sharevg/sharelv1

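-t: lock table name in the form clustername:fsname; the cluster name must match the name of the running cluster

-j: number of journals (default 1); each node that mounts the file system needs its own journal

-J: journal size; the minimum and the default are both 8 MB in this environment, and the maximum is 1 GB; Red Hat recommends more than 128 MB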
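The options above can be sketched as a small command builder. It only prints the `mkfs.gfs2` command line so it can be reviewed before anything is formatted. The function name `gfs2_mkfs_cmd` and its argument order are hypothetical; the values in the example call are the ones used in this walkthrough.

```shell
#!/bin/sh
# Hypothetical helper: print (not run) an mkfs.gfs2 command line.
# Arguments: cluster name, file system name, number of journals, device.
gfs2_mkfs_cmd() {
    cluster=$1 fsname=$2 journals=$3 device=$4
    # The lock table passed to -t has the form <cluster>:<fsname>.
    printf 'mkfs.gfs2 -j %s -t %s:%s %s\n' "$journals" "$cluster" "$fsname" "$device"
}

# Four journals for a three-node cluster leaves one spare for a future node.
gfs2_mkfs_cmd cluster1 gfsdata 4 /dev/sharevg/sharelv1
```

Printing instead of executing is a deliberate choice here, since formatting the wrong device destroys its data.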

# View the journal sizes

gfs2_edit -p journals /dev/mapper/sharevg-sharelv1

Block #Journal Status: of 524288 (0x80000)

-------------------- Journal List --------------------

journal0: 0x12 8MB clean.

journal1: 0x81b 8MB dirty.

journal2: 0x1024 8MB clean.

journal3: 0x182d 8MB clean.

------------------------------------------------------
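Because every node that mounts the file system needs its own journal, it can help to compare the journal count with the node count. The sketch below does that on the sample output shown above; the function name `count_journals` is hypothetical, the node count of 3 is an assumption taken from this lab (nodea, nodeb, nodec), and the pattern assumes each journal is listed on a line starting with `journalN:`.

```shell
#!/bin/sh
# Hypothetical helper: count "journalN:" lines in journal listing text
# read from stdin.
count_journals() {
    grep -c '^[[:space:]]*journal[0-9][0-9]*:'
}

# Sample taken from the output shown in this walkthrough.
sample='journal0: 0x12 8MB clean.
journal1: 0x81b 8MB dirty.
journal2: 0x1024 8MB clean.
journal3: 0x182d 8MB clean.'

nodes=3   # assumed node count for this lab
count=$(printf '%s\n' "$sample" | count_journals)
if [ "$count" -ge "$nodes" ]; then
    echo "ok: $count journals for $nodes nodes"
else
    echo "too few journals: $count for $nodes nodes"
fi
```

On a real system the sample would be replaced by `gfs2_edit -p journals <device> | count_journals`. If there are too few journals, `gfs2_jadd` can add more to a mounted file system.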

# View the file system's cluster (lock table) information

[root@nodec ~]# tunegfs2 -l /dev/sharevg/sharelv1

tunegfs2 (Jun 9 2020 13:30:19)

File system volume name: cluster1:gfsdata

File system UUID: 9194a2ca-da3b-41bc-9611-a97a7db658e2

File system magic number: 0x1161970

Block size: 4096

Block shift: 12

Root inode: 9284

Master inode: 2072

Lock protocol: lock_dlm

Lock table: cluster1:gfsdata
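The cluster part of the lock table has to match the name of the cluster that mounts the file system. As a minimal sketch, the helper below pulls that part out of `tunegfs2 -l` output so it can be compared with the `cluster_name` value in `/etc/corosync/corosync.conf`. The function name `lock_table_cluster` is hypothetical, and the parsing assumes the `Lock table:` line format shown above.

```shell
#!/bin/sh
# Hypothetical helper: print the cluster part of the "Lock table:" line
# from tunegfs2 -l output read on stdin.
lock_table_cluster() {
    sed -n 's/^[[:space:]]*Lock table:[[:space:]]*\([^:]*\):.*$/\1/p'
}

# Example with the line shown in this walkthrough:
printf 'Lock table: cluster1:gfsdata\n' | lock_table_cluster
```

On a node this would be `tunegfs2 -l /dev/sharevg/sharelv1 | lock_table_cluster`.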

4. Create the file system resource

pcs resource create clusterfs Filesystem device=/dev/sharevg/sharelv1 directory=/srv/data fstype=gfs2 op monitor interval=10s on-fail=fence --group=LVMshared

5. Check the resource status

pcs status --full

6. Check the mounts on every node

[root@nodea ~]# mount | grep gfs2

/dev/mapper/sharevg-sharelv1 on /srv/data type gfs2 (rw,relatime,seclabel)

[root@nodeb ~]# mount | grep gfs2

/dev/mapper/sharevg-sharelv1 on /srv/data type gfs2 (rw,relatime,seclabel)

[root@nodec ~]# mount | grep gfs2

/dev/mapper/sharevg-sharelv1 on /srv/data type gfs2 (rw,relatime,seclabel)
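The per-node check above can be scripted. This is a minimal sketch, assuming the `mount` output format shown above (`<device> on <dir> type <fstype> (...)`); the function name `gfs2_mounted_on` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: succeed if mount output on stdin shows a gfs2
# file system mounted on the given directory.
gfs2_mounted_on() {
    awk -v dir="$1" '$2 == "on" && $3 == dir && $5 == "gfs2" { found = 1 } END { exit !found }'
}

# Example with the line shown in this walkthrough:
line='/dev/mapper/sharevg-sharelv1 on /srv/data type gfs2 (rw,relatime,seclabel)'
if printf '%s\n' "$line" | gfs2_mounted_on /srv/data; then
    echo "gfs2 mounted on /srv/data"
fi
```

To cover the whole cluster, the same function could be fed from each node in a loop, for example `ssh root@nodea mount | gfs2_mounted_on /srv/data`, repeated for nodeb and nodec.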

7. Extend the file system

[root@nodea ~]# lvextend -L 2G /dev/sharevg/sharelv1

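# -T is a test run only: it does the calculations but writes nothing to disk

[root@nodea ~]# gfs2_grow -T /dev/sharevg/sharelv1

# Run again without -T to actually grow the file system

[root@nodea ~]# gfs2_grow /dev/sharevg/sharelv1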

[root@nodea ~]# df -hT | grep gfs2

/dev/mapper/sharevg-sharelv1 gfs2 2.0G 37M 2.0G 2% /srv/data

[root@nodeb ~]# df -hT | grep gfs2

/dev/mapper/sharevg-sharelv1 gfs2 2.0G 37M 2.0G 2% /srv/data

[root@nodec ~]# df -hT | grep gfs2

/dev/mapper/sharevg-sharelv1 gfs2 2.0G 37M 2.0G 2% /srv/data
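The grow procedure can be summarized as a short plan: enlarge the logical volume, do a test run, then grow for real. `gfs2_grow` operates on a mounted file system and only needs to run on one node. The sketch below prints the steps for review rather than executing them; the function name `gfs2_grow_plan` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print the grow steps for review instead of running them.
# Arguments: logical volume path, new size.
gfs2_grow_plan() {
    lv=$1 size=$2
    echo "lvextend -L $size $lv"   # enlarge the logical volume first
    echo "gfs2_grow -T $lv"        # test run: calculates, writes nothing
    echo "gfs2_grow $lv"           # real run: grows the file system
}

gfs2_grow_plan /dev/sharevg/sharelv1 2G
```

After the real run, `df -hT | grep gfs2` on each node should report the new size, as in the output above.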

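8. Change the cluster file system label (lock table)

# Unmount the file system on all nodes before changing the lock table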

tunegfs2 -o locktable=cluster1:gfs /dev/sharevg/sharelv1

tunegfs2 -l /dev/sharevg/sharelv1 | grep 'Lock table'

Lock table: cluster1:gfs

Install and configure the httpd service

Run on all three nodes:

yum install httpd -y

firewall-cmd --add-service=http --permanent

firewall-cmd --reload

ssh root@nodea

mkdir -p /var/www/html

echo "RH436 Training" > /var/www/html/index.html

restorecon -Rv /var/www/html

Configure the service and IP resources

pcs resource create webip IPaddr2 ip=172.25.250.80 cidr_netmask=24 --group=myweb

pcs resource create webserver apache --group=myweb

Configure the resource start order

pcs constraint order start LVMshared-clone then myweb
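An ordering constraint only controls the start sequence, not where resources run. A colocation constraint is often added alongside it so the dependent group is placed on a node where the first resource is active. The sketch below prints both commands for review; the function name `constraint_cmds` is hypothetical, and the colocation line is an assumption about a typical setup, not a step taken from this walkthrough.

```shell
#!/bin/sh
# Hypothetical helper: print constraint commands for a dependent resource.
# Arguments: resource that must start first, resource that depends on it.
constraint_cmds() {
    first=$1 dependent=$2
    echo "pcs constraint order start $first then $dependent"
    # Assumption: the dependent resource should also run where $first is active.
    echo "pcs constraint colocation add $dependent with $first"
}

constraint_cmds LVMshared-clone myweb
```

The resulting constraints can be reviewed afterwards with `pcs constraint`.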